Scientists’ understanding of dark energy may be completely wrong

Published 2 weeks ago

On April 4th, astronomers who created the largest and most detailed 3D map ever made of the universe announced that they may have found a major flaw in their understanding of dark energy, the mysterious force driving the universe’s expansion.

Dark energy has long been assumed to be a constant force in the universe, both in the current era and throughout cosmic history. But the new data suggest that dark energy may be more changeable, growing stronger or weaker over time, reversing, or even fading away.

Adam Riess, an astronomer at Johns Hopkins University and the Space Telescope Science Institute in Baltimore, who was not involved in the new study, was quoted by the New York Times as saying, “The new finding may be the first real clue we’ve had in 25 years about the nature of dark energy.” In 2011, Riess shared the Nobel Prize in Physics with two other astronomers for the discovery of dark energy.

The recent conclusion, if confirmed, could free astronomers and other scientists from a grim prediction about the ultimate fate of the universe. If dark energy has a constant effect over time, it will eventually push all the stars and galaxies so far apart that even atoms may be torn apart, and the universe, along with all the life, light, and energy in it, will be destroyed forever. Instead, it appears that dark energy can change course and steer the universe toward a more fruitful future.

Dark energy may become stronger or weaker, reverse or even disappear over time

However, nothing is certain. The new finding has about a 1 in 400 chance of being a statistical fluke. More precisely, the statistical significance of the new finding is three sigma, well below the gold standard for scientific discoveries, five sigma, which corresponds to a chance of one in 1.7 million. In the history of physics, even five-sigma results have been overturned when more data or better interpretations emerged.
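The link between "sigma" and the odds quoted above can be sketched in a few lines. This is a minimal illustration that assumes a normal distribution and a two-sided test (deviations in either direction count); the function names are made up for this example and are not taken from the DESI analysis.

```python
import math


def two_sided_p_value(n_sigma: float) -> float:
    """Chance that a normally distributed quantity lands more than
    n_sigma standard deviations from its mean, in either direction."""
    return math.erfc(n_sigma / math.sqrt(2))


def one_in(n_sigma: float) -> float:
    """Express the same chance as 'one in N'."""
    return 1.0 / two_sided_p_value(n_sigma)


for n in (3, 5):
    print(f"{n} sigma: about 1 in {one_in(n):,.0f}")
```

Under these assumptions, three sigma comes out at roughly 1 in 370, in line with the "about 1 in 400" quoted above, and five sigma at roughly 1 in 1.7 million.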

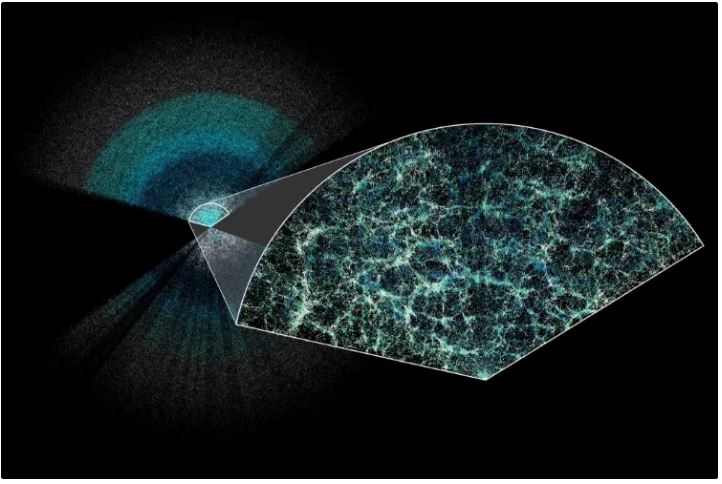

The recent discovery is considered a preliminary report and has been published as a series of papers by the group responsible for an international project called the Dark Energy Spectroscopic Instrument, or DESI for short. The group has just begun a five-year effort to create a three-dimensional map of the positions and velocities of 40 million galaxies across 11 billion years of cosmic history. The researchers built their initial map from the first year of observations, covering just six million galaxies. The results were presented April 4 at the American Physical Society meeting in Sacramento, California, and at a conference in Italy.

“So far we’re seeing initial consistency with our best model of the universe,” DESI director Michael Levi said in a statement released by Lawrence Berkeley National Laboratory, the center overseeing the project. “But we also see some potentially interesting differences that may indicate the evolution of dark energy over time.”

The DESI team did not expect to strike gold so soon, Nathalie Palanque-Delabrouille, an astrophysicist at Lawrence Berkeley Lab and project spokeswoman, said in an interview. The first year’s results were meant only to confirm what was already known, she said: “We thought we would basically validate the standard model.” But the unknown appeared before the researchers’ eyes.

The researchers’ new map is not fully compatible with the standard model

When the scientists combined their map with other cosmological data, they were surprised to find that it did not completely fit the standard model of cosmology. This model assumes that dark energy is constant and unchanging, whereas a varying dark energy fits the new data better. However, Dr. Palanque-Delabrouille sees the new finding as an intriguing clue that has not yet amounted to definitive proof.

University of Chicago astrophysicist Wendy Freedman, who has led scientific efforts to measure the expansion of the universe, described the team’s results as “tremendous findings that have the potential to open a new window into understanding dark energy.” As the dominant force in the universe, dark energy remains the greatest mystery in cosmology.

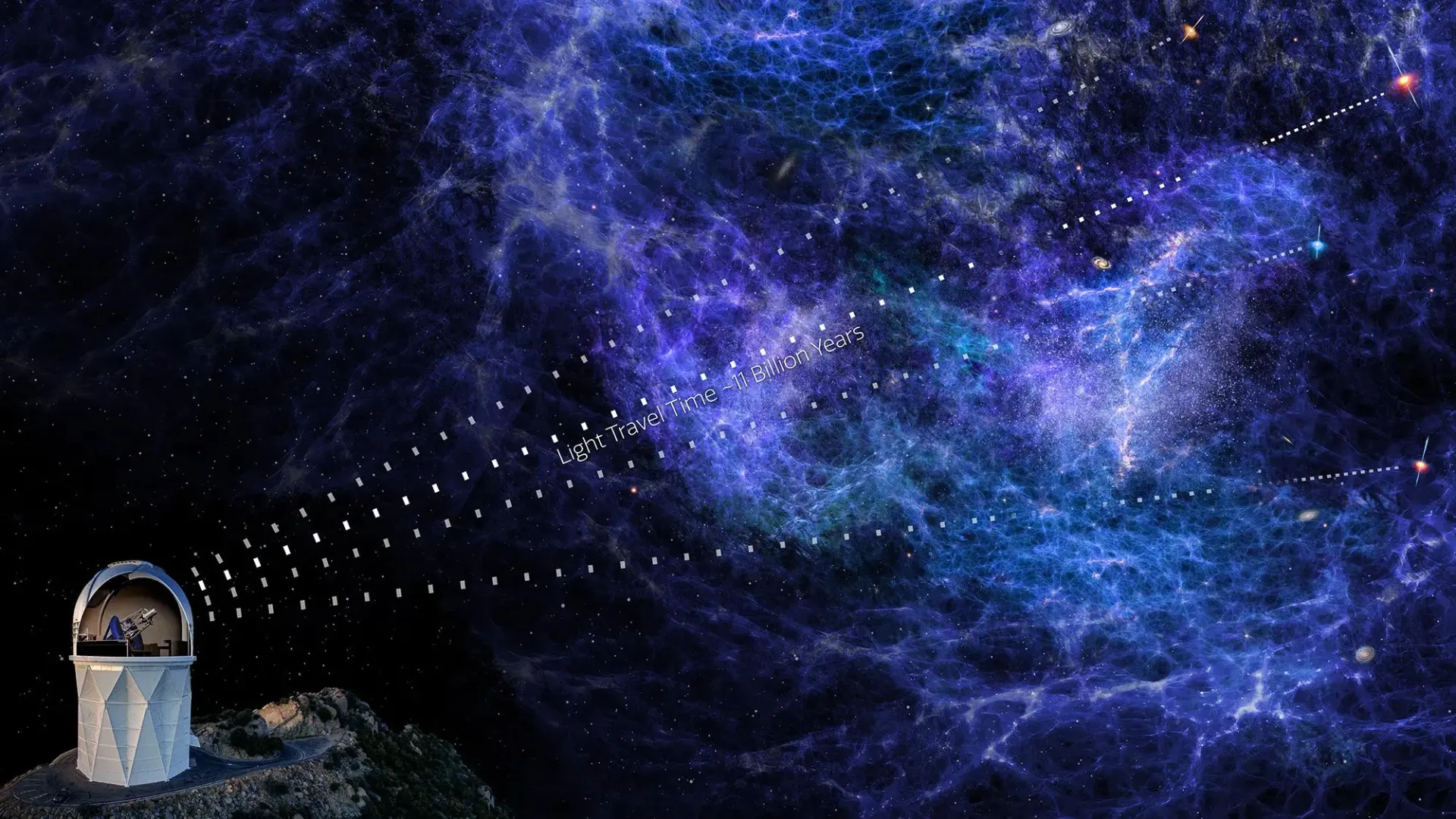

Artistic rendering of quasar light passing through intergalactic clouds of hydrogen gas. This light provides clues to the structure of the distant universe.

NOIRLab/NSF/AURA/P. Marenfeld and DESI collaboration

The idea of dark energy was proposed in 1998, when two competing groups of astronomers, including Dr. Riess, discovered that the expansion of the universe was speeding up rather than slowing down, contrary to what most scientists expected. Early observations seemed to show that dark energy behaved just like the famous “fudge factor” denoted by the Greek letter lambda. Albert Einstein included lambda in his equations to explain why the universe does not collapse under its own gravity, but he later called it his biggest blunder.

However, Einstein probably judged too soon. Lambda, as formulated by Einstein, was a property of space itself: as the universe expands, the more space there is, the more dark energy there is, which pushes ever harder, eventually leading to an unbridled, lightless future.

Dark energy was incorporated into the standard model of cosmology, known as LCDM, in which the universe consists of about 70% dark energy (lambda), 25% cold dark matter (a collection of slow-moving exotic particles), and 5% atomic matter. Although this model has recently been challenged by observations from the James Webb Space Telescope, it still holds up. However, what if dark energy is not as constant as the cosmological model assumes?

The question comes down to a parameter called w, which describes how the density, or strength, of dark energy behaves as the universe expands. In Einstein’s version of dark energy, this parameter stays fixed at negative one throughout the life of the universe. Cosmologists have used this value in their models for the past 25 years.
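To see why a value of negative one corresponds to constant dark energy, here is a minimal sketch based on the standard textbook relation for a constant w, in which the density scales as a^(-3(1+w)), where a is the size of the universe relative to today. The function name and the alternative values of w are illustrative assumptions; the sketch does not model a w that itself changes over time.

```python
def dark_energy_density_ratio(scale_factor: float, w: float) -> float:
    """Dark energy density relative to today (scale_factor = 1),
    assuming w stays constant: rho is proportional to a^(-3(1+w))."""
    return scale_factor ** (-3.0 * (1.0 + w))


# Compare Einstein's value (w = -1) with two hypothetical alternatives,
# at the time when the universe was half its present size.
for w in (-1.0, -0.9, -1.1):
    ratio = dark_energy_density_ratio(0.5, w)
    print(f"w = {w:+.1f}: density was {ratio:.3f} times today's value")
```

With w equal to negative one the exponent vanishes and the density never changes. Any other value makes the density drift as the universe expands, which is the kind of departure the new measurements hint at.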

Albert Einstein included lambda in his equations to explain why the universe does not collapse under its own gravity.

But Einstein’s hypothesis of dark energy is only the simplest version. “With the DESI project we now have the precision that allows us to go beyond that simple model to see if the dark energy density is constant over time or if it fluctuates and evolves over time,” says Dr. Palanque-Delabrouille.

The DESI project, 14 years in the making, is designed to test the constancy of this energy by measuring the expansion rate of the universe at different times in the past. To do this, scientists equipped one of the telescopes at Kitt Peak National Observatory in Arizona, USA, with five thousand fiber-optic detectors that can take spectra of a large number of galaxies at the same time to find out how fast they are moving away from Earth.

The researchers used fluctuations in the cosmic distribution of galaxies, known as baryon acoustic oscillations, as a measure of distance. Sound waves in the hot plasma that filled the early universe imprinted these oscillations on the cosmos when it was only 380,000 years old. At that time, the oscillations were half a million light-years across. Now, 13.5 billion years later, the universe has expanded a thousandfold, and the oscillations, now 500 million light-years across, serve as convenient rulers for cosmic measurements.
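The arithmetic behind the "cosmic ruler" can be sketched as follows. The first function simply applies the thousandfold expansion quoted above. The second uses the small-angle rule (distance equals size divided by apparent angle) to show how a ruler of known size gives a distance; the angle used is a hypothetical number chosen for illustration, and a real analysis would also have to account for the expansion of space along the line of sight.

```python
def stretched_size_ly(initial_size_ly: float, expansion_factor: float) -> float:
    """Size of a feature after space has expanded by the given factor."""
    return initial_size_ly * expansion_factor


def distance_from_angle_ly(ruler_size_ly: float, angle_rad: float) -> float:
    """Small-angle estimate: distance = physical size / apparent angle."""
    return ruler_size_ly / angle_rad


ruler_today_ly = stretched_size_ly(0.5e6, 1000.0)
print(f"Ruler size today: {ruler_today_ly:.1e} light-years")

# Hypothetical apparent angle of 0.05 radians, for illustration only.
print(f"Implied distance: {distance_from_angle_ly(ruler_today_ly, 0.05):.1e} light-years")
```

The smaller the ruler appears on the sky, the farther away it must be, which is how the same feature can be used to measure distances at different cosmic epochs.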

DESI scientists divided the last 11 billion years of cosmic history into seven time periods and measured the size of the oscillations and the speed at which the galaxies in them are moving away from us and from each other. When the researchers put all the data together, they found that the assumption of constant dark energy does not fully explain the expansion of the universe. Galaxies in the three most recent periods appeared closer than they should be, an observation that suggests dark energy may be evolving over time.

“We’re actually seeing a clue that the properties of dark energy don’t fit a simple cosmological constant, and instead may have some deviations,” says Dr. Palanque-Delabrouille. However, she believes the new finding is still too weak to count as proof. Time and more data will determine the fate of dark energy and of the model the researchers tested.

James Webb space telescope map of the climate of an exoplanet

Published 15 hours ago on 02/05/2024

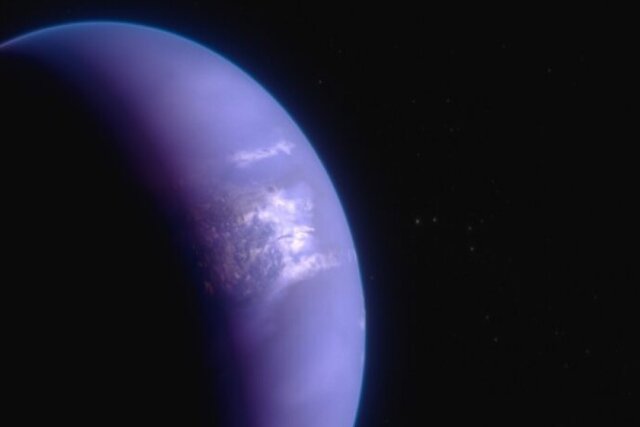

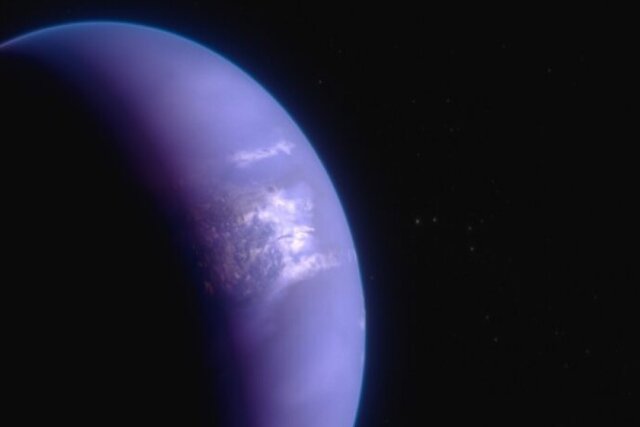

The James Webb Space Telescope helped researchers map the climate of an exoplanet.

An international team of researchers has successfully used the James Webb Space Telescope to map the climate of a hot gas giant exoplanet.

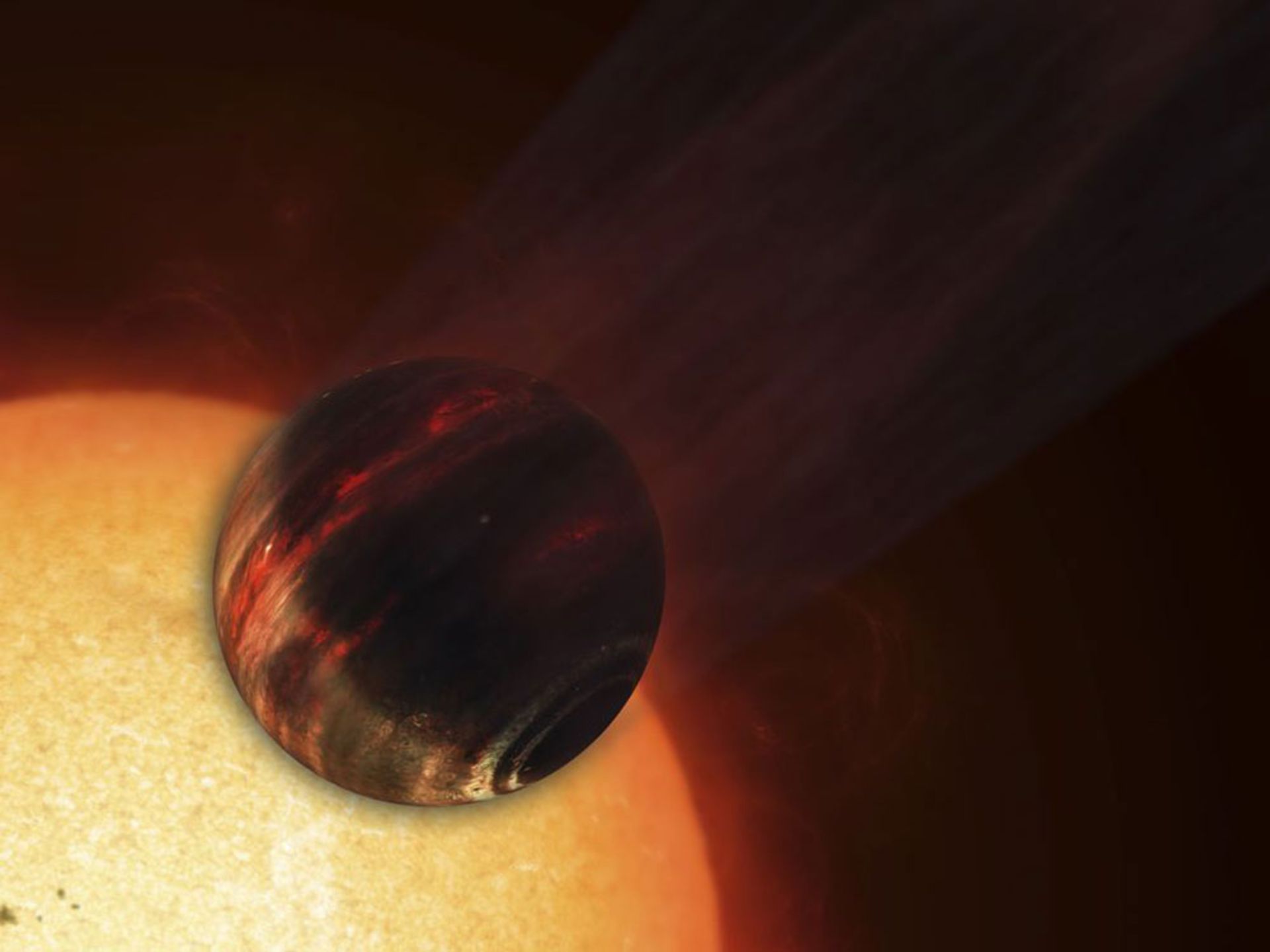

According to NASA, detailed observations across a wide range of mid-infrared light, combined with 3D climate models and previous observations from other telescopes, suggest thick, high clouds covering the night side, clear skies on the day side, and equatorial winds of about 5,000 miles per hour mixing atmospheric gases around the exoplanet WASP-43 b.

This is the latest demonstration of exoplanet science, now made possible by James Webb’s extraordinary ability to probe temperature changes and detect atmospheric gases trillions of miles away.

The exoplanet WASP-43 b is a type of “hot Jupiter”. This Jupiter-sized planet is made mostly of hydrogen and helium and is much hotter than any of the giant planets in our solar system. Although its star is smaller and cooler than the Sun, WASP-43 b orbits at a distance of just 1.3 million miles, less than one twenty-fifth of the distance between Mercury and the Sun.

With such a tight orbit, the planet is tidally locked, meaning that one side is constantly lit and the other is in permanent darkness. Although the night side never receives any direct radiation from the star, strong eastward winds carry heat around from the day side.

Since the discovery of the planet WASP-43 b in 2011, it has been observed by several telescopes, including the Hubble Space Telescope and the Spitzer Space Telescope. “With the Hubble Space Telescope, we can clearly see that there is water vapor on the day side of the planet,” said Bay Area Environmental Research Institute (BAERI) researcher Taylor Bell. “Both Hubble and Spitzer showed that clouds may exist on the night side, but we needed more detailed surveys with the James Webb Space Telescope to begin mapping temperatures, cloud cover, winds, and atmospheric composition more precisely across the planet.”

Although WASP-43 b is too small, faint, and too close to its star to be seen directly by a telescope, the planet’s short orbital period of just 19.5 hours makes it ideal for “phase curve spectroscopy.” The phase curve spectroscopic method involves examining small changes in the brightness of a star-planet system as the planet orbits the star.

Because the amount of mid-infrared light emitted by a body depends largely on how hot it is, James Webb’s brightness data can be used to calculate a planet’s temperature.
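The step from brightness to temperature can be sketched with Planck's law for an ideal blackbody. This is a simplified illustration, not the research team's actual method: it assumes the planet radiates as a perfect blackbody, and the 8 micron wavelength is an illustrative mid-infrared value that does not come from the article.

```python
import math

H = 6.62607015e-34  # Planck constant, J s
C = 2.99792458e8    # speed of light, m/s
K_B = 1.380649e-23  # Boltzmann constant, J/K


def planck_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Blackbody spectral radiance at one wavelength (W per m^2 per sr per m)."""
    prefactor = 2.0 * H * C**2 / wavelength_m**5
    exponent = H * C / (wavelength_m * K_B * temperature_k)
    return prefactor / math.expm1(exponent)


def brightness_temperature(wavelength_m: float, radiance: float) -> float:
    """Invert Planck's law: the temperature a blackbody would need
    in order to emit the given radiance at this wavelength."""
    prefactor = 2.0 * H * C**2 / wavelength_m**5
    return H * C / (wavelength_m * K_B * math.log1p(prefactor / radiance))


wavelength = 8e-6             # illustrative wavelength, in meters
day_side_k = 1250.0 + 273.15  # temperatures quoted in the article, in kelvin
night_side_k = 600.0 + 273.15

day = planck_radiance(wavelength, day_side_k)
night = planck_radiance(wavelength, night_side_k)
print(f"Day side is about {day / night:.1f} times brighter at this wavelength")
print(f"Recovered day-side temperature: {brightness_temperature(wavelength, day):.0f} K")
```

Because a hotter body is brighter at a given infrared wavelength, a measured brightness can be run through the inverse function to recover a temperature, which is the basic idea behind turning a phase curve into a temperature map.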

For more than 24 hours, the research team used James Webb’s Mid-Infrared Instrument (MIRI) to measure the light of the WASP-43 system every 10 seconds. “By observing an entire orbit, we were able to calculate the temperature of different sides of the planet as it rotated into view,” Bell explained. “Based on these calculations, we were able to create a map of the temperature of the entire planet.”

Measurements show that the day side of the planet has an average temperature of nearly 1,250 degrees Celsius, while the night side is significantly cooler at about 600 degrees Celsius. These data also help locate the hottest spot on the planet, which is shifted slightly eastward from the point that receives the most stellar radiation. This shift is caused by winds that move the heated air eastward.

Michael Roman, a researcher at the University of Leicester and a member of the project team, said: “The fact that we can map the temperature in this way is a real testament to James Webb’s sensitivity and stability.”

To interpret the map, the researchers used complex 3D atmospheric models, similar to those used to understand weather and climate on Earth. The analyses show that the night side of the planet is probably covered in a thick, high layer of clouds, which prevents part of the infrared light from escaping to space. As a result, although the night side is very warm, it appears dimmer and cooler than it would if there were no clouds.

The broad spectrum of mid-infrared light captured by James Webb makes it possible to measure the amount of water vapor and methane around the planet. Joanna Barstow, a researcher at the Open University in the UK and a member of the project team, said: “James Webb has given us the opportunity to find out exactly which molecules we see and put limits on their abundance.”

The observed light spectra show clear signatures of water vapor on the planet’s nightside and dayside, providing additional information about the density of clouds and their height in the atmosphere.

The researchers were also surprised to find that the data showed a lack of methane everywhere in the atmosphere. Although the day side is too hot for methane to exist, methane should be stable and detectable on the cooler night side.

“The fact that we don’t see methane tells us that the wind speed on WASP-43 b must be about 5,000 miles per hour,” Barstow explained. If the winds move the gas from the day side to the night side of the planet and back again quickly, there won’t be enough time for the chemical reactions to produce detectable amounts of methane on the night side.
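A rough sense of how quickly such winds carry gas between the two sides can be sketched with simple arithmetic. This is only an illustration: it assumes the planet has the same equatorial radius as Jupiter (about 71,492 km), since the article describes it only as Jupiter-sized, and it treats the wind as blowing steadily along the equator. The time needed for methane to form is not given in the article, so no comparison with it is attempted here.

```python
import math

MILES_TO_KM = 1.609344


def half_circuit_hours(planet_radius_km: float, wind_speed_mph: float) -> float:
    """Hours for gas to travel half the equator (day side to night side)
    at a constant wind speed."""
    distance_km = math.pi * planet_radius_km
    speed_km_per_hour = wind_speed_mph * MILES_TO_KM
    return distance_km / speed_km_per_hour


# Assumed radius: Jupiter's equatorial radius, used as a stand-in.
hours = half_circuit_hours(planet_radius_km=71_492.0, wind_speed_mph=5_000.0)
print(f"Day side to night side in about {hours:.0f} hours")
```

Under these assumptions the trip takes a little over a day, so gas on the night side is swept back into the heat of the day side again and again on short notice.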

Researchers believe that because of this wind-driven mixing, the chemistry of the atmosphere is the same all around the planet. This result was not clear from previous research conducted with the Hubble and Spitzer telescopes.

This research was published in the journal Nature Astronomy.

The highest observatory in the world officially started its work

Published 2 days ago on 01/05/2024

The University of Tokyo Atacama Observatory, which holds the title of the highest observatory in the world, is now ready to begin work.

A new telescope, which is introduced as the highest observatory in the world, has been officially opened.

The University of Tokyo Atacama Observatory (TAO), which was first conceived 26 years ago to study the evolution of galaxies and exoplanets, is located on top of a high mountain in the Chilean Andes at an altitude of 5,640 meters above sea level. Its altitude even exceeds that of the Atacama Large Millimeter Array (ALMA), which is located at 5,050 meters.

The TAO observatory is located in a region where the high altitude, thin atmosphere, and perpetually dry weather are dangerous for humans, but it is an excellent site for infrared telescopes like TAO, because their observational accuracy relies on low humidity, which keeps Earth’s atmosphere transparent at infrared wavelengths.

Yuzuru Yoshii, a professor at the University of Tokyo (UTokyo), said: “Building a telescope on the top of the mountain was an incredible challenge, not only from a technical point of view but also from a political point of view. I communicated with the indigenous people to ensure their rights and views were taken into account, with the Chilean government to obtain permits, with local universities for technical cooperation, and even with the Chilean Ministry of Health to ensure that people could work safely at that altitude.”

He added: “Thanks to everyone involved, the research I have always dreamed of doing will soon become a reality, and I could not be happier.”

The 6.5-meter TAO telescope has two science instruments designed to observe the universe in infrared light. One of them, called SWIMS, will image galaxies in the early universe to understand how they formed from the merging of dust and pristine gas. Despite decades of research, the details of this process remain unclear. The second instrument, MIMIZUKU, will contribute to the mission’s overall goal by studying the primordial dust disks in which stars and galaxies formed.

Riko Senoo, a student at the University of Tokyo and a researcher on the TAO project, said: “The better the astronomical observations of the real object, the more accurately we can reproduce what we see with our experiments on Earth.”

Masahiro Konishi, a researcher at the University of Tokyo, said: “I hope that the next generation of astronomers will use TAO and other ground-based and space-based telescopes to make unexpected discoveries that challenge our current understanding and explain the unexplained.”

Before the newly opened telescope was built, Yoshii and his colleagues also assembled a 1-meter telescope on top of the mountain in 2009. This small telescope, called “miniTAO”, imaged the center of the Milky Way galaxy. Two years later, miniTAO received the Guinness World Record for the highest astronomical observatory on Earth.

Although the observatory was first proposed 26 years ago, work at its construction site began only in 2006. At that time, the first road to the summit was paved, and shortly afterward a weather monitoring system was installed there.

What is an exoplanet? Everything you need to know

For years, extrasolar planets have occupied the minds of scientists and dreamers. Ever since people first looked up at the stars in the night sky, they have wondered about the worlds that revolve around them. Are exoplanets rocky bodies similar to Earth? Can liquid water flow on their surface? Does the existence of the essential elements for life on other worlds mean that we are not alone in this infinite universe?

For thousands of years, humans have been trying to answer this question: Are we alone? Until the 1990s, astronomers had no evidence of exoplanets, but finally, in 1992, the first exoplanet was discovered. Since then, more than 5,000 exoplanets of different types and categories have been discovered, amazing scientists more than ever.

- What is an exoplanet?
- Types of extrasolar planets
- Stone worlds
- Gas giants
- Introduction of extrasolar planets
- TOI-1452b, a blue world candidate
- WASP-39b, the first planet with a carbon dioxide atmosphere
- WASP 103b, the rugby ball planet
- 51 Pegasi b; The first planet around a Sun-like star
- PSR B1620-26b; The oldest known planet
- Gliese 876d; rocky planet
- Kepler-11f; gas dwarf
- Kepler-452b; Earth-like planet
- Search for life in extrasolar planets
- Interesting facts about exoplanets
- Detecting the color of an exoplanet for the first time in 2013
- There are 10 billion Earth-like planets in the Milky Way
- NASA’s Kepler space telescope has discovered the most exoplanets
- The possibility of exoplanets around stars with high metallicity
- Using the gravitational microlensing method to observe exoplanets
- Most exoplanets were discovered through radial velocity
- The transit method is the easiest way to find exoplanets
- Exoplanets can orbit more than one star
- Exoplanets can have harsh climates
- Some exoplanets have strange orbits
- Exoplanets can have unique atmospheres
- Conclusion

What is an exoplanet?

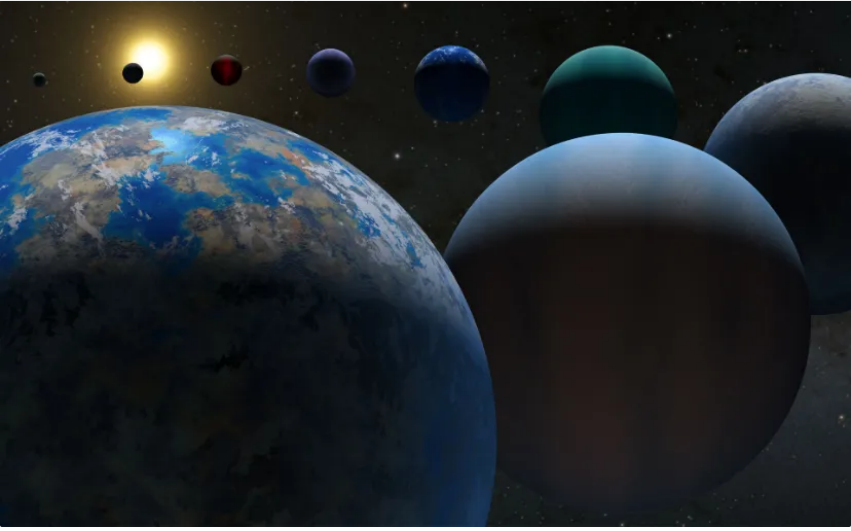

Illustration of the exoplanet K2-18b, which is a super-Earth.

An exoplanet is a world outside the solar system in a different star system. Over the past two decades, thousands of exoplanets have been discovered, and most of these discoveries belong to NASA’s Kepler Space Telescope.

Exoplanets come in different sizes and orbits. Some are giant worlds close to their host star, and others are icy or rocky worlds. NASA and other space agencies are always on the lookout for a specific type of exoplanet: an Earth-like planet in the habitable zone of a Sun-like star.

The habitable zone is the region around a star where a planet’s temperature allows liquid water to flow on its surface. The first definition of the habitable zone was based on the concept of heat balance; however, current calculations also include other criteria, such as the greenhouse effect of the planet’s atmosphere. For this reason, the boundaries of the habitable zone have become somewhat fuzzy.
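The heat-balance idea can be sketched with the standard equilibrium temperature formula, which balances the starlight a planet absorbs against the heat it radiates away. This is a simplified illustration with no atmosphere and no greenhouse effect; the function name is made up for this example, and the Sun and Earth values are standard reference figures rather than numbers from the article.

```python
import math


def equilibrium_temperature_k(star_temp_k: float,
                              star_radius_m: float,
                              orbit_radius_m: float,
                              albedo: float = 0.0) -> float:
    """Temperature at which absorbed starlight equals emitted heat,
    for a planet that spreads heat evenly and has no greenhouse effect."""
    geometric = math.sqrt(star_radius_m / (2.0 * orbit_radius_m))
    return star_temp_k * geometric * (1.0 - albedo) ** 0.25


# Sun and Earth reference values (assumed for illustration).
earth_k = equilibrium_temperature_k(
    star_temp_k=5772.0,
    star_radius_m=6.957e8,
    orbit_radius_m=1.496e11,
    albedo=0.3,
)
print(f"Earth without a greenhouse effect: {earth_k:.0f} K ({earth_k - 273.15:.0f} °C)")
```

For Earth this simple balance gives a value well below the freezing point of water, which illustrates why newer habitable-zone estimates also take the greenhouse effect into account.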

The Kepler Space Telescope, an observatory that started its operation in 2009 and continued to operate until 2018, has the honor of discovering the largest number of exoplanets. The telescope has definitively discovered 2,342 exoplanets and provided indications of the existence of another 2,245 planets.

Types of extrasolar planets

Our solar system is home to eight planets, divided into two groups: rocky planets and gas giants. The four inner planets, namely Mercury, Venus, Earth, and Mars, are rocky, while the four outer planets, namely Jupiter, Saturn, Uranus, and Neptune, are classified as gas giants. Most of the planets discovered orbiting other stars are either rocky planets or gas giants. However, both groups can be divided further into subgroups.

Stone worlds

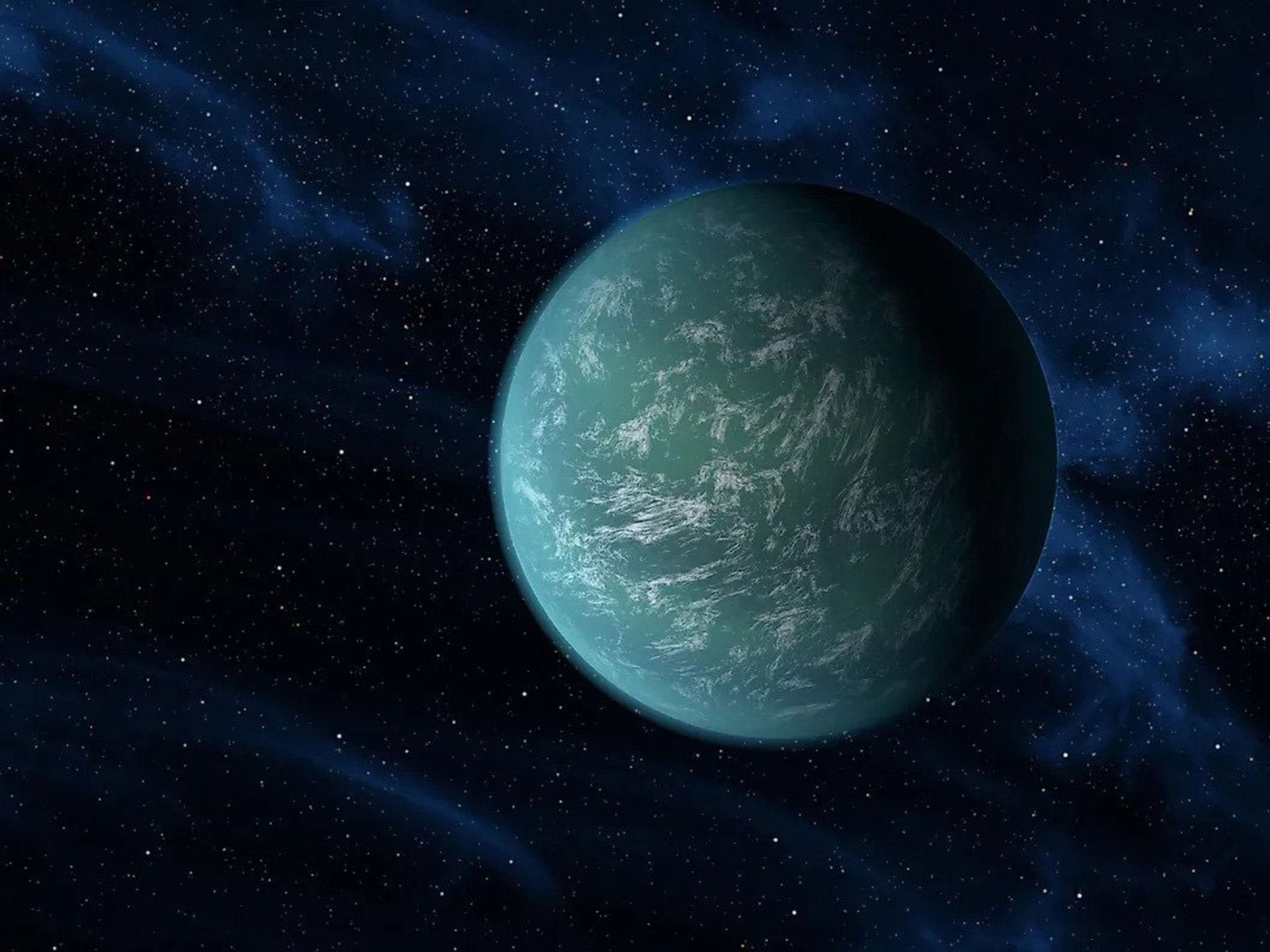

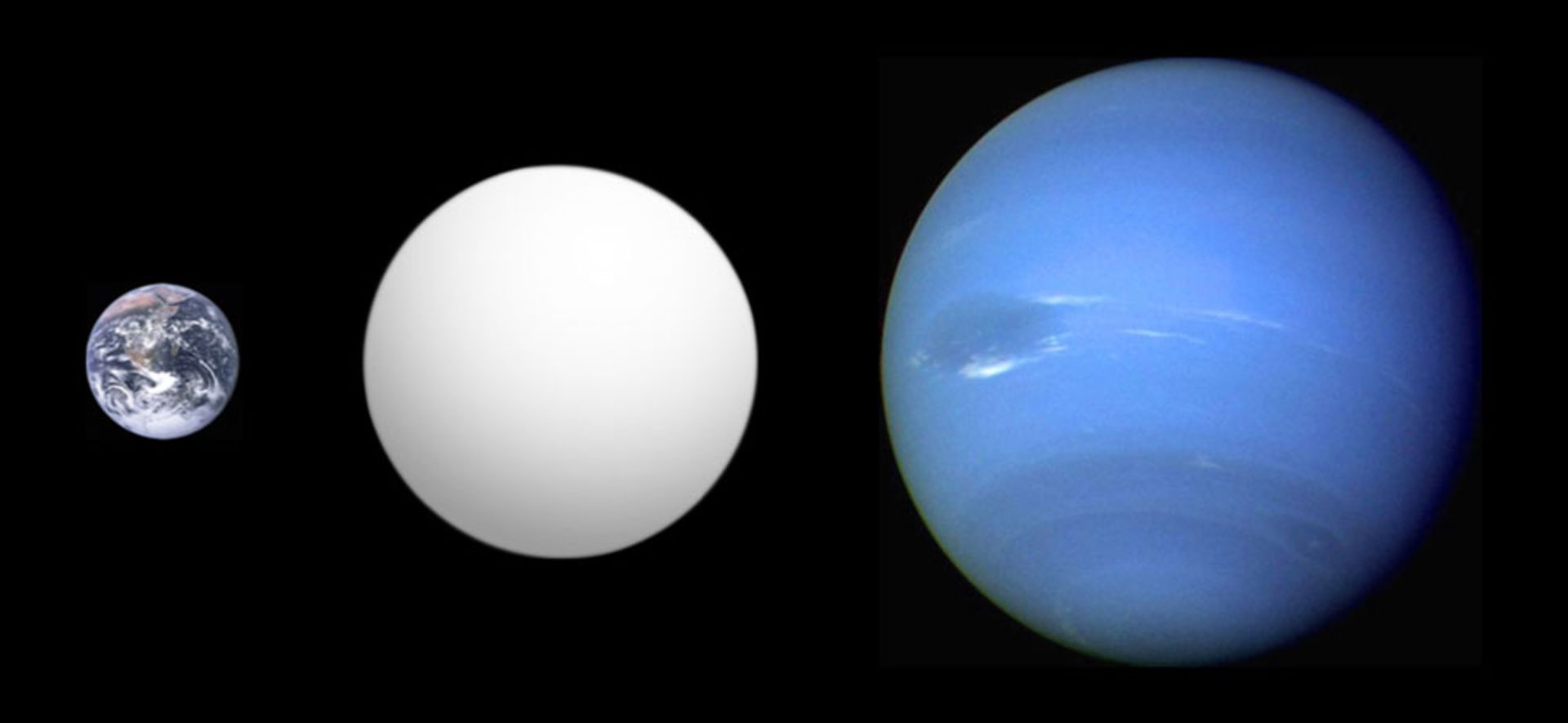

An artist’s rendering of Kepler 22b, a distant, possibly super-Earth planet

The rocky planets themselves are divided into two categories: small rocky planets and so-called super-Earth planets. Small rocky planets are the type of planets that are also found in the solar system. Although the rocky planets in the solar system differ from each other, they all fall into one category.

However, super-Earths do not exist in the solar system, yet they are among the most common planets in the Milky Way. These planets, as their name suggests, are rocky planets larger than Earth. According to a more precise definition, super-Earths are at least twice the size of Earth.

The mass of super-Earths can reach up to ten times the mass of Earth. Scientists still do not know at what point planets lose their rocky surface and become gas planets. However, in the range of 3 to 10 times the mass of Earth, there may be super-Earths with different compositions, such as blue worlds, snowball worlds, or even Neptune-like planets made of very dense gas. As a result, heavy super-Earths that have become gas planets can be classified as sub-Neptunes or mini-Neptunes.

Gas giants

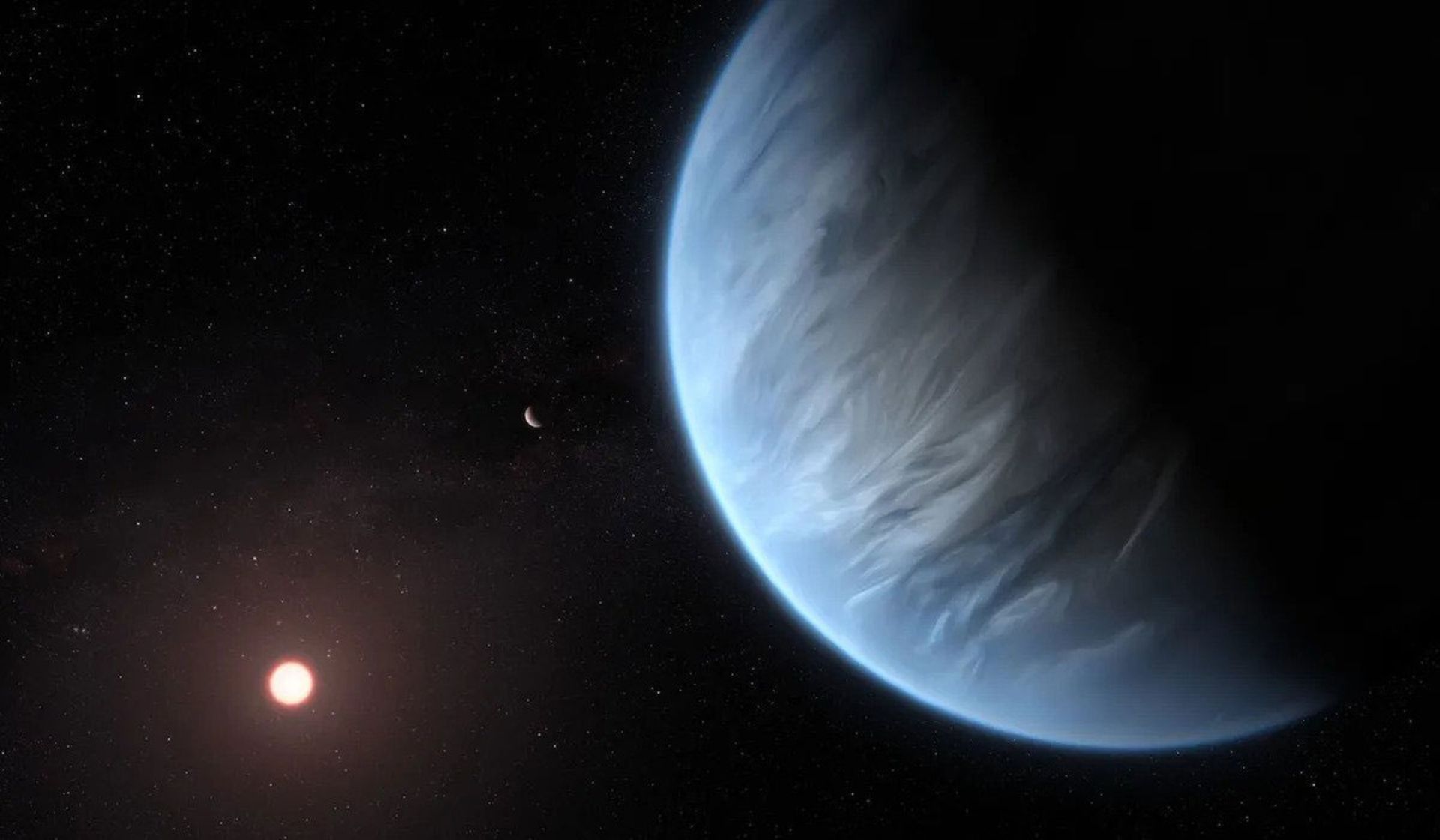

Artist’s rendering of a hot Jupiter orbiting its star

Gas giants are divided into three categories: ordinary gas giants, ice giants, and hot Jupiters. Ordinary gas giants are worlds like Jupiter and Saturn. These massive worlds usually orbit far from their star, have dense atmospheres composed mostly of hydrogen and helium, and do not have a solid surface.

On the other hand, planets like Uranus and Neptune are called ice giants. Although the predominant composition of all gas giants is hydrogen and helium, ice giants are a type of gas giant with ice concentrations in their atmosphere. For example, both Uranus and Neptune have large amounts of chemicals such as methane, ammonia, and water. Ice giants are usually located in the outer reaches of their star system, where ice is found in high concentrations.

Gas giants and ice giants can be seen in the solar system. However, the third type, the hot Jupiter, does not exist in our solar system. A hot Jupiter is a gas giant in a very close orbit around its parent star. This orbit can be even closer to the star than Mercury’s orbit is to the Sun. As a result, these planets usually have hellish temperatures in their atmospheres, hence the nickname hot Jupiters.

Introduction of extrasolar planets

Although exoplanets are generally classified as rocky planets such as super-Earths, or as gas giants, ice giants, and hot Jupiters, some planets defy the existing classifications, and the number of such planets is growing day by day. In this section, we introduce some of the most interesting exoplanets that have been discovered so far.

TOI-1452b, a blue world candidate

Illustration of TOI-1452b, a super-Earth planet.

The planet TOI-1452b orbits a red dwarf star about 100 light-years from Earth. Researchers discovered this planet by observing how it periodically blocks part of its star’s light.

Based on the available data, TOI-1452b is almost 70% larger than Earth, which places it in the group of super-Earth planets. The planet orbits its star once every 11 days. Its density suggests that it may have a liquid ocean on its surface, along with rocky and metallic components like those of Earth. Surprisingly, water could make up as much as 30% of TOI-1452b’s mass, whereas water accounts for less than 1% of Earth’s mass.

WASP-39b, the first planet with a carbon dioxide atmosphere

An artist’s rendering of the exoplanet WASP-39b

The James Webb Telescope’s Near-Infrared Spectrograph (NIRSpec), closely observing the exoplanet WASP-39b, found clear evidence of carbon dioxide in its atmosphere. This is the first time this gas, so familiar on Earth, has been detected on a planet outside the solar system. The 3 to 5.5 micron range of the transmission spectrum, which lies in the infrared, is useful for detecting not only carbon dioxide but also water and methane, all of which are considered possible indicators of life.

WASP-39b, with a temperature of 870°C, is a hot Jupiter about 700 light-years from Earth. Its mass is about a quarter of Jupiter’s, but its diameter is 1.3 times larger than Jupiter’s. The planet orbits a Sun-like star so quickly that it completes an orbit in just four days.
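The mass and diameter figures above imply a very low density, which can be checked with a short calculation. This is a rough sketch that treats the planet as a uniform sphere; the function name is made up for this example, and the value used for Jupiter's average density (about 1.33 g/cm³) is a standard reference figure, not a number from the article.

```python
def relative_density(mass_ratio: float, diameter_ratio: float) -> float:
    """Average density relative to a reference planet, given mass and
    diameter relative to that planet (volume scales as diameter cubed)."""
    return mass_ratio / diameter_ratio ** 3


JUPITER_DENSITY_G_CM3 = 1.33  # assumed reference value

ratio = relative_density(mass_ratio=0.25, diameter_ratio=1.3)
print(f"Density relative to Jupiter: {ratio:.2f}")
print(f"Approximate density: {ratio * JUPITER_DENSITY_G_CM3:.2f} g/cm^3")
```

Under these assumptions the planet is only about a tenth as dense as Jupiter, which is why planets of this kind are often described as "puffy".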

WASP 103b, the rugby ball planet

A rendering of the planet WASP-103b, which resembles a rugby ball.

WASP-103b, shaped like a rugby ball, is the first non-spherical exoplanet ever discovered. This planet completes an orbit around its star in less than a day, and the strong tidal forces it experiences have stretched it into the shape of a rugby ball.

The European Space Agency’s Cheops telescope revealed the strange shape of this planet, which lies in the constellation Hercules. WASP-103b, twice the size of Jupiter, is very close to its star.

51 Pegasi b; The first planet around a Sun-like star

Illustration of the exoplanet 51 Pegasi b

Although 51 Pegasi b is not the first exoplanet discovered, it can be considered the first one found around a Sun-like star. In addition, this planet bears no resemblance to the planets we know. This huge world completes an orbit of its star in just a few days.

In 2015, the atmosphere of 51 Pegasi b was studied in the visible spectrum. As a result, researchers were able to determine the planet’s true mass and orbital orientation from its light.

PSR B1620-26b; The oldest known planet

PSR B1620-26b is the oldest known exoplanet.

The name PSR B1620-26b may not be as easy to pronounce as those of many other exoplanets. However, this planet, with an approximate age of 12.7 billion years, is the oldest planet ever discovered. It is only slightly younger than the universe itself. This ancient planet orbits both a pulsar and a superdense white dwarf at the same time. These two stars revolve around each other, and the gas giant revolves around their common center of mass.

Gliese 876d; rocky planet

Illustration of the rocky planet Gliese 876d

The planet Gliese 876d is only 15 light-years from Earth, and because of its small size it belongs to the group of rocky planets, although it is somewhat bigger than Earth. By all accounts, Gliese 876d is a hellish world. The planet is very hot, yet since its discovery in 2005 it has been considered important evidence for the existence of rocky worlds outside the solar system.

Kepler-11f; gas dwarf

Some planets, like Kepler-11f, are mini-Neptunes.

There is a problem with the classification of smaller exoplanets. So far, we have observed several planets that are larger than Earth but smaller than Neptune, but we have no such group in the solar system. For this reason, it is difficult to know how large rocky planets like Mars and Earth can grow, or at exactly what size they become gas giants like Uranus and Neptune.

Kepler-11f is a mini-Neptune. Its density suggests that it has a Saturn-like atmosphere and a small rocky core. This planet led to the creation of a new category called gas dwarfs, which has no counterpart in our solar system.

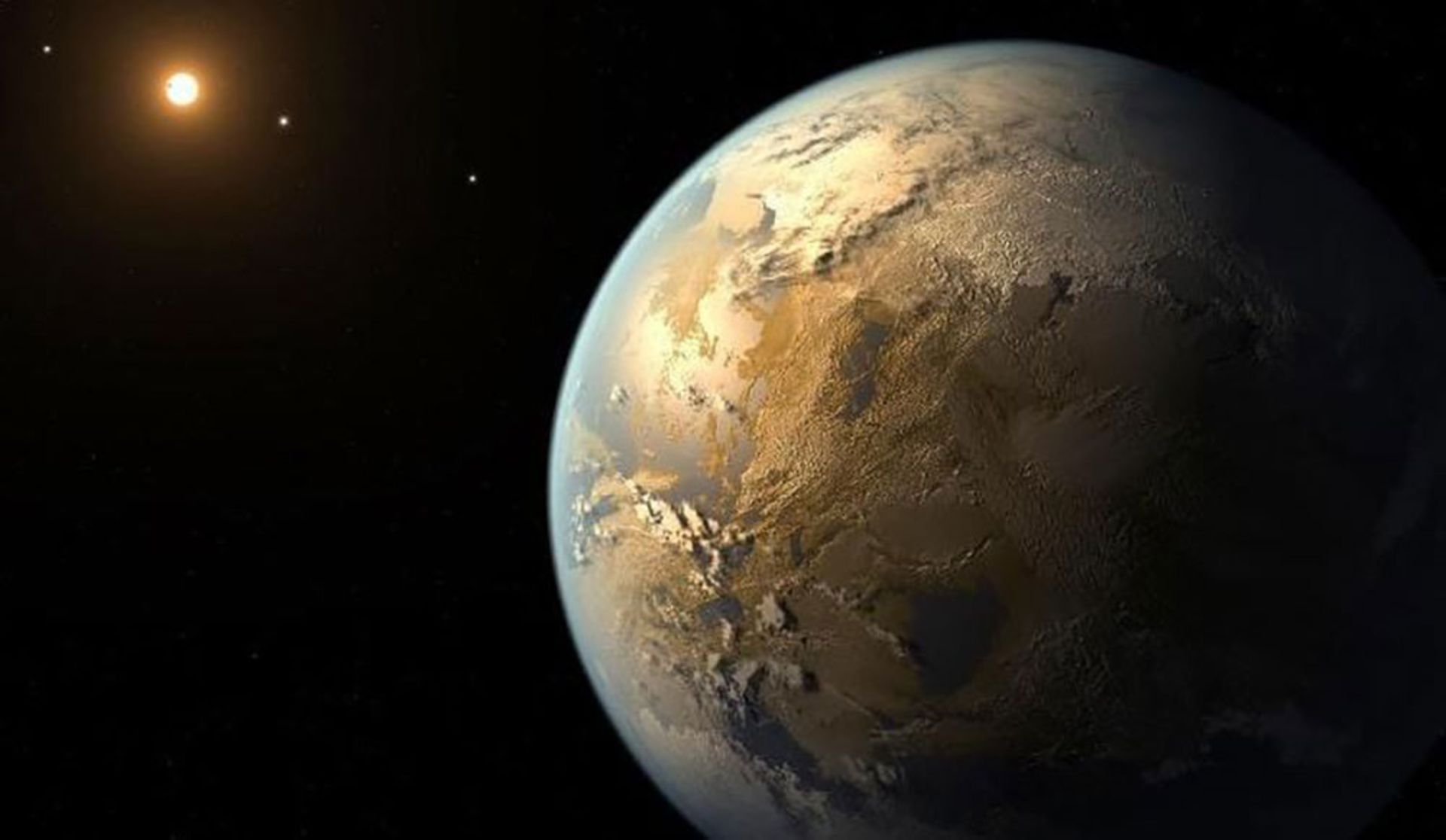

Kepler-452b; Earth-like planet

Kepler-452b can be considered the most Earth-like planet.

Kepler-452b can be considered the most Earth-like planet ever discovered. Its star is about the same size as the Sun, and its year is slightly longer than Earth’s. The planet is somewhat larger than Earth, but it lies firmly in the habitable zone of its star.

However, there are a few problems with Kepler-452b. First, the planet is more than 1,000 light-years from Earth, so we will never reach it. It is also 1.5 billion years older than Earth, so its host star may have grown bright enough to make the planet uninhabitable. So perhaps it was Earth’s twin long ago.

Search for life in extrasolar planets

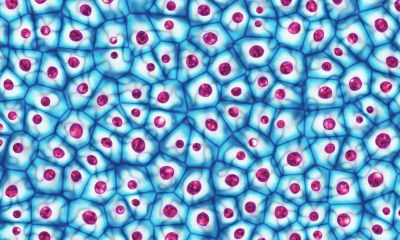

One of humanity’s biggest questions is whether there is life beyond Earth. The James Webb Space Telescope, launched in 2021, can search for evidence of the essential ingredients of extraterrestrial life: the mixture of gases in the atmospheres of Earth-like exoplanets. The telescope is able to look for Earth-like atmospheric signatures, such as oxygen, carbon dioxide, and methane, which are strong indicators of possible life.

Probably, future telescopes will be able to detect the signs of photosynthesis, which is the conversion of sunlight into chemical energy necessary for plants. Or maybe they can detect gases and molecules from animal life. Also, extraterrestrial intelligent life probably creates atmospheric pollution that can be detected from a distance.

In the habitable zone, liquid water can flow on the surface of a planet.

So far, more than 5,000 exoplanets have been discovered, but their total number may reach into the trillions. One of the best tools scientists have for narrowing their search is the region known as the habitable zone. As mentioned in the previous section, the habitable zone is the range of distances from a star where the temperature is suitable for liquid water to flow on a planet’s surface.

Many other conditions are necessary for life to form on exoplanets. First of all, the size of the planet and a suitable atmosphere are important. The host star must also be stable and must not emit deadly flares. The habitable zone is just one way to narrow down the search. Many Earth-like planets have been discovered so far; however, more advanced tools are needed to make the search more precise.

Interesting facts about exoplanets

In this age of innovation, we are learning more about the universe beyond Earth every day. The search for extrasolar planets is one of humanity’s latest space adventures. This section covers interesting facts about these mysterious objects.

Detecting the color of an exoplanet for the first time in 2013

The study and discovery of extrasolar planets began in the ’90s, but it was not until 2013 that researchers were able to identify the color of an exoplanet for the first time. By measuring how much light the planet reflects, astronomers found that HD 189733b is dark blue. Since then, colors have been estimated for other exoplanets. For example, the planet GJ 504b is purple. According to astronomers, helium planets are mostly white or gray.

There are 10 billion Earth-like planets in the Milky Way

According to estimates, the Milky Way alone contains about ten billion Earth-like planets. Kepler 22b, confirmed in 2011, was the first planet the Kepler mission confirmed in the life belt of a Sun-like star. When news of the discovery spread, people immediately imagined life on such a planet. However, it lies 587 light-years from Earth: even a signal traveling at the speed of light needs 587 years to get there, and any spacecraft built so far would take far longer. Astronomers continue to study this planet.
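To put the 587 light-year distance in perspective, the travel time can be worked out for a given speed. The sketch below assumes a probe cruising at about 17 km/s, roughly the speed of Voyager 1. That speed and the function name are assumptions for illustration, not figures from the article.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition


def travel_time_years(distance_light_years, speed_km_s):
    """Years needed to cover a distance at constant speed.

    Light covers one light-year per year, so a craft moving at a
    fraction f of light speed needs 1/f years per light-year.
    """
    if speed_km_s <= 0:
        raise ValueError("speed must be positive")
    fraction_of_light_speed = speed_km_s / SPEED_OF_LIGHT_KM_S
    return distance_light_years / fraction_of_light_speed


if __name__ == "__main__":
    years = travel_time_years(587, 17.0)  # assumed Voyager-like speed
    print(f"About {years / 1e6:.1f} million years")
```

At that assumed speed the trip would take on the order of ten million years, which is why such planets are studied by telescope rather than visited.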

NASA’s Kepler space telescope has discovered the most exoplanets

NASA’s Kepler Space Telescope, launched in 2009, was dedicated to the search for exoplanets. The mission was originally planned to last only 3.5 years, but the spacecraft continued its observations until 2018. In that time it discovered more than 2,600 confirmed exoplanets.

The possibility of exoplanets around stars with high metallicity

Most of the ordinary matter in the universe is hydrogen and helium. Metallicity is the term astronomers use for the abundance of all other elements in a star. According to data collected by the Kepler telescope, stars richer in these heavier elements are more likely to host exoplanets.

Using the gravitational microlensing method to observe exoplanets

The gravitational microlensing method relies on a second, more distant star in addition to the exoplanet’s host. When the host star passes in front of the background star, its gravity acts like a lens that magnifies the background star’s light. If the lensing star has a planet in orbit, the planet’s mass adds a brief extra change in brightness. Astronomers have used this method to find more than 20 exoplanets.
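The magnification produced by a single lensing star can be sketched with the standard point-lens formula, where u is the apparent separation between the two stars measured in units of the lens’s Einstein radius. The function name and sample values are illustrative assumptions, and the brief extra signal that a planet adds is not modeled here.

```python
import math


def point_lens_magnification(u):
    """Magnification of a background star by a single point-mass lens.

    u is the lens-source separation on the sky, in Einstein radii.
    """
    if u <= 0:
        raise ValueError("u must be positive")
    return (u * u + 2) / (u * math.sqrt(u * u + 4))


if __name__ == "__main__":
    # The closer the alignment (smaller u), the brighter the background star.
    for u in (2.0, 1.0, 0.5, 0.1):
        print(f"u = {u}: magnification = {point_lens_magnification(u):.2f}")
```

As the two stars line up more closely, the magnification climbs steeply, so a microlensing event shows up as a smooth rise and fall in brightness over time.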

Many exoplanets were discovered through radial velocity

One of the main ways to identify exoplanets is to observe the motion of their host star. This method, also called Doppler wobble, was for many years the most successful way of discovering exoplanets, and more than 400 planets have been found with it. The star’s radial velocity, its speed toward or away from us, changes because of the gravitational pull of the planet orbiting it, so the star appears to wobble.
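The size of this stellar motion can be estimated with the standard formula for a star’s radial-velocity semi-amplitude. The sketch below is illustrative only: the function name is hypothetical, the constants are rounded, and the orbit is assumed to be seen edge-on unless another inclination is given.

```python
import math

G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30         # kg (rounded)
M_JUPITER = 1.898e27     # kg (rounded)
YEAR_S = 365.25 * 86400  # seconds in a Julian year


def rv_semi_amplitude(period_s, planet_mass_kg, star_mass_kg,
                      eccentricity=0.0, inclination_deg=90.0):
    """Peak line-of-sight speed (m/s) of a star tugged by one planet."""
    if not 0 <= eccentricity < 1:
        raise ValueError("eccentricity must be in [0, 1)")
    sin_i = math.sin(math.radians(inclination_deg))
    total_mass = star_mass_kg + planet_mass_kg
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * planet_mass_kg * sin_i
            / total_mass ** (2 / 3)
            / math.sqrt(1 - eccentricity ** 2))


if __name__ == "__main__":
    k = rv_semi_amplitude(11.86 * YEAR_S, M_JUPITER, M_SUN)
    print(f"A Jupiter-like planet moves a Sun-like star by about {k:.1f} m/s")
```

With these rounded values, a Jupiter-like planet shifts a Sun-like star by roughly 12 to 13 m/s, while lighter or more distant planets produce a much smaller signal and are harder to detect this way.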

The transit method is the easiest way to find exoplanets

In the transit method, a planet passes in front of its star and, from our point of view, the star dims slightly. It is one of the most common methods for discovering exoplanets. From how often and how deeply the star dims, astronomers can estimate a planet’s orbital period and size.
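How much a star dims during a transit depends on how much of its disk the planet covers. The sketch below is a simplified estimate that ignores effects such as the star’s darker edges. The function name is hypothetical and the radii are rounded values assumed for illustration.

```python
R_SUN_KM = 695_700      # nominal solar radius
R_JUPITER_KM = 69_911   # mean radius (rounded)
R_EARTH_KM = 6_371      # mean radius (rounded)


def transit_depth(planet_radius_km, star_radius_km):
    """Fraction of starlight blocked when the planet crosses the star's disk."""
    if star_radius_km <= 0 or planet_radius_km < 0:
        raise ValueError("radii must be positive")
    # The planet hides a disk whose area is (Rp / Rs)^2 of the star's disk.
    return (planet_radius_km / star_radius_km) ** 2


if __name__ == "__main__":
    for name, radius in [("Jupiter-size", R_JUPITER_KM), ("Earth-size", R_EARTH_KM)]:
        depth = transit_depth(radius, R_SUN_KM)
        print(f"{name} planet: star dims by {depth * 100:.4f}%")
```

In this estimate a Jupiter-size planet dims a Sun-like star by about 1 percent, while an Earth-size planet dims it by less than 0.01 percent, which is why finding small planets requires very precise instruments.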

Exoplanets can orbit more than one star

Unlike in the Solar System, where the planets orbit a single star, some planetary systems have more than one star. These double or triple systems create unique environments with multiple sources of light and heat.

Exoplanets can have harsh climates

Some exoplanets show strange weather. Hot Jupiters, for example, can have scorching temperatures and violent storms.

Some exoplanets have strange orbits

Not all exoplanets follow nearly circular orbits. Some have highly eccentric, elongated orbits that carry them close to their star and then far away from it.

Exoplanets can have unique atmospheres

By analyzing the starlight that passes through an exoplanet’s atmosphere, scientists can learn what that atmosphere is made of. The atmospheres of some extrasolar planets contain substances such as iron vapor, carbon dioxide, and even methane.

Conclusion

An exoplanet is a planet outside the solar system, and exoplanets are classified into different groups and types. The first group consists of rocky planets similar to Earth or larger than Earth; the larger ones are also called super-Earths. Super-Earths can eventually become gas planets known as mini-Neptunes. The next group is the gas planets, which are divided into gas giants, ice giants, and hot Jupiters.

So far, more than 5,000 exoplanets have been discovered and confirmed, and this number is increasing day by day. According to estimates, the Milky Way alone contains about 10 billion Earth-like planets. Earth-like planets of interest are usually located in the life belts of their stars. In the life belt, a planet’s temperature allows liquid water to flow on its surface, which increases the potential for life. Researchers hope to gather more data on exoplanets by building more advanced telescopes, because understanding exoplanets will help us better understand our own planet and the world around us.
