AI

San Francisco could use killer remote-controlled robots

Published 2 years ago

The San Francisco Police Department can now use remote-controlled robots to kill suspects in acute situations that require immediate action.

San Francisco police have been given permission to use remote-controlled robots to kill suspects, The Verge reports. The San Francisco Board of Supervisors has approved a controversial policy allowing police robots to be used as lethal force when members of the public or officers face imminent danger, provided that using a robot is preferable to any other force option available.

The San Francisco Police Department says it has no pre-armed robots in its fleet and has no plans to equip its robots with firearms. However, a police official said the robots could be fitted with explosives to contact, incapacitate, or disorient violent, armed, or dangerous suspects. According to the department, the robots will be used this way only in extreme circumstances, to save innocent lives or prevent further casualties.

The San Francisco Police Department currently has 17 robots, 12 of which are operational. They broadly fall into two groups: large and medium tracked robots (such as the Remotec F6A and QinetiQ Talon) and small robots (such as the iRobot FirstLook and the Recon Robotics Throwbot). All of them are remotely controlled.

The larger robots are used to inspect or detonate explosives, while the smaller ones are sent into target areas to carry out surveillance and identify suspects. All of the department’s robots are largely controlled by human operators and have only limited autonomous capabilities.

US police have used a robot to kill a suspect before. The first known case occurred in 2016, when the Dallas Police Department used a bomb-disposal robot to kill a sniper who had shot and killed five officers. Some people praised the Dallas police for ending a tragic incident, while others criticized them for using a robot instead of pursuing alternatives.

San Francisco supervisors reportedly debated the policy for two hours before passing it by a vote of eight to three. One supporter of the policy argued that its opponents risked being seen by the public as anti-police. Meanwhile, the president of the Board of Supervisors, who voted against the plan, said he was not anti-police and considered himself “a supporter of people of colour.”

Several police departments in other parts of the US have opposed using robots to kill suspects. The Oakland Police Department initially approved a similar plan but later reversed its decision without explanation. Many civil rights groups have also protested the approval of San Francisco’s new policy.

Via: The Verge

Why hasn’t artificial intelligence replaced Google yet?

Published on 14/04/2024

When chatbots arrived and Microsoft added one to Bing search, users were promised that it was time to say goodbye to long, fruitless searches: artificial intelligence was ready to revolutionize the search industry and even topple Google’s empire by changing the way we find information on the internet. But to be honest, for all their promise and hype, AI chatbots have not yet managed to defeat Google or make its search engine any less popular.

Of course, it hardly seems fair to compare newborn chatbots with Google, which has years of experience and enormous resources behind it. However, because the ubiquity and power of AI have raised our expectations, it is natural to wonder why Copilot, ChatGPT, and Gemini have not yet made a dent in traditional Google searches, especially when companies like Perplexity and You.com tout themselves as the next generation of search, and even Google and Bing believe AI is the future of search. So what does Google have that AI chatbots don’t?

A search engine serves many purposes beyond finding important or hard-to-reach scientific information. In fact, most people use Google simply to get to their email inbox, find websites, or look up an actor’s age. Interestingly, every year a large number of people go to Google and type the word “Google” into the search box!

So our real question is not how well AI search engines can find information, but how well they can handle everything Google can do.

To answer this question, we put some of the best new AI products to the test. Using data from the SEO research company Ahrefs, we compiled a list of the most-searched queries on Google and put them to various AI tools. The results showed that in some cases, chatbots built on large language models can be more useful than Google’s results page; but for the most part, they made clear how difficult it would be for AI (or anything else) to replace Google at the heart of the web. It is like trying to replace a skilled librarian with a robot: the robot may be smart, but it lacks the librarian’s intuition, expertise, and experience.

According to search experts, there are essentially three types of web search. The first and most common is navigational search, in which the user types the name of a website into Google and then clicks the relevant link. Almost all of Google’s top searches, from YouTube to Wordle to Yahoo Mail, fall into this category. In fact, getting the user to a website is a search engine’s main job.

In navigational searches, AI search engines generally perform worse than Google. When you search for a website’s name on Google, the first result is very rarely anything other than what you are looking for. Granted, the results page also contains many links you don’t need, but Google is fast and rarely gets it wrong.

AI bots, by contrast, think for a few seconds after you type “Zoomit” into their box and then return a block of not-very-useful information about the company, when all we wanted from the search was a link to the website. Some chatbots don’t even include the link at the end of all that information.

Extra information is not always useful, especially in searches where we just want to be sent to another website, and generating it takes AI tools extra time.

Google is the perennial winner of targeted searches

The second type of search is the informational query, in which the user wants to know something specific that has a correct answer. For example, “the result of a certain team’s match”, “what time is it”, and “the weather” are very popular informational queries. For these, you just want the answer stated plainly, without extra information.

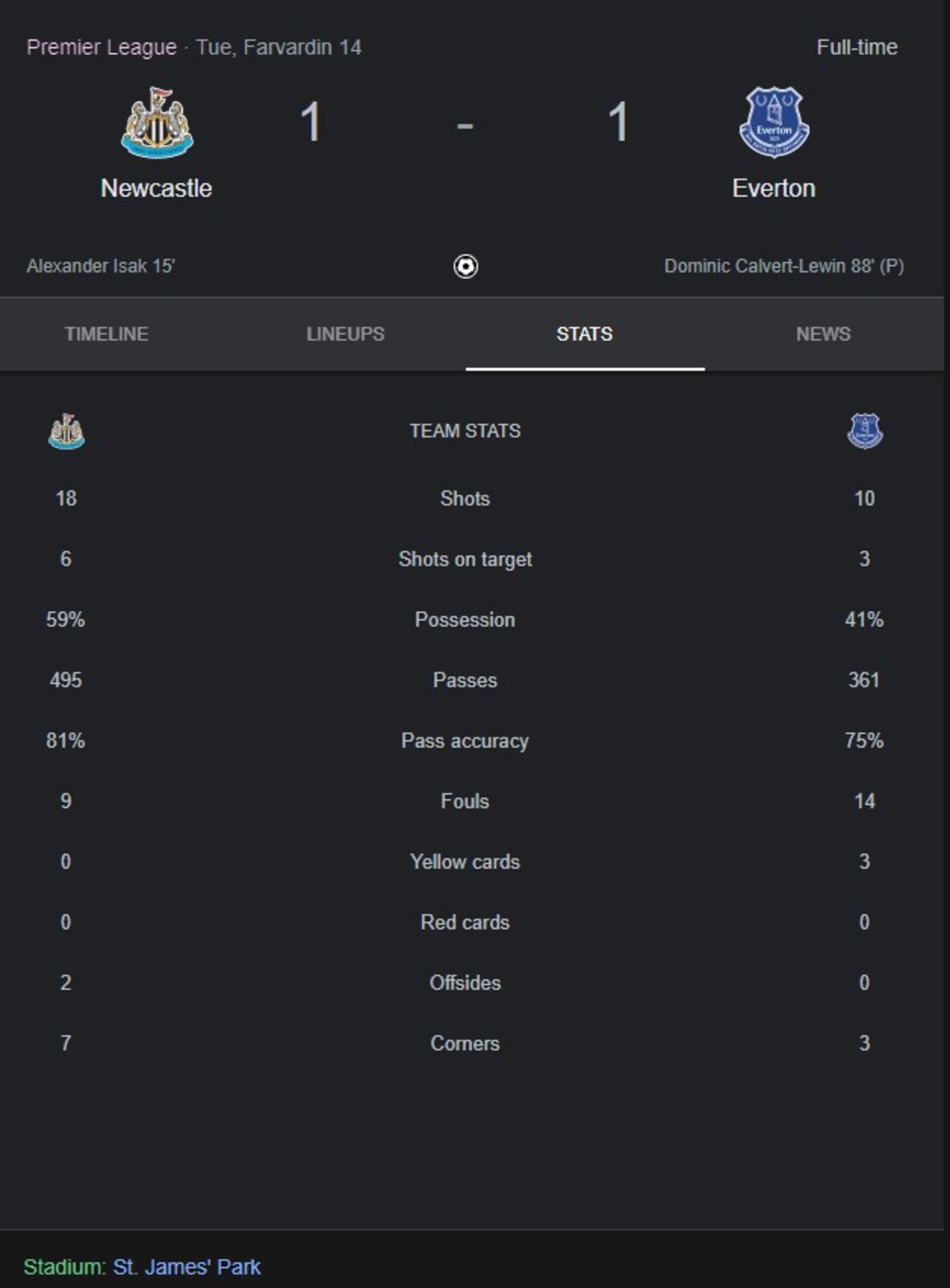

AI cannot be trusted for live, fast-changing information like sports scores. For example, when I asked for the latest result of the Newcastle vs Everton football match on Tuesday, 2 April 2024, both You.com and Perplexity gave me outdated information, while Copilot’s answer was correct. Google went further than any of the AI bots: it not only reported the result correctly but also provided useful statistics and details such as possession percentage, the number of red and yellow cards, offsides, and each team’s formation.

Search “Newcastle and Everton football team result” in Google

Google’s extensive data often allows it to provide relevant information along with useful extra statistics. AI bots, however, may not be able to offer the kind of personalized, useful information Google does, because they lack access to things like your location and personal information. In this type of search, Google’s ability to take a user’s specific context into account sets it apart from AI systems and gives it an advantage.

When it comes to informational questions whose answers don’t change over time, such as “How many weeks are in a year” or “When is Women’s Day”, almost all AI bots get the answer right. In many cases, AI answers make the answer easier to understand by adding useful context, but for now you can’t trust everything they say. Asked how many weeks are in a year, Google said there are 52.1429, while You.com explained that a year is actually 52 weeks and one day, plus an extra day in leap years (that is, 52 weeks and two days). This part of You.com’s answer was more helpful than Google’s.

Perplexity’s answer was weaker than the others: it first said that a common year is exactly 52 weeks and a leap year is 52 weeks and one day, but then contradicted itself in the explanation that followed and gave a more accurate account. Contradictions like these force users to spend more time checking whether the AI’s answer is right. If users have to search again to verify a summary that was supposed to get them the answer faster, Google, being more reliable, will undoubtedly take up less of their time.
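For readers who want to check the figures, the answers above are the same arithmetic expressed two ways: a common year has 365 days, and 365 ÷ 7 ≈ 52.1429 (Google’s figure), which is 52 whole weeks with 1 day left over (You.com’s framing); a leap year’s 366 days leave 2 days over. The short Python sketch below illustrates this using only the standard library’s `calendar.isleap` and the built-in `divmod`; the helper name `weeks_and_days` is our own, hypothetical, and not taken from any of the tools discussed.

```python
import calendar


def weeks_and_days(year: int) -> tuple[int, int]:
    """Hypothetical helper: split a calendar year into whole weeks and leftover days."""
    # calendar.isleap applies the Gregorian rule (divisible by 4,
    # except century years not divisible by 400).
    days_in_year = 366 if calendar.isleap(year) else 365
    # divmod returns (quotient, remainder): whole weeks and spare days.
    return divmod(days_in_year, 7)


if __name__ == "__main__":
    # Decimal form, as Google presented it, for a common (365-day) year.
    print(round(365 / 7, 4))     # 52.1429
    # Whole weeks plus leftover days, as You.com presented it.
    print(weeks_and_days(2023))  # (52, 1), a common year
    print(weeks_and_days(2024))  # (52, 2), a leap year
```

Both views describe the same quantity: the decimal hides the remainder, while the weeks-plus-days form makes the leap-year difference explicit.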

Chatbots are faster at searching for buried information

There is a sub-genre of informational search that could be called buried-information search, because to get the full answer you have to visit websites and dig it out from under piles of ads and SEO keywords.

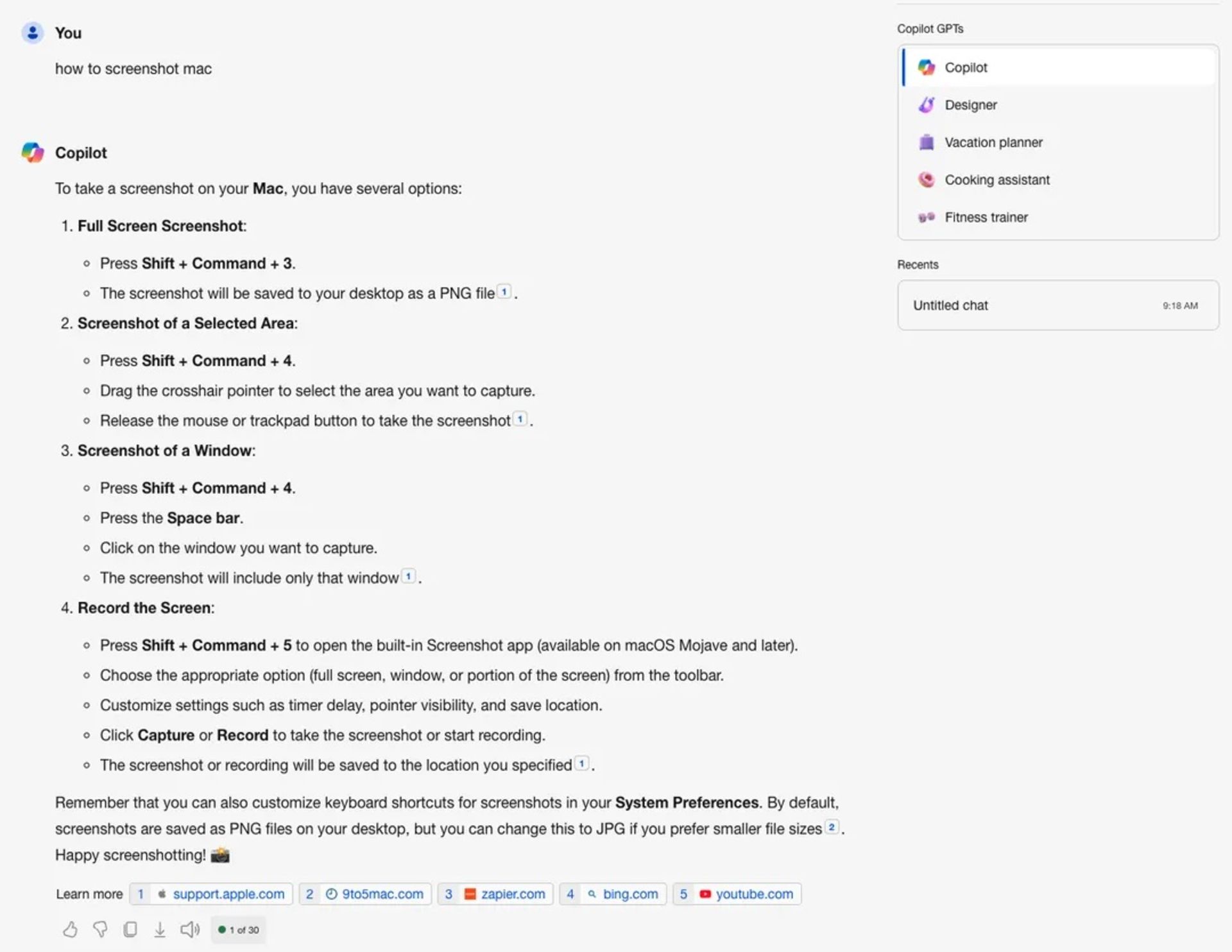

When you search Google for “screenshot on Mac”, millions of pages come up, and you have to open them until one finally tells you to press Cmd + Shift + 3 to capture the entire screen and Cmd + Shift + 4 to capture part of it. Even Google’s Search Generative Experience (SGE), which quickly pulls key points from web pages, doesn’t fully surface the answer, and you still have to open websites to learn the other methods.

Copilot’s answer on how to take screenshots on a Mac

In this sub-genre of informational search, AI tools are the winners, because they extract all the useful information from articles and present it directly in one place (a practice that raises copyright concerns and affects websites’ standing in Google search).

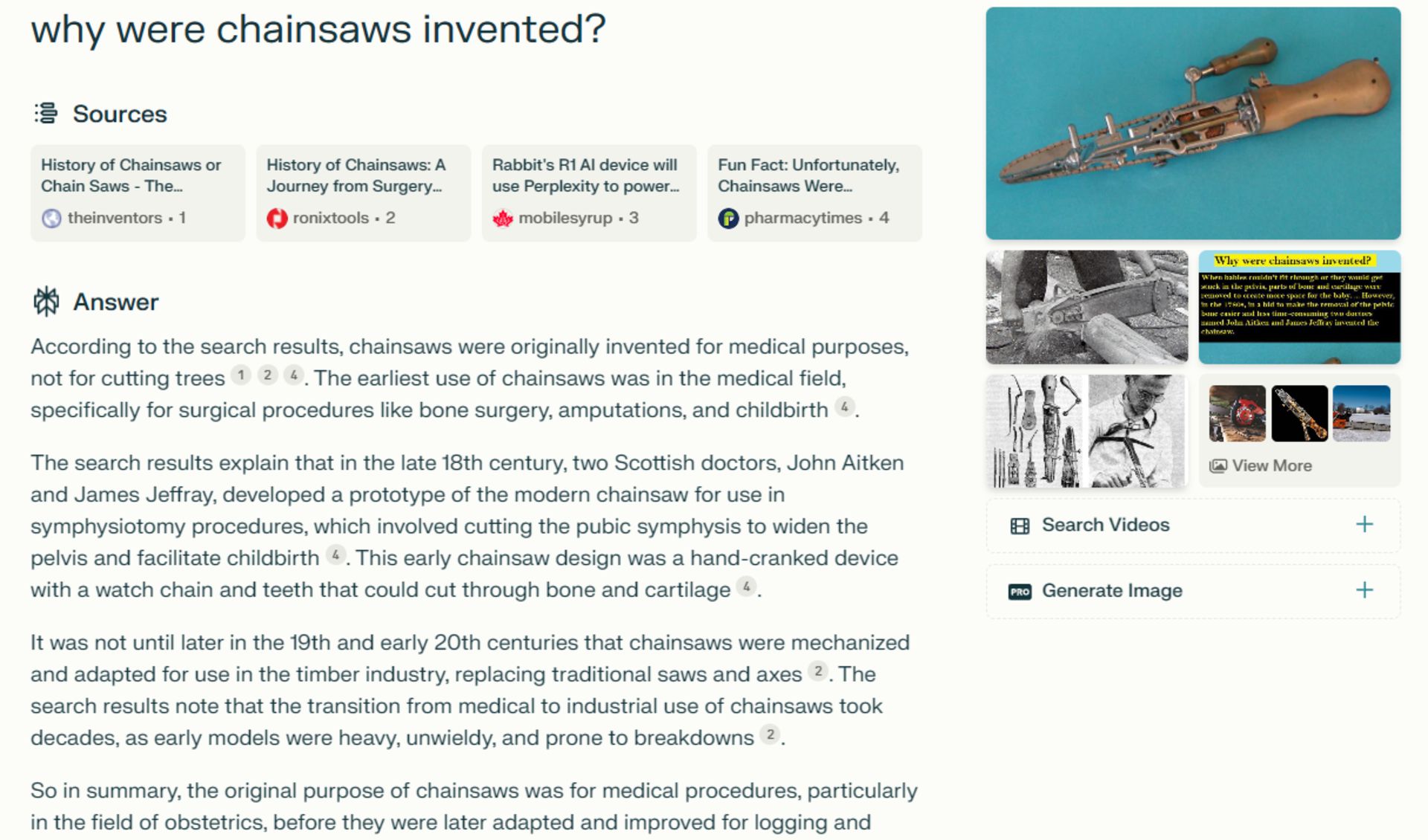

The third type of search is exploratory search, which has no single answer and is instead the beginning of a learning process. For example, “How to tie a tie”, “Why was the chainsaw invented”, and “What is TikTok” are among the most popular exploratory queries. If you’ve ever Googled the history of NASA or the name of a musician you recently heard, you were exploring. Statistically, exploratory search is less common than navigational and informational search, but this is exactly where AI search engines can shine.

Perplexity’s answer to why the chainsaw was invented

In response to “Why was the chainsaw invented”, Copilot wrote a multi-part answer on the medical origins of the chainsaw before describing its technological evolution and eventual adoption by woodcutters, and finally provided useful links for further reading. Perplexity’s response was shorter but included some interesting pictures of vintage chainsaws. Google’s results contained plenty of similar links but none of the AI tools’ synthesis; even Google’s generative search experience listed only basic information.

AI search engines shine in exploratory searches

Citations from AI engines are very useful. Perplexity, You.com, and other bots are getting better at linking inline to their sources, so if something in an answer catches your eye, you can click through directly to the source. The bots don’t always cite enough sources or put links in the right places, but it is still a good and useful development.

The most-searched query on Google is a simple one: “what to watch”. For this query, Google has built a dedicated page with rows of suggestions, including top picks, the most popular, the newest, and dozens of other categories curated for you. For this particular question, Google’s purpose-built design outperforms both chatbots and traditional search results. When the bots were asked the same question, none of the AI search engines performed as well as Google. Copilot listed five popular new movies. Perplexity suggested a random set of films. You.com offered outdated information, recommending “14 Netflix Picks” without naming them.

Copilot’s suggestions for what to watch

For this type of search, AI is a good fit because recommendations are algorithmic, but a chatbot is the wrong user interface. Google clearly knows how popular “what to watch” is, so by studying user behavior it was able to design a more useful answer. Chatbots are valuable for certain tasks, but they may not excel where visual presentation and efficiency matter. Google’s specialized approach shows that for specific requests, the right user interface can significantly improve the user experience.

At least for some web searches, generative AI can be a better tool than the search technology of decades past. But modern search engines are not just pages of links; they are more like miniature operating systems. The purpose of most searches is not to embark on an amazing journey of discovery but to get a link or a correct answer and leave. For now, systems based on large language models are slow competitors to search engines.

Artificial intelligence is an amazing technology and chatbots are powerful tools, but on their own they cannot replace traditional Google search, because a successful search goes beyond answering questions: it involves understanding the user’s intent, considering context, and providing relevant information.

Google’s mastery of these factors is undeniable, and disrupting its position will take fundamental innovation. Almost every technology company, including Google itself, believes that AI can help search engines understand queries and process information better.

The question now is whether Google can reshape its results pages, its business model, and the way it summarizes and presents information for the AI era before AI companies can turn their chatbots into better tools for searching the internet. Whatever the answer, it will take more than a few chatbots to take down Google.

The future of generative artificial intelligence, in its own words

Published on 27/03/2024

We asked the ChatGPT chatbot, which has become very popular these days, to write an article in English about the future of generative artificial intelligence, that is, about its own future.

We asked for a 1,200-word essay examining the technology’s potential positive and negative outcomes. We then asked the bot to cite real-world examples as well, a request that apparently exceeded its capabilities.

We also asked it to write about Sam Altman’s recent activities, a topic it was able to describe, as you’ll read below.

Below, we reproduce what this generative AI wrote, with minimal editing to fit the layout of the report.

The future of generative artificial intelligence: Striking a balance between exciting possibilities and potential pitfalls

Generative artificial intelligence has emerged as a powerful force, reshaping the technology landscape with its ability to create content autonomously.

Generative AI, from large language models like GPT-3 to image generation algorithms, holds great promise for the future. However, that promising future is not without challenges. In this article, aiming to strike a balance between optimism and caution, we examine the potential negative consequences and the most exciting possibilities of generative AI.

In addition, we address Sam Altman’s ouster from OpenAI and his subsequent return, and explain the ethical considerations associated with developing artificial intelligence.

Sam Altman’s leadership change: Lessons for ethical artificial intelligence

In recent months, the artificial intelligence community witnessed a significant leadership upheaval at OpenAI, the company that, under Sam Altman, unveiled the famous generative AI chatbot ChatGPT, which was embraced by millions of people around the world within a short time.

The company’s CEO Sam Altman, known for his influential role in guiding its initiatives, has faced a period of controversy surrounding his firing and subsequent return. This incident highlighted the challenges related to ethical considerations in the development and management of artificial intelligence.

Addressing Ethical Concerns: Sam Altman’s leadership change prompted a reassessment of ethical considerations in AI development and organizational decision-making. The incident raised questions about transparency, accountability, and the need for strong ethical frameworks to guide the development and deployment of AI technologies.

Transparency and Accountability: The leadership transition underscored the importance of transparency in organizational decision-making, especially where artificial intelligence technologies with far-reaching consequences are involved. It highlighted the need for leaders and organizations to be accountable for their actions and to ensure that ethical guidelines are followed.

Community involvement: The controversy surrounding Sam Altman’s leadership change also highlighted the importance of broader community involvement in decisions related to AI development. The call for more inclusive decision-making processes gained momentum, reinforcing the idea that diverse perspectives are crucial in navigating the ethical challenges associated with AI technologies.

Potential negative consequences

Ethical concerns and bias

One of the main concerns about generative AI is its susceptibility to biases in its training data. If the data used to train these models reflects social biases, AI may inadvertently perpetuate and reinforce those biases in the content it produces. Addressing this issue is critical to keep AI systems from reinforcing and spreading harmful stereotypes.

Security threats and deepfakes

The ability of generative AI to produce highly realistic and persuasive content raises serious security concerns. Deepfakes, for example, are images or videos generated by artificial intelligence that manipulate content and superimpose it on real footage.

This technology can be misused for malicious purposes such as creating fake news, impersonating people, or spreading false information. As generative AI becomes more sophisticated, the challenge of distinguishing between genuine and fake content becomes increasingly difficult.

Invasion of privacy

Advances in generative artificial intelligence also raise privacy concerns. The ability to produce realistic images and videos of people who never took part in creating such content compromises personal privacy.

Protecting individuals from the unauthorized use of their likeness in AI-generated content will be a pressing issue in the coming years.

Unemployment and economic disruption

The automation capabilities of generative artificial intelligence may displace workers in certain industries. Jobs that involve routine and repetitive tasks, such as some kinds of content creation, may be at risk. The challenge is to balance technological progress with social well-being, ensuring that AI complements human labor rather than replacing it.

Moral dilemmas

As AI systems become more adept at producing content that mimics human creativity, ethical questions also arise. For example, who owns the rights to art or literature produced by artificial intelligence? Determining the legal and ethical implications of works created by non-human entities challenges our conventional understanding of authorship and intellectual property.

The most exciting possibilities

Increasing creativity and productivity

A revolution in healthcare

Customized user experiences

Human and artificial intelligence cooperation

Innovation in art and entertainment

Conclusion

Google announces a new name for its artificial intelligence

Google has renamed its conversational AI, previously called Bard, to Gemini and released an Android app for it.

Just as Microsoft renamed its Bing chatbot Copilot to unify its AI branding, Google has done the same with Bard and Duet AI: both services are now called “Gemini”.

According to Engadget, news of the name change leaked at the beginning of this month.

Google has also introduced a dedicated Android app for Gemini, alongside a paid version of the chatbot with more advanced features.

“Bard has been the best way for people to experience our most powerful models firsthand,” Google CEO Sundar Pichai wrote in a blog post. To reflect the advanced technology at its core, he added, Bard is now simply called Gemini; it is available in 40 languages on the web, and a new app is coming to Android, alongside access through the Google app on iOS.

Users who download the Gemini Android app can set it as their device’s default assistant in place of Google Assistant. Then, when you long-press the home button or say “Hey Google,” your phone or tablet will activate Gemini instead of Google Assistant. You can also make this change in the settings.

Once you do this, a new conversational overlay appears on your screen. Along with quick access to Gemini, the overlay offers contextual suggestions, such as creating a caption for a photo you just took or requesting more information about an article on your screen.

You can also access common smart assistant features through the Gemini app, from making calls and setting alarms to controlling smart home devices.

Google has said it will bring more Assistant functions to Gemini in the future. This certainly makes it look as if Google is abandoning its smart assistant in favor of Gemini.

On iPhone (iOS), there is no standalone Gemini app for now. Instead, Gemini can be accessed through the Google app by tapping the Gemini button.

Gemini is available on Android and iOS in English in the US starting today.

Next week, Google will begin rolling out Gemini in more languages, including Japanese and Korean, and Gemini is expected to reach more countries in the near future.

In addition, Google is opening up access to its largest and most capable AI model, Ultra 1.0, through Gemini Advanced. The company claims this model can hold longer, deeper conversations and recall context from previous chats.

Google says Gemini Advanced is better at highly complex tasks such as coding, logical reasoning, following nuanced instructions, and collaborating on creative projects.

Gemini Advanced is now available in English in 150 countries and territories; to access it, you need to subscribe to the new Google One AI Premium plan, which costs $20 a month.

In addition to Gemini Advanced, this subscription includes everything in the Google One Premium plan, such as 2TB of storage and a VPN.

Subscribers will also soon be able to use Gemini in apps like Gmail, Docs, Slides, and Sheets, where it replaces Duet AI.

Notably, Google says it worked to mitigate concerns such as bias and unsafe content while developing Gemini Advanced and its other AI products. The company says it conducted extensive trust and safety checks, including red-teaming (testing by ethical third-party hackers), before refining the model with fine-tuning and reinforcement learning.
