
What is a CPU? Everything you need to know about processors


The central processing unit (CPU) is a vital element of any computer: it manages all the calculations and instructions that are passed to the other components of the computer and its peripheral equipment. Almost every electronic device and gadget you use, from desktops, laptops, and phones to gaming consoles and smartwatches, is equipped with a central processing unit. In fact, this unit is so fundamental that without it a computer will not even turn on, let alone be usable. The CPU executes the commands it receives at high speed, and the other components of the computer can do useful work only when they are connected to it.

- What is a processor?
- Processor performance
- Operating units of processors
- Processor architecture
- Set of instructions
- RISC vs. CISC or ARM vs. x86
- A brief history of processor architecture
- ARM and X86-64 architecture differences
- Processor performance indicators
- Processor frequency
- Cache memory
- Processing cores
- Difference between single-core and multi-core processing
- Processing threads
- What is hyper-threading or SMT?
- CPU in gaming
- What is a bottleneck?
- Setting up a balanced system

Since the central processing unit manages data for all parts of the computer at the same time, it may slow down as the volume of calculations and processes grows, and under heavy workloads it may even fail or crash. Today, the most common CPUs on the market are built from semiconductor components on integrated circuits and are sold in many variants; the leading manufacturers in this industry are AMD and Intel, which have been competing in this field for about 50 years.

What is a processor?

To understand the central processing unit (CPU), we first briefly introduce a part of the computer called the SoC. An SoC, or system on a chip, integrates on a single silicon chip all the components a computer needs for processing. The SoC contains various modules: the central processing unit is the main one, while the GPU, memory, USB controller, power management circuits, and wireless radios (Wi-Fi, 3G, 4G LTE, etc.) are additional components that may or may not be present on a given SoC. The central processing unit, which from here on we will simply call the processor, cannot process instructions independently of the other chips; a complete computer, however, can be built with an SoC.

The SoC is slightly larger than the CPU, yet offers much more functionality. Despite all the emphasis placed on processor technology and performance, the processor is not a computer in itself; it is best described as a very fast calculator that forms part of the system on a chip. It retrieves data from memory and then performs some kind of arithmetic (addition, multiplication) or logical (AND, OR, NOT) operation on it.

Processor performance


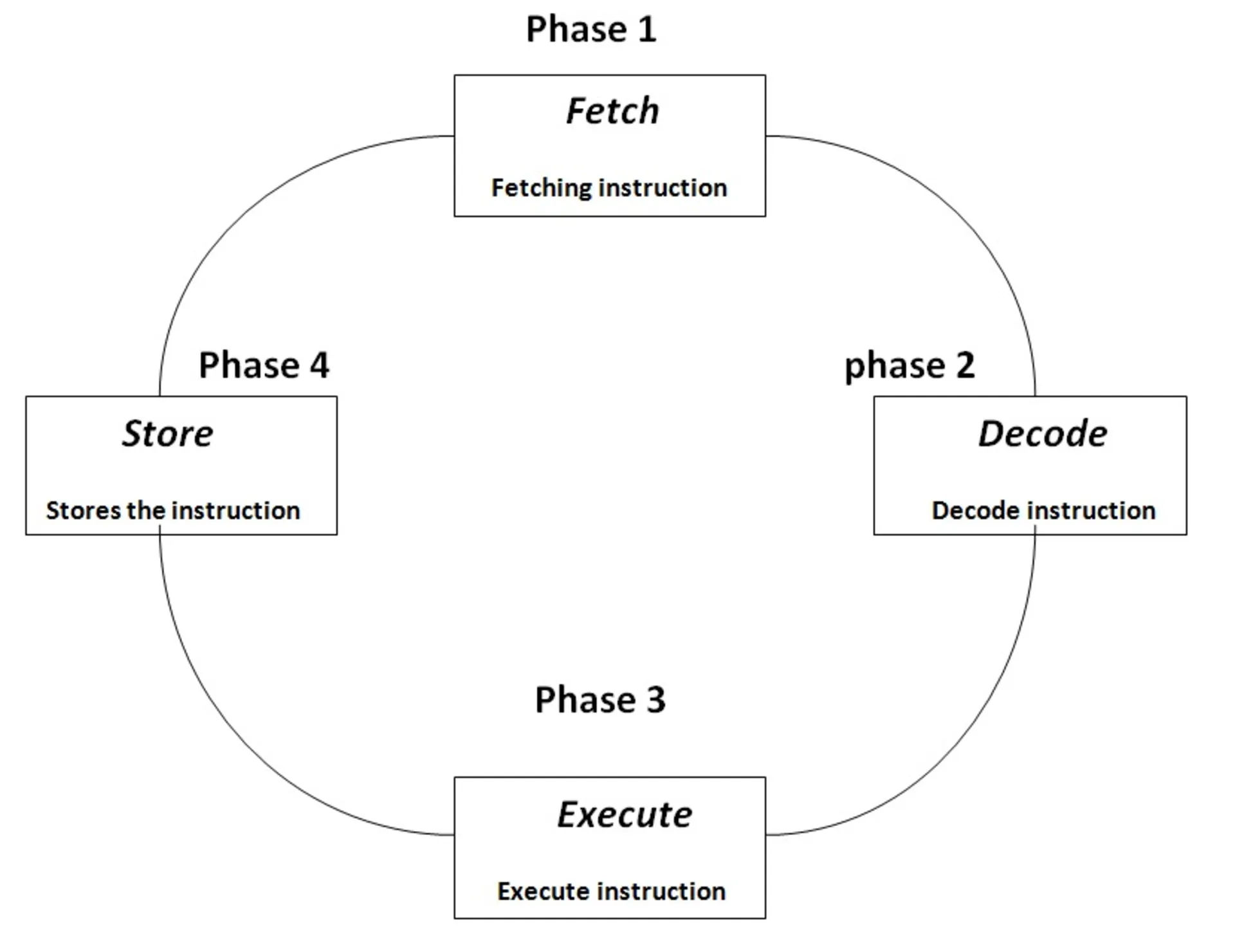

Processing an instruction involves four main steps, executed in order:

Fetching instructions from memory (Fetch): The processor first retrieves the instruction from memory so that it knows what to do with its input. A program may consist of one or very many instructions, each stored at its own memory location. A register called the program counter (PC) keeps track of the address of the next instruction to be fetched, and the processor reads the instruction at that address from RAM (a memory read).

Decoding instructions (Decode): The fetched instruction, stored in machine language (binary), is interpreted by the processor’s decoder to determine which operation to perform and on which data. Writing programs directly in binary is very difficult, so programs are written in more readable programming languages and translated ahead of time into executable machine code by tools such as compilers and assemblers; the processor only decodes this machine code.

Executing the decoded instructions (Execute): Execution is the most important step in the processor’s operation. At this stage, the decoded binary instruction is carried out, for example by the arithmetic and logic unit (ALU), which performs the calculation or logical operation the instruction calls for.

Storing the results (Store): The result of the instruction is written back to the processor’s registers, where later instructions can access it quickly, or to memory (a memory write).

The process described above is called the fetch-execute (or fetch-decode-execute) cycle, and it repeats millions, and in modern processors billions, of times per second: once the four steps are completed for one instruction, the processor moves on to the next one and repeats all the steps from the beginning until every instruction has been processed.
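To make the four steps concrete, here is a minimal sketch of a toy processor in Python. The instruction format (a tuple of opcode, destination register, and two operands) and the opcode names are hypothetical, invented only for illustration; real processors work on binary machine code, not Python tuples.

```python
# Toy CPU: each instruction is a tuple (opcode, dest, a, b).
# The opcodes and tuple format are hypothetical, chosen only to illustrate the cycle.

def run(program, num_registers=4):
    """Run the fetch-decode-execute-store cycle until a HALT instruction."""
    regs = [0] * num_registers   # register file
    pc = 0                       # program counter: index of the next instruction
    while True:
        instruction = program[pc]        # Fetch: read the instruction the PC points to
        pc += 1                          # move the PC on to the following instruction
        op, dest, a, b = instruction     # Decode: split the instruction into its fields
        if op == "HALT":
            return regs
        if op == "LOADI":                # Execute: load a constant value
            result = a
        elif op == "ADD":                # Execute: the "ALU" adds two registers
            result = regs[a] + regs[b]
        elif op == "MUL":                # Execute: the "ALU" multiplies two registers
            result = regs[a] * regs[b]
        else:
            raise ValueError(f"unknown opcode: {op}")
        regs[dest] = result              # Store: write the result back to a register


PROGRAM = [
    ("LOADI", 0, 6, 0),   # r0 <- 6
    ("LOADI", 1, 7, 0),   # r1 <- 7
    ("MUL", 2, 0, 1),     # r2 <- r0 * r1
    ("ADD", 3, 2, 0),     # r3 <- r2 + r0
    ("HALT", 0, 0, 0),
]

if __name__ == "__main__":
    print(run(PROGRAM))   # [6, 7, 42, 48]
```

Each pass through the `while` loop is one trip around the cycle; the program counter is the only thing that tells the loop which instruction comes next.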

Operating units of processors

Each processor consists of three operational units that play a role in the process of processing instructions:

Arithmetic & Logic Unit (ALU): This is a complex digital circuit unit that performs arithmetic and comparison operations; In some processors, the ALU is divided into two sections, AU (for performing arithmetic operations) and LU (for performing logical operations).

Control Unit (CU): This is a circuit that directs and manages operations within the processor, telling the arithmetic and logic unit and the input and output devices how to respond to each instruction. The operation of the control unit can differ from one processor to another depending on its design architecture.

Register unit (Register): The register unit is a unit in the processor that is responsible for temporarily storing processed data, instructions, addresses, sequence of bits, and output, and must have sufficient capacity to store these data. Processors with 64-bit architecture have registers with 64-bit capacity, and processors with 32-bit architecture have 32-bit registers.
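Register width determines the largest value a register can hold and what happens when a result does not fit. The sketch below is a simplified model, assuming unsigned values and ignoring the carry and overflow flags that real processors set; the function names are illustrative only.

```python
# Simplified model of unsigned arithmetic in a fixed-width register.
# Real processors also set flags (carry, overflow), which this sketch ignores.

def max_unsigned(bits: int) -> int:
    """Largest unsigned value an n-bit register can hold: 2**n - 1."""
    return (1 << bits) - 1


def register_add(a: int, b: int, bits: int) -> int:
    """Add two values and keep only the low `bits` bits, as a register of that width would."""
    return (a + b) & max_unsigned(bits)


if __name__ == "__main__":
    print(max_unsigned(32))                          # 4294967295
    print(register_add(max_unsigned(32), 1, 32))     # 0 (the 32-bit register wraps around)
    print(register_add(max_unsigned(32), 1, 64))     # 4294967296 (fits in 64 bits)
```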

Processor architecture

The relationship between the instructions and the processor’s hardware design forms the processor architecture. But what is a 64-bit or 32-bit architecture, and how do the two differ? To answer these questions, we must first become familiar with instruction sets and how their calculations are carried out:

Set of instructions

An instruction set is the set of operations that a processor can execute natively. It consists of simple, elementary instructions (such as addition, multiplication, and data transfer), ranging from dozens to thousands depending on the architecture, whose execution is defined in advance for the processor; if an operation is outside the scope of this instruction set, the processor cannot execute it directly.

As mentioned, the processor is responsible for executing programs. These programs are sets of instructions written in a programming language that must be executed step by step, in a logical order.

Since computers do not understand programming languages directly, these instructions must be translated into machine language, a binary form that the processor can execute. The binary form consists of only two digits, zero and one, which represent the two possible states of a transistor: on (one), letting current pass, or off (zero).

In fact, a processor can be thought of as a set of electrical circuits that implement its instruction set: when an instruction is given to the processor, the circuits for that operation are activated by an electrical signal, and the processor executes it.

Instructions consist of a certain number of bits. For example, in an 8-bit instruction, the first 4 bits might hold the operation code (opcode) and the next 4 bits the data to be used. Instruction lengths range from a few bits to several hundred bits, and some architectures use instructions of different lengths.
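The 8-bit example can be sketched with bit shifts and masks. This is a minimal illustration assuming exactly the layout described (high 4 bits for the opcode, low 4 bits for the operand); the opcode numbers in `OPCODES` are hypothetical and do not belong to any real instruction set.

```python
# Sketch of the 8-bit instruction format from the example above:
# high 4 bits = operation code (opcode), low 4 bits = operand.
# The opcode numbers assigned below are hypothetical.

OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011}
NAMES = {code: name for name, code in OPCODES.items()}


def encode(op: str, operand: int) -> int:
    """Pack an opcode and a 4-bit operand into one 8-bit instruction."""
    if not 0 <= operand <= 0b1111:
        raise ValueError("operand must fit in 4 bits (0-15)")
    return (OPCODES[op] << 4) | operand


def decode(instruction: int) -> tuple[str, int]:
    """Split an 8-bit instruction back into its opcode name and operand."""
    opcode = (instruction >> 4) & 0b1111   # first (high) 4 bits
    operand = instruction & 0b1111         # last (low) 4 bits
    return NAMES[opcode], operand


if __name__ == "__main__":
    word = encode("ADD", 5)
    print(format(word, "08b"))   # 00100101
    print(decode(word))          # ('ADD', 5)
```

Because only 4 bits are available, this format can distinguish at most 16 operations and 16 operand values, which is why real instruction sets use longer instructions.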

In general, the set of instructions is divided into the following two main categories:

- Reduced instruction set computer (RISC): In a RISC-based processor (pronounced "risk"), the set of defined operations is simple and basic. These processors execute instructions quickly and efficiently and are optimized to reduce execution time; RISC does not need complex circuits, and its design cost is low. RISC-based processors aim to complete each instruction in a single cycle and operate only on data held in registers. Their instructions are simple, they can run at higher frequencies, their data paths are more streamlined, and memory is accessed only through separate load and store instructions.

- Complex instruction set computer (CISC): CISC processors have an additional layer of microcode (microprogramming) that converts complex instructions into simple ones (such as addition or multiplication). The microcode is stored in fast memory inside the processor and can be updated. A CISC instruction set can include more instructions than a RISC one, and its instructions can have variable lengths. In fact, CISC is almost the opposite of RISC: a single CISC instruction can span multiple processor cycles, and data routing is not as efficient as in RISC processors. In general, CISC-based processors can perform several operations with a single complex instruction, but they need multiple cycles to complete it.
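The difference can be sketched with one CISC-style instruction that adds a register to a value stored in memory, next to the equivalent RISC-style sequence that touches memory only through load and store steps. This mirrors how a CISC processor breaks a complex instruction into simpler operations. The instruction names, register names, and dictionary-based memory are hypothetical simplifications.

```python
# Sketch: one CISC-style instruction that adds a register to a value in memory,
# versus the equivalent RISC-style sequence that touches memory only via LOAD/STORE.
# Instruction names, register names, and the dict-based memory are hypothetical.

def cisc_add_to_memory(memory: dict, regs: dict, addr: int, src: str) -> None:
    """A single 'complex' instruction: memory[addr] = memory[addr] + regs[src]."""
    memory[addr] = memory[addr] + regs[src]


def to_micro_ops(addr: int, src: str, tmp: str = "t0") -> list:
    """Break the complex instruction into three simple register-based steps."""
    return [
        ("LOAD", tmp, addr),      # tmp <- memory[addr]
        ("ADD", tmp, tmp, src),   # tmp <- tmp + src (registers only)
        ("STORE", tmp, addr),     # memory[addr] <- tmp
    ]


def run_micro_ops(ops: list, memory: dict, regs: dict) -> None:
    """Execute simple load/add/store steps one at a time."""
    for op in ops:
        kind = op[0]
        if kind == "LOAD":
            _, dst, addr = op
            regs[dst] = memory[addr]
        elif kind == "ADD":
            _, dst, a, b = op
            regs[dst] = regs[a] + regs[b]
        elif kind == "STORE":
            _, src, addr = op
            memory[addr] = regs[src]
        else:
            raise ValueError(f"unknown micro-op: {kind}")


if __name__ == "__main__":
    mem_cisc, mem_risc = {100: 5}, {100: 5}
    cisc_add_to_memory(mem_cisc, {"r1": 3}, 100, "r1")
    ops = to_micro_ops(100, "r1")
    run_micro_ops(ops, mem_risc, {"r1": 3})
    print(mem_cisc[100], mem_risc[100], len(ops))   # 8 8 3
```

Both paths give the same result; the CISC version expresses it as one instruction, while the RISC version needs three simpler ones.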

RISC vs. CISC or ARM vs. x86

RISC and CISC are the two ends of the instruction set spectrum, and many combinations of the two exist in between. First, let’s state the basic differences between RISC and CISC:

| RISC (Reduced Instruction Set Computer) | CISC (Complex Instruction Set Computer) |
|---|---|
| RISC instructions are simple; each performs only one operation, and the processor can process it in one cycle. | CISC instructions perform multiple operations, and the processor cannot process them in a single cycle. |
| RISC-based processors have simpler, more streamlined data paths; the instructions are so simple that their execution can be split into stages. | CISC-based processors are complex by nature, and their instructions are more difficult to execute. |
| RISC-based processors can only operate on data that has already been loaded into registers. | CISC-based processors can operate on data directly in RAM, with no need for separate load operations. |
| RISC requires less complex hardware and relies more on software (the compiler) to build complex operations from simple instructions. | CISC has higher hardware requirements: complex instructions are implemented in hardware, so software is often simpler than for RISC. This is why programs for CISC designs need less code; the instructions themselves do much of the work. |

As mentioned, modern processors use a combination of these two approaches. For example, the x86 architecture used by Intel and AMD is originally based on the CISC instruction set, but today’s x86 processors internally break complex instructions into simpler, RISC-like operations. Now that we have explained the differences between the two main categories of instruction sets, we will examine how they are applied in processor architecture.

If you pay attention to the processor architecture when choosing a phone or tablet, you will notice that some models use Intel processors, while others are based on ARM architecture.

If every processor had its own instruction set, every program would have to be compiled separately for each processor. For example, a separate version of Windows would have to be developed for each AMD processor, and thousands of versions of Photoshop would have to be written for different processors. For this reason, standard architectures based on RISC, CISC, or a combination of the two were designed, and their specifications were made available to everyone. ARM, PowerPC, x86-64, and IA-64 are examples of these architecture standards; below we introduce two of the most important ones and their differences:

A brief history of processor architecture

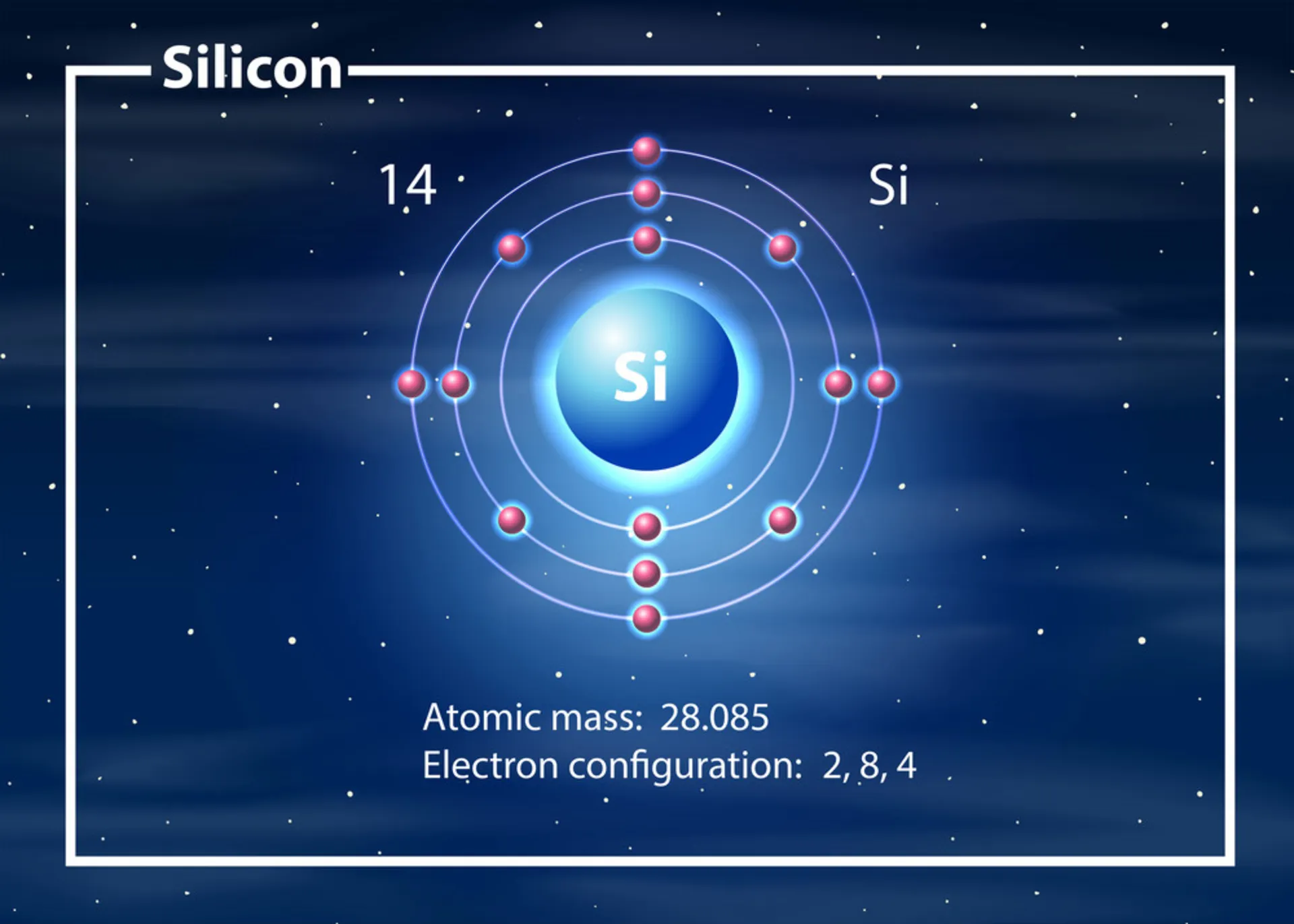

In 1823, the Swedish chemist Jöns Jacob Berzelius discovered the chemical element silicon (symbol Si, atomic number 14). Because it is abundant and has strong semiconductor properties, silicon is the main material used to make processors and computer chips. More than a century later, in 1947, John Bardeen, Walter Brattain, and William Shockley invented the first transistor at Bell Labs, for which they later received the Nobel Prize in Physics.

The first working integrated circuit (IC) was unveiled in September 1958, and two years later IBM opened the first automated mass-production facility for transistors in New York. Intel was founded in 1968, and AMD a year later.

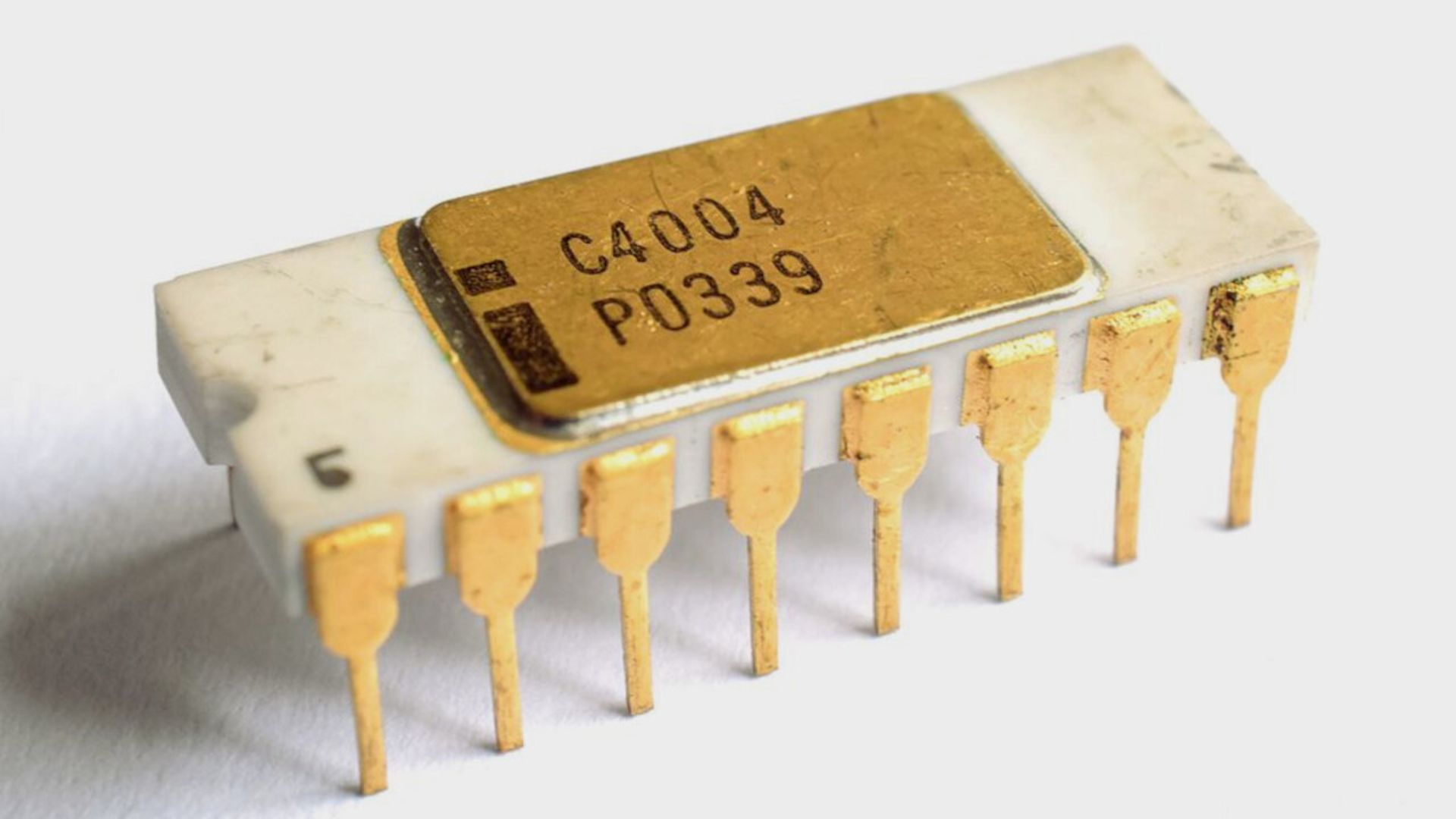

The first commercial microprocessor was introduced by Intel in the early 1970s. Called the Intel 4004, it contained 2,300 transistors and performed 60,000 operations per second. The Intel 4004 was priced at $200 and could address only 640 bytes of memory.

Intel CPU C4004 P0339

After Intel, Motorola introduced its first 8-bit processor, the MC6800, running at one to two MHz. MOS Technology then introduced a processor that was faster and cheaper than existing ones; it was used in game consoles of the time, such as the Atari 2600 and Nintendo systems, and in home computers such as the Apple II and Commodore 64. In 1979, Motorola developed a processor with a 32-bit internal architecture, which was used in computers such as Apple’s Macintosh and the Amiga. A little later, National Semiconductor released the first 32-bit processor for general use.

In 1993, the first PowerPC processor, based on a 32-bit instruction set, was released. It was developed by the AIM alliance (Apple, IBM, and Motorola), and Apple subsequently moved its Macintosh computers from Motorola’s 68000-series processors to PowerPC.

The difference between 32-bit and 64-bit processors (x86 vs. x64): Simply put, x86 refers to the family of instruction sets that descends from one of Intel’s most successful processors, the 8086. A processor compatible with this architecture is described as x86-32 (or simply x86) when it runs 32-bit (and 16-bit) versions of Windows, and as x86-64 when it supports 64-bit code. In everyday usage, 64-bit processors are called x64 and 32-bit processors are called x86.

The biggest difference between 32-bit and 64-bit processors is their different access to RAM:

The maximum memory that a 32-bit (x86) processor can address is limited to 4 GB, while 64-bit (x64) processors can address far more; in practice, the limit is set by the processor and the operating system, and PCs commonly ship with 8, 16, or 32 GB of RAM or more. A 64-bit computer can run both 32-bit and 64-bit programs, whereas a 32-bit computer can only run 32-bit programs.
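The 4 GB ceiling follows directly from the number of address bits: an n-bit address can refer to at most 2^n bytes. The sketch below computes this theoretical maximum; it assumes byte-addressable memory, and real processors implement fewer than 64 address bits (many x86-64 processors, for example, use 48-bit virtual addresses), with operating systems adding their own limits.

```python
# Sketch: how many bytes an n-bit address can refer to (2**n).
# This is the theoretical ceiling; real processors implement fewer address bits
# and operating systems add their own limits.

GIB = 2 ** 30   # 1 GiB = 1,073,741,824 bytes
TIB = 2 ** 40


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses available with the given address width."""
    return 2 ** address_bits


if __name__ == "__main__":
    print(addressable_bytes(32) // GIB)   # 4 -> the 4 GB ceiling of 32-bit addressing
    print(addressable_bytes(48) // TIB)   # 256 -> 256 TiB with 48-bit addresses
    print(addressable_bytes(64) // GIB)   # 17179869184 GiB (16 EiB), the theoretical 64-bit limit
```

Note that "4 GB" here means 4 GiB, that is, 2^32 = 4,294,967,296 bytes.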

In most cases, 64-bit processors are more efficient than 32-bit processors when processing large amounts of data. To find out whether your Windows installation is 32-bit or 64-bit, follow either of these two paths:

- Press Win + X to open the context menu, then click System. In the window that opens, find System type under Device specifications; it shows whether your Windows is 64-bit or 32-bit.

- Type msinfo32 in the Windows search box and open System Information. In the System Summary on the right, find System Type to see whether your Windows system is x64-based or x86-based.
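As an alternative to these dialogs, a short Python script can report similar information on any operating system. This is a sketch using the standard `platform`, `struct`, and `sys` modules; note that it reports the architecture of the machine and of the running Python build, and a 32-bit Python interpreter can run on a 64-bit operating system, so the two can differ.

```python
# Cross-platform sketch for checking bitness from Python instead of the Windows dialogs.
# It reports the machine type and the bitness of the running Python build;
# a 32-bit Python interpreter can run on a 64-bit operating system.
import platform
import struct
import sys


def python_bits() -> int:
    """Pointer size of the running Python build, in bits (32 or 64)."""
    return struct.calcsize("P") * 8


def describe_system() -> dict:
    return {
        "machine": platform.machine(),          # typically 'AMD64' or 'x86_64' on 64-bit x86, 'arm64'/'aarch64' on ARM
        "python_bits": python_bits(),
        "is_64bit_python": sys.maxsize > 2**32,  # True only for a 64-bit interpreter
    }


if __name__ == "__main__":
    print(describe_system())
```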


ARM is a computer processor architecture introduced by Acorn in the 1980s. Before ARM, both AMD and Intel used Intel’s x86 architecture, based on CISC computing, while IBM used RISC computing in its workstations. Acorn was the first company to develop a home computer based on RISC computing, and the architecture was named after it: Acorn RISC Machine (ARM). A few years later, the name was changed from Acorn RISC Machine to Advanced RISC Machines. ARM does not manufacture processors itself; instead, it sells licenses to use the ARM architecture to other processor manufacturers.

The ARM architecture processes 32-bit instructions, and the core of a processor based on it needed only about 35,000 transistors, whereas processors based on Intel’s CISC x86 architecture need many more. In fact, the low energy consumption of ARM-based processors, and their suitability for devices such as phones and tablets, is related to this low transistor count compared with Intel’s x86 architecture.

In 2011, ARM introduced the ARMv8 architecture with support for 64-bit instructions and a year after that, Microsoft also launched a Windows version compatible with the ARM architecture along with the Surface RT tablet.

ARM and X86-64 architecture differences

The ARM architecture is designed to be as simple as possible while keeping power dissipation to a minimum. Intel, on the other hand, uses more complex designs in the x86 architecture, which suit more powerful desktop and laptop processors.

Computers moved to 64-bit after AMD introduced the modern x86-64 architecture (also known as AMD64 or x64), which Intel later adopted. 64-bit processing benefits workloads such as 3D rendering and encryption, which work with large numbers and large amounts of memory. Today, both architectures support 64-bit instructions: x86-64 arrived in the early 2000s, and ARM followed with ARMv8 in 2011.

When ARM implemented 64-bit support in ARMv8, it defined two execution states: AArch32 and AArch64. The first runs 32-bit code and the second runs 64-bit code.

The ARM architecture is designed so that the processor can switch between these two states very quickly. Because AArch64 is a separate instruction set, the 64-bit instruction decoder does not need to handle 32-bit instructions, while backward compatibility is provided through the AArch32 state. However, ARM has announced that from 2023 its ARMv9 Cortex-A cores will support only 64-bit instructions, ending support for 32-bit applications and operating systems in these next-generation processors.

The differences between the ARM and Intel architectures largely reflect the achievements and challenges of the two companies. ARM’s focus on energy efficiency suits phones that must run on less than 5 watts, and it also allows ARM-based processors to scale up to the performance of Intel’s laptop processors. Intel’s Core i7 and Core i9 processors, like AMD’s, are designed for power budgets of around 100 watts and have been very successful in high-end desktops and servers; historically, however, Intel has not been able to bring this power consumption below 5 watts.

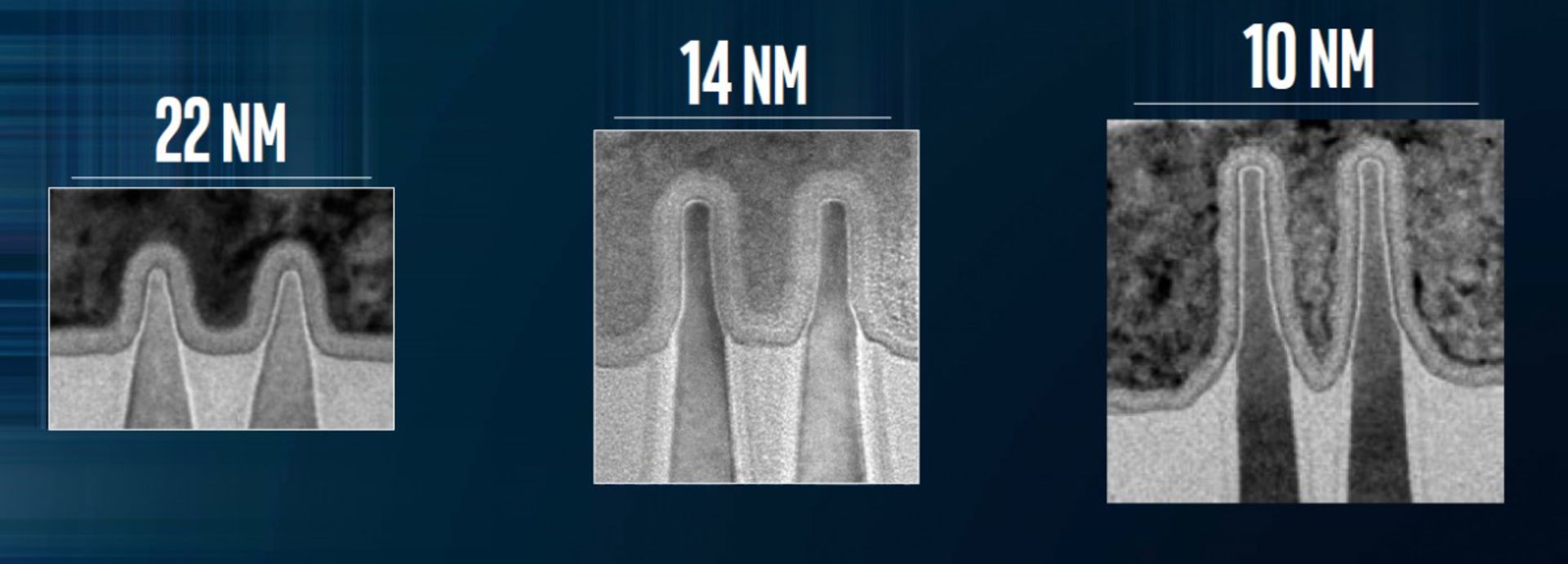

Processors that use more advanced transistors consume less power, and Intel has long been trying to move its manufacturing process from 14nm to more advanced nodes. The company recently succeeded in producing processors on a 10nm process, but in the meantime, mobile processors have moved from 20nm to 14nm, 10nm, and 7nm designs, driven by competition from Samsung and TSMC. AMD, for its part, unveiled 7nm Ryzen processors and moved ahead of its x86-64 competitor in manufacturing technology.

Nanometer: a meter divided by a thousand is a millimeter, a millimeter divided by a thousand is a micrometer, and a micrometer divided by a thousand is a nanometer. In other words, a nanometer is one billionth of a meter.
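Written as powers of ten, the same chain of conversions reads:

$$1\ \text{nm} = 10^{-3}\ \mu\text{m} = 10^{-6}\ \text{mm} = 10^{-9}\ \text{m}$$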

Lithography or manufacturing process: lithography comes from Greek words meaning "stone writing". In chip making, it refers to the process of producing and forming the circuits, or the way components are placed on a processor, and it is carried out by specialized manufacturers such as TSMC. From the first processors until a few years ago, the nanometer figure indicated the spacing between processor components; for example, the 14nm process of the Skylake processors in 2015 meant that the components of those processors were 14nm apart. At that time, it was believed that the smaller the process, the more efficient the energy consumption and the better the performance.

Today, the spacing between components is no longer very meaningful, and process names are more of a convention, because these distances cannot be reduced beyond a certain limit without losing efficiency. Over time, as technology has advanced, transistor designs have changed, and the number of transistors per processor has grown, manufacturers have adopted various other techniques, such as 3D stacking, to fit more transistors on their processors.

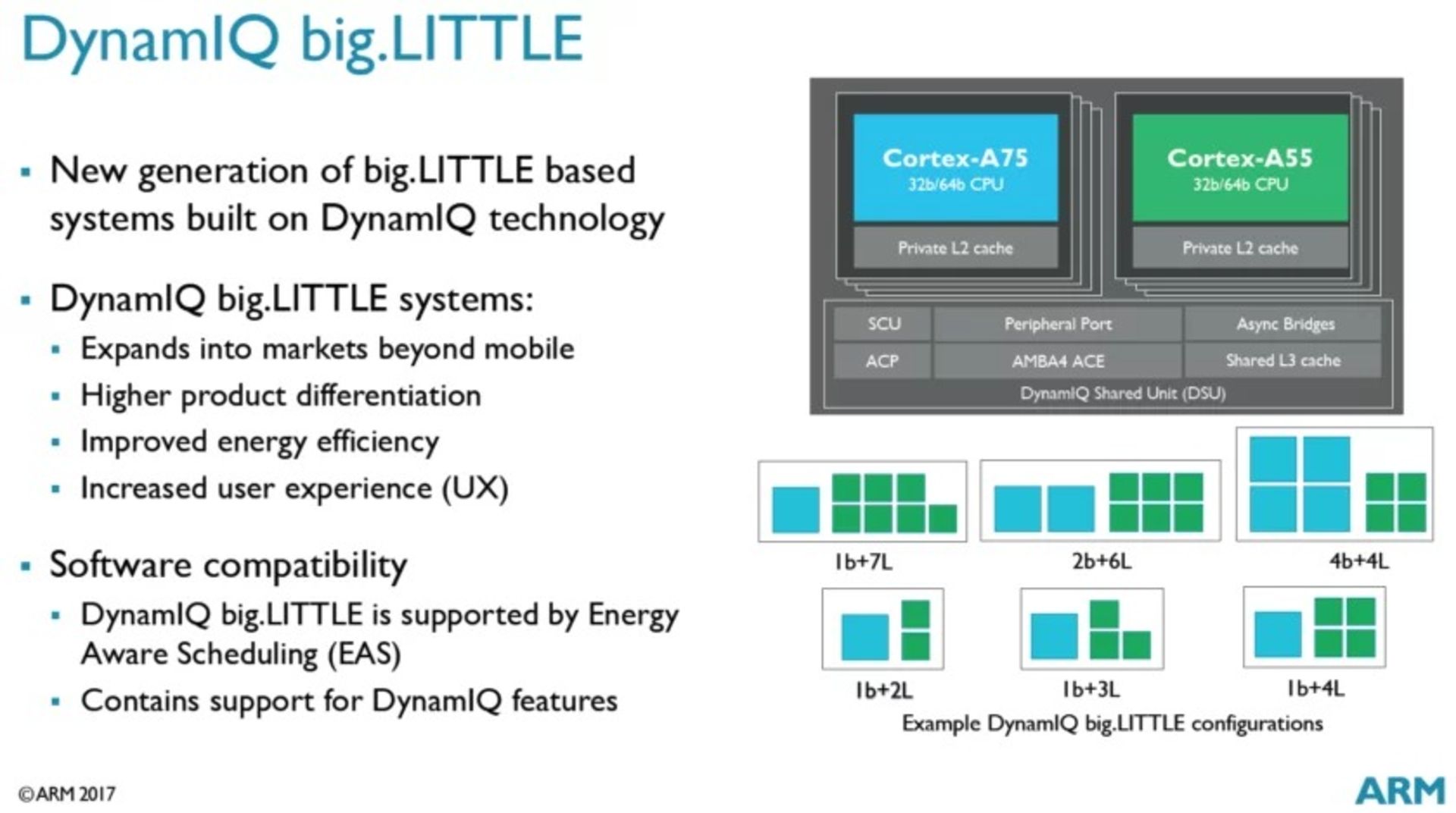

The most distinctive feature of the ARM architecture is its low power consumption when running mobile applications. This comes from ARM’s heterogeneous processing capability: the architecture allows work to be divided between powerful and low-power cores, so energy is used more efficiently.

ARM’s first attempt in this field was the big.LITTLE architecture in 2011, which paired large Cortex-A15 cores with small Cortex-A7 cores. The idea of using powerful cores for heavy applications and low-power cores for light and background processing may not have received the attention it deserved at first, and ARM went through many unsuccessful attempts before it succeeded. Today, ARM is the dominant architecture in the mobile market; for example, iPads and iPhones use the ARM architecture exclusively.
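The big/little idea can be illustrated with a toy placement rule: demanding foreground work goes to a powerful core, everything else to an efficient one. This is a simplified sketch, not how any real scheduler works; the `Task` fields, the 0.6 threshold, and the "big"/"little" labels are hypothetical, and real operating-system schedulers use far richer load and energy models.

```python
# Toy model of heterogeneous (big.LITTLE-style) task placement.
# The load metric, the 0.6 threshold, and the core labels are hypothetical;
# real operating-system schedulers use much richer load and energy models.
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    load: float        # estimated demand from 0.0 (idle) to 1.0 (fully busy)
    foreground: bool   # whether the user is actively waiting on this task


def place(task: Task, heavy_threshold: float = 0.6) -> str:
    """Send demanding foreground work to a big core; everything else to a little core."""
    if task.foreground and task.load >= heavy_threshold:
        return "big"
    return "little"


if __name__ == "__main__":
    tasks = [
        Task("game", 0.9, True),
        Task("email sync", 0.2, False),
        Task("music playback", 0.1, True),
    ]
    for t in tasks:
        print(t.name, "->", place(t))
```

Only the game lands on a big core; background syncing and light playback stay on little cores, which is where the energy savings come from.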

In the meantime, Intel’s Atom processors, which did not benefit from heterogeneous processing, could not compete with the performance and optimal consumption of processors based on ARM architecture, and this made Intel lag behind ARM.

Finally, in 2020, Intel used a hybrid architecture, with one powerful core (Sunny Cove) and four low-power cores (Tremont), in its 10nm Lakefield processors, which also integrate graphics and connectivity. However, this product was designed for laptops with a power consumption of 7 watts, which is still considered high for phones.

Another important distinction between Intel and ARM lies in how they use their designs. Intel uses its architecture in the processors it manufactures and sells itself, while ARM licenses its designs and architecture, with customization options, to other companies such as Apple, Samsung, and Qualcomm, which can then adapt them to their own goals.

Manufacturing custom processors is expensive and complicated, but if done right, the end products can be very powerful. For example, Apple has repeatedly shown that customizing the ARM architecture can bring its processors on par with x86-64 processors or beyond.

Apple plans to eventually replace all the Intel processors in its Mac products with ARM-based silicon. The M1 chip, released with the MacBook Air, MacBook Pro, and Mac mini, was Apple’s first step in this direction. After that, the M1 Max and M1 Ultra chips also showed that the ARM architecture, combined with Apple’s improvements, could challenge the x86-64 architecture.

As mentioned earlier, standard architectures based on RISC, CISC, or a combination of the two were designed, and their specifications were made available to everyone. Applications must be compiled for the processor architecture they run on. This was not a big concern in the past, when platforms were more limited, but today the number of applications that need separate builds for different platforms has grown.

ARM-based Macs, Google’s Chrome OS, and Microsoft’s Windows are all examples of platforms that require software to run on both ARM and x86-64 architectures. Compiling software natively for each architecture is the ideal solution in such a situation.

Alternatively, these platforms can emulate each other’s code, so that code compiled for one architecture can run on another. This approach is naturally slower than developing an application natively for each platform, but the ability to emulate code is very promising for now.

After years of development, the emulation layer in Windows on ARM-based devices now delivers acceptable performance for most applications, Android applications run more or less satisfactorily on Intel-based Chromebooks, and Apple’s own code translation tool, Rosetta 2, supports older Mac applications developed for the Intel architecture.

However, as mentioned, all three run programs more slowly than versions written specifically for each platform. In general, the ARM and Intel x86-64 architectures can be compared as follows:

| Architecture | ARM | x86-64 |
|---|---|---|
| RISC vs. CISC | ARM is a RISC architecture for processors and therefore has no single manufacturer; it is used in the processors of Android phones and iPhones. | x86 is a CISC architecture developed by Intel and used in the desktop and laptop processors of Intel and AMD. |
| Complexity of instructions | ARM aims to execute each instruction in a single cycle, which makes processors based on it well suited to devices with simpler processing needs. | The Intel (x86) architecture, also associated with 32-bit Windows applications, uses CISC computing, so its instruction set is more complex and its instructions often need several cycles to execute. |
| Mobile CPUs vs. desktop CPUs | Because ARM relies more on software, it is used mainly in phone processors; in general, ARM works better in smaller devices that do not have constant access to a power supply. | Because x86 relies more on hardware, it is typically used in processors for larger devices such as desktops; Intel focuses more on performance, and x86 is considered suitable for a wider range of technologies. |
| Energy consumption | Thanks to its simple, single-cycle instructions, ARM not only consumes less energy but also runs cooler than Intel’s x86; it is well suited to phone processors because it reduces the energy needed to keep the system running and execute the user’s commands. | Intel’s architecture is focused on performance, which is not a problem for desktop or laptop users with access to an unlimited power source. |
| Processor speed | ARM-based CPUs are usually slower than their Intel counterparts because they compute with less power to save energy. | Processors based on Intel’s x86 architecture are used for faster computing. |
| Operating system | ARM is more efficient for Android phone processors and is the dominant architecture in this market. Devices based on x86 can also run the full range of Android applications, but these applications must first be translated, which costs time and energy and can hurt battery life and overall performance. | Intel’s architecture dominates Windows PCs and tablets. That said, in 2019 Microsoft released the Surface Pro X with an ARM-based processor that can run the full version of Windows. If you are a gamer, or expect more from your tablet than running full Windows, Intel’s architecture is still the better choice. |

Over the past ten years of competition between ARM and x86, ARM can be considered the winner for low-power devices such as phones, and it has also made great strides in laptops and other devices that need to be energy efficient. Although Intel has lost the phone market, its efforts to reduce energy consumption have brought significant improvements over the years, and with hybrid designs such as Lakefield and Alder Lake, its processors now have more in common with ARM-based processors than ever before. ARM and x86 remain distinctly different from an engineering point of view, each with its own strengths and weaknesses; however, it is no longer easy to separate their use cases, as both architectures are increasingly supported across ecosystems.

Processor performance indicators

Processor performance has a great impact on how quickly programs load and how smoothly they run, and there are various measures of a processor’s performance, of which frequency (clock speed) is one of the most important. Be careful, though: the frequency of each core can serve as a measure of its processing power, but it does not necessarily reflect the overall performance of the processor. Many other factors, such as the number of cores and threads, the internal architecture (synergy between cores), cache capacity, overclocking capability, thermal design power, power consumption, and IPC, must also be considered when judging overall performance.

Synergy is an effect that results from the flow or interaction of two or more elements. If this effect is greater than the sum of the effects that each of those individual elements could create, then synergy has occurred.

In the following, we will explain more about the factors influencing the performance of the processor:

Processor frequency

One of the most important factors in choosing and buying a processor is its frequency (clock speed), which is usually quoted as a single number for all of its cores. The number of operations the processor performs per second is known as its speed and is expressed in hertz: megahertz (MHz) for older processors and gigahertz (GHz) for modern ones.


More precisely, frequency refers to the number of computing cycles that the processor’s cores perform per second and is measured in gigahertz (1 GHz = one billion cycles per second).

For example, a 3.2 GHz processor runs 3.2 billion cycles per second. Processors passed a frequency of one megahertz (MHz), or one million cycles per second, in the early 1970s, and around 2000 they reached one gigahertz (GHz), equal to one billion hertz, which then became the standard unit for measuring their frequency.
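The relationship between frequency and the length of a single cycle is a simple reciprocal: period = 1 / frequency. The sketch below applies it to the 3.2 GHz example; the function names are illustrative.

```python
# Sketch: converting clock frequency to cycle time and cycle counts.
# period (s) = 1 / frequency (Hz); with frequency in GHz the period comes out in ns.

def cycle_time_ns(frequency_ghz: float) -> float:
    """Length of one clock cycle in nanoseconds."""
    return 1.0 / frequency_ghz


def cycles_per_second(frequency_ghz: float) -> float:
    """Number of clock cycles completed in one second."""
    return frequency_ghz * 1e9


if __name__ == "__main__":
    print(f"{cycles_per_second(3.2):.3g} cycles per second")   # 3.2e+09 cycles per second
    print(f"{cycle_time_ns(3.2):.4f} ns per cycle")            # 0.3125 ns per cycle
```

At 3.2 GHz, each cycle lasts about a third of a nanosecond.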

Sometimes several instructions are completed in one cycle, and in other cases a single instruction takes several cycles. Because each processor architecture and design executes instructions differently, cores running at the same frequency can have different processing power. In fact, without knowing the number of instructions processed per cycle (IPC), comparing the frequencies of two processors tells you very little.

Suppose we have two processors, one made by Company A and the other by Company B, both running at 1 GHz. With no other information, we might consider the two equal in performance; but if Company A’s processor completes one instruction per cycle and Company B’s completes two, Company B’s processor will clearly be faster.

In simpler words, at the same frequency, a processor with a higher IPC can do more processing and is more powerful. So, to properly evaluate a processor’s performance, you need to know how many instructions it executes per cycle in addition to its frequency.

Therefore, frequency comparisons are most meaningful between processors of the same series and generation. A newer processor with a lower frequency may well outperform a five-year-old processor with a higher frequency, because newer architectures handle instructions more efficiently.

Intel’s X-series processors may outperform higher-frequency K-series processors because they split tasks across more cores and have larger caches. On the other hand, within the same generation, a processor with a higher frequency usually performs better than one with a lower frequency in many applications. This is why the manufacturer and the processor generation matter so much when comparing processors.
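The comparisons above come down to one multiplication: instructions per second is roughly frequency times IPC. The Python sketch below is a simplified model, not a benchmark. It assumes each processor sustains a fixed IPC, and the IPC values for the "older" and "newer" chips are hypothetical numbers chosen for illustration; real IPC varies with the workload, memory stalls, and branch behavior.

```python
def instructions_per_second(frequency_ghz: float, ipc: float) -> float:
    """Idealized throughput: clock cycles per second multiplied by instructions per cycle."""
    cycles_per_second = frequency_ghz * 1e9  # 1 GHz = one billion cycles per second
    return cycles_per_second * ipc


def cycle_time_ns(frequency_ghz: float) -> float:
    """Length of one clock cycle in nanoseconds (the reciprocal of the frequency)."""
    return 1.0 / frequency_ghz


# The two 1 GHz processors from the Company A / Company B example.
company_a = instructions_per_second(frequency_ghz=1.0, ipc=1.0)
company_b = instructions_per_second(frequency_ghz=1.0, ipc=2.0)
print(f"Company B is {company_b / company_a:.1f}x faster than Company A")

# Hypothetical chips: an older, higher-clocked design vs. a newer, lower-clocked one.
older_chip = instructions_per_second(frequency_ghz=4.0, ipc=2.5)
newer_chip = instructions_per_second(frequency_ghz=3.0, ipc=4.0)
print(f"Older: {older_chip:.2e} instr/s, newer: {newer_chip:.2e} instr/s")

print(f"One cycle at 3.2 GHz lasts {cycle_time_ns(3.2):.4f} ns")
```

With these assumed numbers, the newer chip wins despite its lower clock, which is why frequencies are best compared only within the same series and generation.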

Base frequency and boost frequency: The base frequency is the frequency the processor is rated to sustain within its specified power and thermal limits; at idle or under light loads, modern processors often run at or even below it. The boost frequency shows how far the processor can raise its clock when running heavier calculations or more demanding processes. Boosting is applied automatically and is limited by power and heat, so the processor scales back before it reaches unsafe temperatures.

In practice, a processor’s frequency cannot be raised without running into physical limits (mainly power and heat), and beyond about 3 GHz, power consumption increases disproportionately.
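A common first-order model helps explain why: the dynamic (switching) power of a CMOS chip is roughly P ≈ α × C × V² × f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the frequency. Higher clocks generally need higher voltage, and voltage enters the formula squared. The sketch below assumes a hypothetical linear voltage curve (1.0 V at 3 GHz plus 0.2 V for each additional GHz); real voltage/frequency curves differ from chip to chip, and the 3 GHz figure above is not derived from this model.

```python
def required_voltage(frequency_ghz: float) -> float:
    """Hypothetical voltage curve: 1.0 V at 3 GHz, plus 0.2 V per additional GHz."""
    return 1.0 + 0.2 * (frequency_ghz - 3.0)


def relative_dynamic_power(frequency_ghz: float, baseline_ghz: float = 3.0) -> float:
    """Dynamic power (P ~ a * C * V^2 * f) relative to the baseline clock.

    The activity factor a and capacitance C are assumed constant, so they cancel out.
    """
    power = required_voltage(frequency_ghz) ** 2 * frequency_ghz
    baseline = required_voltage(baseline_ghz) ** 2 * baseline_ghz
    return power / baseline


for f in (3.0, 4.0, 5.0):
    print(f"{f:.1f} GHz: {f / 3.0:.2f}x frequency -> {relative_dynamic_power(f):.2f}x power")
```

Under these assumptions, going from 3 GHz to 4 GHz (1.33x the frequency) costs 1.92x the dynamic power, and 5 GHz costs more than three times the baseline.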

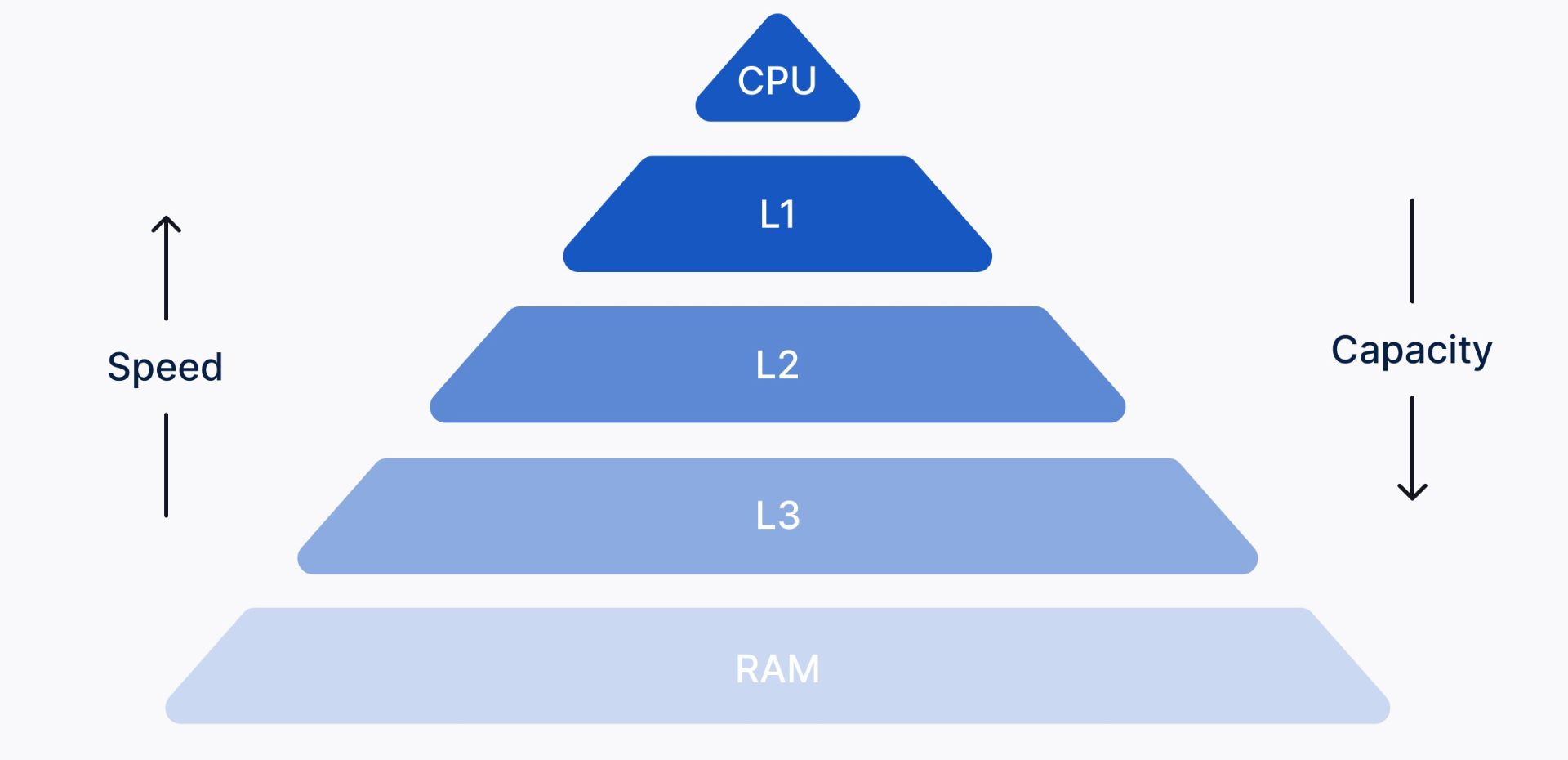

Cache memory

Another factor that affects processor performance is the capacity of its cache memory, a small, fast memory built into the processor. Because it sits close to the cores, the cache works much faster than the system’s main RAM, and the processor uses it to store data temporarily and reduce the time spent transferring data to and from system memory.

Cache can therefore have a large impact on processor performance: the more cache a processor has, the better it tends to perform. Fortunately, benchmark tools are now widely available, so users can evaluate processor performance themselves, regardless of manufacturers’ claims.

Cache memory is organized in levels, indicated by the letter L. Processors usually have up to three or four levels: the first level (L1) is faster than the second (L2), the second is faster than the third (L3), and the third is faster than the fourth (L4). In total, cache usually offers up to several tens of megabytes of storage, and the more space there is, the higher the price of the processor.

The cache holds copies of the data the processor is working with. Because it is faster than the computer’s RAM, it reduces delays in executing instructions: the processor first checks the cache for the data it needs, and only if the data is not there does it go to RAM.

- Level 1 (L1) cache, also called the primary or internal cache, is the closest memory to the processor core. It is faster and smaller than the other cache levels and stores the data most needed for processing, because the processor checks L1 first when processing an instruction.

- Level 2 (L2) cache, sometimes called external cache, is slower and larger than L1; depending on the processor design, it may be private to each core or shared between cores. Unlike L1, L2 sat on the motherboard in older computers, but in modern processors it is built into the processor itself and has lower latency than the next level, L3.

- L3 cache is usually shared by all the cores in the processor; it has a larger capacity than L1 or L2 but is slower.

- Like L3, L4 cache is larger and slower than L1 or L2; L3 and L4 are usually shared between cores.
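The "check the cache first, then RAM" order described above can be summarized as an average memory access time: each level’s latency is paid only by the accesses that reach it. In this Python sketch, the latencies (in clock cycles) and hit rates are hypothetical round numbers chosen for illustration, not measurements of any real processor; each hit rate is the fraction of requests reaching that level that are found there.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class CacheLevel:
    name: str
    latency_cycles: float  # time to check this level
    hit_rate: float        # share of requests reaching this level that hit here


def average_access_time(levels: Sequence[CacheLevel], ram_latency_cycles: float) -> float:
    """Average cycles per memory access when levels are checked in order, then RAM."""
    total = 0.0
    reach = 1.0  # fraction of all accesses that get as far as the current level
    for level in levels:
        total += reach * level.latency_cycles
        reach *= 1.0 - level.hit_rate  # misses continue to the next level
    return total + reach * ram_latency_cycles


# Hypothetical hierarchy: small, fast L1 down to large, slow L3.
hierarchy = [
    CacheLevel("L1", latency_cycles=4, hit_rate=0.90),
    CacheLevel("L2", latency_cycles=12, hit_rate=0.80),
    CacheLevel("L3", latency_cycles=40, hit_rate=0.50),
]
print(f"With caches: {average_access_time(hierarchy, 200):.1f} cycles per access")
print(f"RAM only:    {average_access_time([], 200):.1f} cycles per access")
```

With these assumed figures, most accesses are served by L1, so the average cost stays around 8 cycles instead of the 200 cycles of going to RAM every time.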

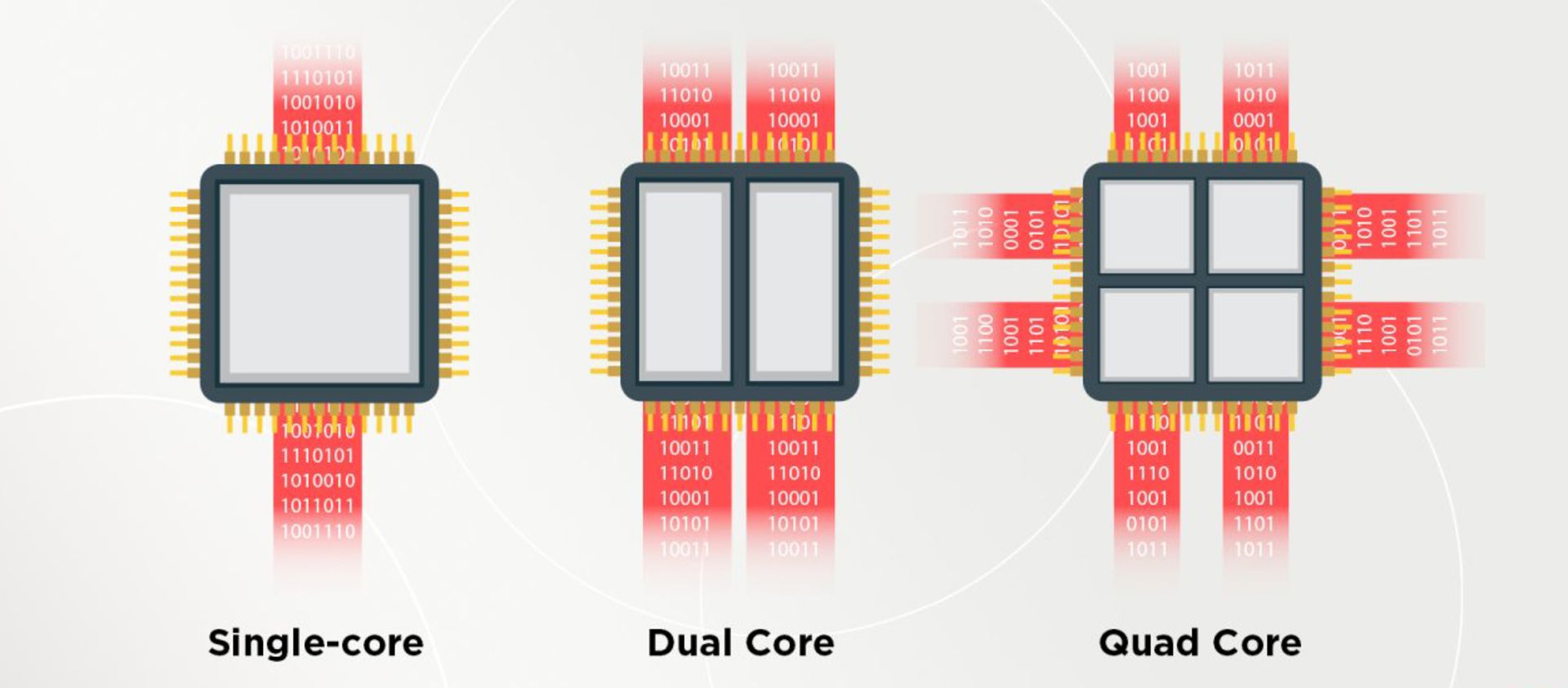

Processing cores

The core is the processing unit of the processor that can independently perform all computing tasks; in this sense, a core can be considered a small processor within the central processing unit. Each core contains an arithmetic logic unit (ALU), a control unit (CU), and registers, which together process instructions through the fetch-decode-execute cycle.
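To make the fetch-decode-execute cycle concrete, here is a toy single-core machine in Python. The instruction set (LOAD, ADD, SUB, MUL, JNZ, HALT) is hypothetical and invented for this sketch: the accumulator and program counter play the role of registers, the arithmetic branches stand in for the ALU, and the dispatch on the opcode stands in for the control unit. Real cores pipeline and overlap these stages across many instructions.

```python
def run(program: list[tuple[str, int]]) -> int:
    """Execute a toy program one instruction at a time and return the accumulator."""
    accumulator = 0      # register holding the working value
    program_counter = 0  # register holding the address of the next instruction

    while program_counter < len(program):
        # Fetch: read the instruction the program counter points at, then advance.
        opcode, operand = program[program_counter]
        program_counter += 1

        # Decode (control unit) and execute (ALU or jump).
        if opcode == "LOAD":
            accumulator = operand
        elif opcode == "ADD":
            accumulator += operand
        elif opcode == "SUB":
            accumulator -= operand
        elif opcode == "MUL":
            accumulator *= operand
        elif opcode == "JNZ":  # jump to `operand` if the accumulator is not zero
            if accumulator != 0:
                program_counter = operand
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode!r}")

    return accumulator


# (2 * 3) + 4
print(run([("LOAD", 2), ("MUL", 3), ("ADD", 4), ("HALT", 0)]))
# Count down from 3 to 0 by jumping back to instruction 1.
print(run([("LOAD", 3), ("SUB", 1), ("JNZ", 1), ("HALT", 0)]))
```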

In the beginning, processors had only one core, but today most processors are multi-core, with two or more cores on one integrated circuit that can handle two or more processes simultaneously. Note that each core works on one instruction stream (thread) at a time unless it supports simultaneous multithreading (SMT). Processors with multiple cores run sets of instructions or programs faster than before by using parallel processing (parallel computing). Having more cores does not automatically increase overall performance, however, because many programs still do not use parallel processing.

- Single-core processors: The oldest type of processor is the single-core processor, which can execute only one instruction stream at a time and is not efficient at multitasking. In this processor, each operation must wait for the previous one to finish, so running more than one program reduces performance significantly. The performance of a single-core processor is mainly judged by its frequency.

- Dual-core processors: A dual-core processor has two cores and can perform roughly like two single-core processors. Whereas a single-core processor has to switch back and forth between streams of data, a dual-core processor can work on two at once, so when several threads are running it handles multiple processing tasks more efficiently.

- Quad-core processors: A quad-core processor divides the workload among four cores, providing more effective multitasking; hence, it is more suitable for gamers and professional users.

- Six-core processors (hexa-core): Another type of multi-core processor is the six-core processor, which handles processes faster than quad-core and dual-core types. For example, some generations of Intel’s Core i7, such as the 8th-generation desktop models, have six cores and are well suited to everyday use.

- Octa-core processors: Octa-core processors have eight independent cores and offer better performance than the previous types. Many of them combine two sets of four cores of different types and divide activities between them, so in many cases only the minimum number of cores needed is used, and the other four cores join in when the workload demands it.

- Ten-core processors (deca-core): Ten-core processors have ten independent cores and are more powerful than the types above at executing and managing processes. They handle multitasking especially well, and more and more of them are reaching the market.

Difference between single-core and multi-core processing

In general, the choice between a processor with powerful single-core performance and a multi-core processor with ordinary per-core performance depends on how you use it; there is no one-size-fits-all answer. Strong single-core performance matters for software that does not need, or cannot use, multiple cores. More cores do not necessarily mean more speed, but a program optimized for multiple cores will run faster with more of them. If you mostly use applications optimized for single-core processing, you probably won’t benefit from a processor with a large number of cores.

Say you want to take 2 people from point A to point B: a Lamborghini will do the job just fine. But if you want to transport 50 people, a bus can be faster than many Lamborghini trips. The same goes for single-core versus multi-core processing.
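The Lamborghini-versus-bus trade-off is captured by Amdahl’s law, a standard rule for estimating parallel speedup: if a fraction p of a program’s work can run in parallel on n cores, the speedup is at most 1 / ((1 - p) + p / n). The parallel fractions in this Python sketch are hypothetical, and the law ignores real-world overheads such as synchronization and memory contention, so treat the results as upper bounds.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup from Amdahl's law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_part = 1.0 - parallel_fraction  # work that only one core can do
    return 1.0 / (serial_part + parallel_fraction / cores)


for p in (0.0, 0.5, 0.95):
    speedups = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (2, 4, 8, 16))
    print(f"{p:.0%} parallel -> {speedups}")
```

A program with no parallel work gains nothing from extra cores, and a half-parallel program can never exceed a 2x speedup however many cores it gets; only heavily parallel workloads, like the bus full of passengers, keep benefiting.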

In recent years, with advances in technology, processor cores have become ever smaller, so more cores can fit on a processor chip. The operating system and software must also be optimized to divide instructions among these cores and execute them simultaneously; if this is done correctly, the performance gains can be impressive.

In traditional multi-core processors, all cores were implemented the same and had the same performance and power rating. The problem with these processors was that when the processor is idle or doing light processing, it is not possible to lower the energy consumption beyond a certain limit. This issue is not a concern in conditions of unlimited access to power sources but can be problematic in conditions where the system relies on batteries or a limited power source for processing.

This is where the idea of asymmetric processor design was born. For smartphones, Arm quickly adopted a design in which some cores are more powerful and deliver better performance, while others are built for low power consumption; these efficient cores are only good for running background tasks or basic applications such as reading and writing email or browsing the web.

High-powered cores automatically kick in when you launch a video game or when a heavy program needs more performance to do a specific task.

Although the combination of high-power and low-consumption cores in processors is not a new idea, using this combination in computers was not so common, at least until the release of the 12th generation Alder Lake processors by Intel.

Each of Intel’s 12th-generation processors has E-cores (efficiency, low power) and P-cores (performance, powerful). The ratio between the two can differ; for example, Alder Lake Core i9 processors have eight cores for heavy processing and eight for light processing, while the Core i7 and Core i5 series use 8P+4E and 6P+4E designs, respectively.

There are many advantages to having a hybrid architecture approach in processor cores, and laptop users will benefit the most, because most daily tasks such as web browsing, etc., do not require intensive performance. If only low-power cores are involved, the computer or laptop will not heat up and the battery will last longer.

Low-power cores are simple and inexpensive to produce, so using them to take load off the powerful, advanced cores seems like a smart idea.

Even when your system is connected to a power source, low-power cores are still useful. For example, if you are gaming and the game needs all of the processor’s power, the powerful cores can meet that need while the low-power cores run background processes and programs such as Skype.

At least in Intel’s Alder Lake processors, the P-cores and E-cores are designed not to interfere with each other, so each can perform tasks independently. Unfortunately, because combining different types of cores is a relatively new concept for x86 processors, this fundamental change to the x86 architecture has brought its share of problems.

Before hybrid cores (a combination of powerful P-cores and low-power E-cores) were introduced, software developers had no reason to make their products compatible with such an architecture. As a result, their software was not aware of the difference between low-power and high-performance cores, which in some cases led to reports of crashes or strange behavior in certain software (such as the Denuvo anti-tamper technology used in games).

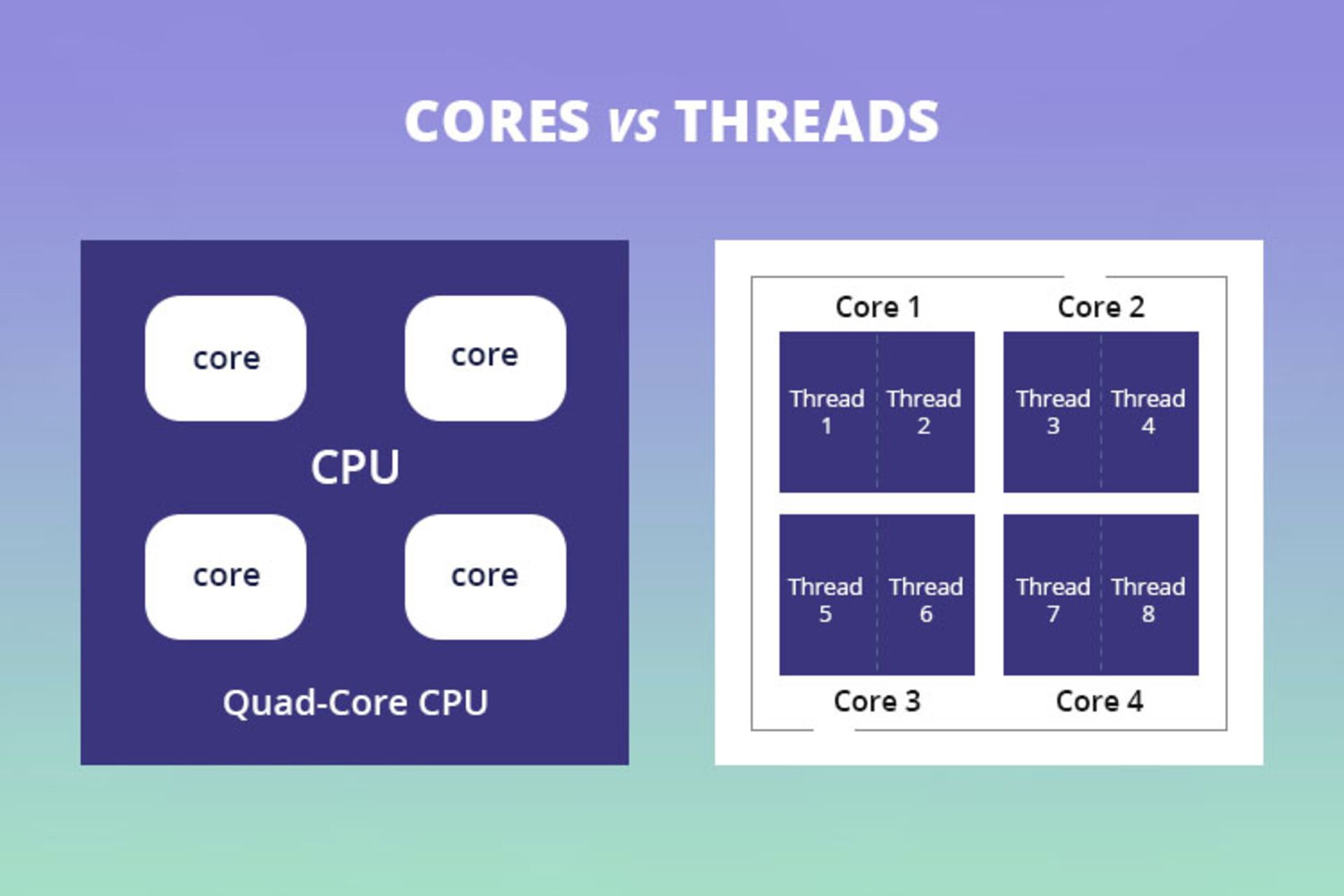

Processing threads

A processing thread is a sequence of instructions sent to the processor for execution. A core normally works on one thread at a time, so if two threads are sent to it, the second has to wait until the first has been executed, which can slow the processor down. To address this, processor manufacturers divide each physical core into two virtual cores (threads), each of which can run a separate processing thread, so a core with two threads can execute two processing threads at the same time.

Active processing versus passive processing

Active processing refers to work that requires the user’s continual, hands-on input to complete; common examples include motion design, 3D modeling, video editing, and gaming. In this type of processing, single-core performance and high clock speed matter most, so fewer but more powerful cores deliver the smoothest performance.

Passive processing, on the other hand, consists of tasks that can usually be run in parallel and left alone, such as 3D rendering and video rendering. Such processing calls for processors with a large number of cores and a higher base frequency, such as AMD’s Threadripper series.

One of the factors that influence passive processing is a high number of threads and the ability to use them. In simple words, a thread is a stream of data and instructions that an application sends to the processor, allowing the processor to handle several tasks at the same time efficiently and quickly; in fact, threads are what let you listen to music while surfing the web.

Threads are not physical components of the processor; they represent how many streams of work the processor cores can handle at once. To execute several very intensive instructions simultaneously, you will need a processor with a large number of threads.

The number of threads in a processor is directly related to its number of cores: each core can usually run two threads, and every process is allocated at least one thread to run on.

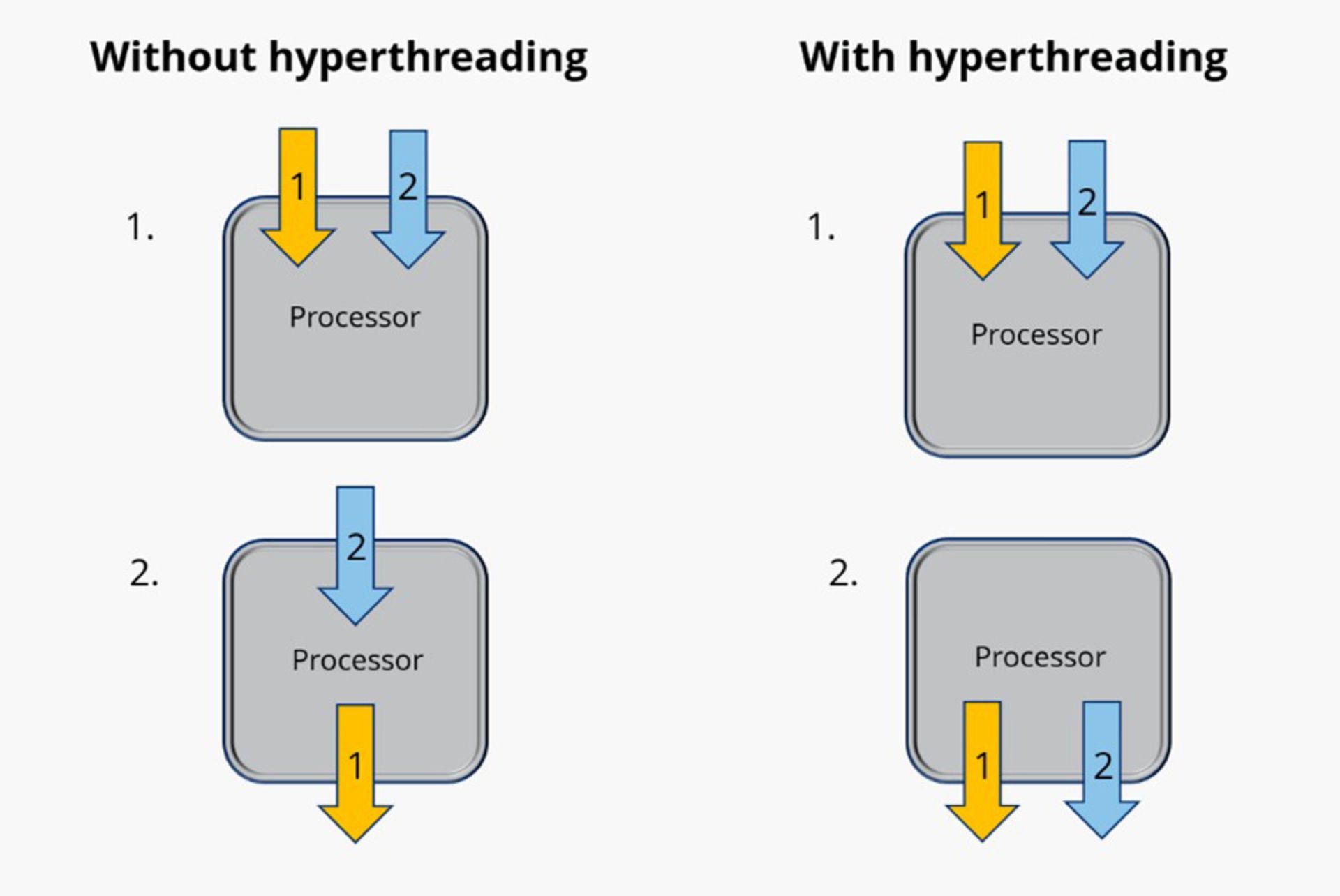

What is hyperthreading or SMT?

Hyperthreading in Intel processors and simultaneous multithreading (SMT) in AMD processors are names for dividing each physical core into virtual cores; in practice, both features let the core schedule and execute the instructions sent to it without interruption.

Today, most processors support hyperthreading or SMT and run two threads per core. However, some low-end processors, such as Intel’s Celeron series and some AMD Ryzen 3 models, do not support this feature and have only one thread per core. Even some high-end Intel processors ship with hyperthreading disabled for reasons such as market segmentation, so it is generally wise to check the cores and threads listed in a processor’s specifications before buying it.
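Counting threads from a spec sheet is simple arithmetic: multiply each group of cores by the threads each core can run. The Python sketch below applies this to the Alder Lake Core i9 layout of eight P-cores and eight E-cores, following Intel’s published specifications for parts such as the Core i9-12900K, in which only the P-cores support hyperthreading; the quad-core chip without SMT is a hypothetical example, and the `CoreGroup` and `total_threads` names are invented for this illustration.

```python
import os
from dataclasses import dataclass
from typing import Sequence


@dataclass
class CoreGroup:
    cores: int
    threads_per_core: int  # 2 with hyperthreading/SMT, 1 without


def total_threads(groups: Sequence[CoreGroup]) -> int:
    """Logical threads a processor exposes: sum of cores x threads per core."""
    return sum(group.cores * group.threads_per_core for group in groups)


# Alder Lake Core i9: P-cores with hyperthreading, E-cores without it.
core_i9_alder_lake = [
    CoreGroup(cores=8, threads_per_core=2),  # P-cores
    CoreGroup(cores=8, threads_per_core=1),  # E-cores
]
# Hypothetical low-end quad-core processor without SMT.
budget_quad_core = [CoreGroup(cores=4, threads_per_core=1)]

print(f"Alder Lake Core i9: {total_threads(core_i9_alder_lake)} threads")
print(f"Budget quad-core:   {total_threads(budget_quad_core)} threads")

# On a real machine, the OS reports its logical processor count (None if unknown).
print(f"This machine: {os.cpu_count()} logical processors")
```

The 16-core Core i9 therefore exposes 24 threads rather than 32, which is exactly why the cores-and-threads line of a spec sheet is worth checking.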

Hyperthreading or simultaneous multithreading helps schedule instructions more efficiently and puts parts of the core that would otherwise sit idle to work. At best, the second thread adds about 50% more performance per physical core, and in many workloads the gain is smaller.

In general, if you mostly do active processing such as 3D modeling, you probably won’t use all of your CPU’s cores, because this type of processing usually runs on only one or two cores; but for processing such as rendering, which needs all of the processor’s cores and threads, hyperthreading or SMT can make a significant difference in performance.

CPU in gaming

Before multi-core processors were released, computer games were developed for single-core systems. But after AMD introduced its first dual-core processor in 2005, followed by four-, six-, and eight-core processors, games were no longer limited in how much they could benefit from additional cores, because processors could now execute several different operations at the same time.

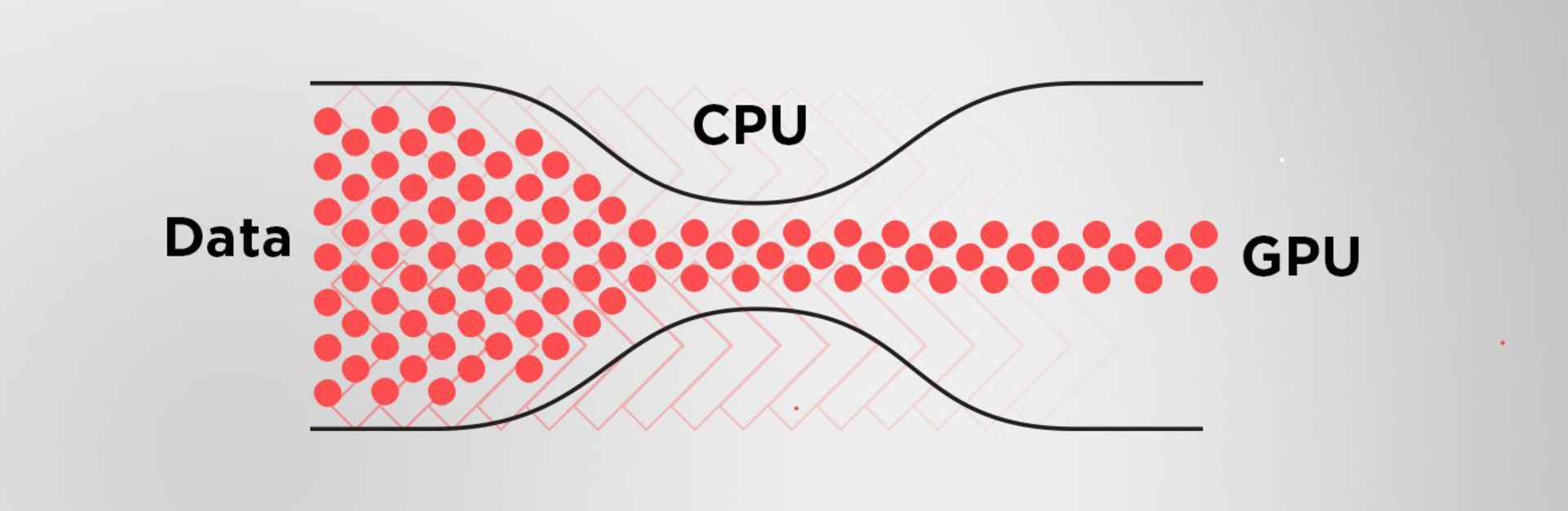

For a satisfying gaming experience, every gamer needs to choose a processor and a graphics processor (which we will examine in a separate article) that are well balanced. If the processor is weak or slow and cannot execute commands fast enough, the graphics card cannot use its full power; of course, the opposite is also true. In such a situation, we say that the processor has become a bottleneck.

What is a bottleneck?

In computing, a bottleneck is a limit on a component’s performance caused by the gap between the maximum capabilities of two hardware components. Simply put, if the graphics unit can process instructions faster than the processor can send them, the graphics unit sits idle until the next set of instructions is ready and renders fewer frames per second; in this situation, graphics performance is limited by the processor.

The same can happen in the opposite direction: if a powerful processor sends commands faster than the graphics unit can process them, the processor’s capabilities are limited by the weaker graphics.
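A rough way to picture this: if the processor can prepare at most X frames per second and the graphics card can render at most Y, the game runs at about the smaller of the two, and the faster component waits. The Python sketch below uses hypothetical frame-rate limits; it is a first-order approximation, since real games overlap CPU and GPU work and their load varies from scene to scene.

```python
from typing import NamedTuple


class FrameEstimate(NamedTuple):
    fps: float
    bottleneck: str         # "CPU", "GPU", or "balanced"
    cpu_utilization: float  # share of the processor's frame capacity in use
    gpu_utilization: float  # share of the graphics card's frame capacity in use


def estimate_frame_rate(cpu_fps_limit: float, gpu_fps_limit: float) -> FrameEstimate:
    """The slower component caps the frame rate; the faster one sits partly idle."""
    fps = min(cpu_fps_limit, gpu_fps_limit)
    if cpu_fps_limit < gpu_fps_limit:
        bottleneck = "CPU"
    elif gpu_fps_limit < cpu_fps_limit:
        bottleneck = "GPU"
    else:
        bottleneck = "balanced"
    return FrameEstimate(fps, bottleneck, fps / cpu_fps_limit, fps / gpu_fps_limit)


# Hypothetical pairings: a slow CPU with a fast GPU, and the reverse.
print(estimate_frame_rate(cpu_fps_limit=90, gpu_fps_limit=144))
print(estimate_frame_rate(cpu_fps_limit=200, gpu_fps_limit=60))
```

In the first pairing, the graphics card uses only 62.5% of its frame capacity because it keeps waiting for the processor; in a balanced system, both utilization figures stay close to each other.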

In fact, a system with a well-matched processor and graphics card gives the user better, smoother performance; such a system is called a balanced system. In general, a balanced system is one in which the hardware does not create bottlenecks for the user’s intended tasks and provides a better experience without using any component disproportionately (too much or too little).

Keep a few points in mind when setting up a balanced system:

- You can’t set up a balanced system for an ideal gaming experience by just buying the most expensive processor and graphics available in the market.

- A bottleneck is not necessarily caused by the quality or age of the components; it depends directly on how well the performance of the system’s hardware is matched.

- Bottlenecks are not specific to high-end systems; balance is also very important in systems with low-end hardware.

- Bottlenecks are not limited to the processor and graphics, but a good match between these two components prevents the problem to a large extent.

Setting up a balanced system

In gaming or graphics processing, when the graphics card is not running at full power, a more powerful processor will noticeably improve the gaming experience, provided the graphics unit and the processor are well matched. The type and genre of the game also matter when choosing hardware. Quad-core processors can still run many games, but six-core (hexa-core) or higher processors will generally give you smoother performance, and multi-core processors are now considered essential for any gaming system used for first-person shooters (FPS) or online multiplayer games.

Technology

7 Windows problems that may lead users to MacOS or Linux

Published 11/11/2024

Many users have used different versions of Windows for years and have been satisfied with most of their features. According to some of them, however, Windows 11 has annoying features that, if not fixed, may push them away from Windows toward other operating systems such as Linux or macOS. For example, it took several years for Control Panel settings to finally move into the Settings app, but that is not as annoying as the other Windows problems described below.

Display annoying ads

Microsoft can almost be said to hold a monopoly on the desktop operating system market. According to Statista, about 72% of personal computers were running a version of Windows as of February 2024, and that is after a decade of decline driven by the growing popularity of macOS: in 2013, Windows had nearly 91% of the market.

Microsoft uses the popularity of Windows to display ads for its other products

Unfortunately, Microsoft uses its dominant position in the operating system market to display annoying ads, such as those shown in the Start menu, on the lock screen, and even during operating system upgrades. Some users believe that Microsoft’s ads in Windows are not only bad for the user experience but could even be considered a form of monopolistic behavior.

Using Windows to advertise Microsoft’s other products resembles the company’s conduct in the late 1990s, which the US Department of Justice challenged in 1998. At that time, Microsoft made Internet Explorer the default browser in Windows while several other companies were competing in the browser market.

Apple, like Microsoft, places ads for its products in Safari, but the browser’s low market share means Apple’s monopolistic behavior has little effect on the operating system market. Google Chrome, meanwhile, remains popular thanks to its features and quality and is still the winner of the browser competition.

To summarize: Microsoft, like other companies, is entitled to advertise its products; but because of its dominant position, it places these ads directly in the Windows operating system, which some users consider unfair and even illegal.

Forced to search in the cloud

Many Windows users prefer the Start menu search tool to pinning for finding their programs, files, or games. Sometimes, however, a search for a program or file also shows ads, purchase offers, and links to previous Bing searches; some users have even said these ads occasionally kept them from finding a program they had already installed. Even Windows ME, with all its instability and bugs, was better than Windows 11 at least when it came to local search!

Fortunately, there is a way to disable ads and suggested links in Windows 11 search, but it requires the Registry Editor, and many people can’t do it without a guide.

Add new buttons to taskbar

Windows has a habit of placing application icons on the taskbar when it introduces a new feature, such as the Widgets and Copilot icons. What bothers some users is that the new icons appear by default and removing them takes an extra step.

The mandatory presence of new application icons in the Taskbar is not pleasant for some users

Forcing new features on users is a common theme in the tech world. These conditions make some users feel that even if they pay for the license, they do not own their Windows, and Microsoft still owns the operating system.

Transferring supporting documents to the web

Microsoft seems to have forgotten that some users may not always have full internet access. Nowhere is this more noticeable than with Windows support documents, which are available only online on the Microsoft website rather than being stored on the device and always available.

Online-only support documentation becomes even more annoying when a help link doesn’t take users directly to the relevant page. These links first open in the Edge browser (even if the user’s default browser is something else) and then search Bing for the related page using the term site:microsoft.com.

Providing support documentation only online is really another way to steer users toward other Microsoft services that almost no one uses. Perhaps Microsoft’s goal is to reduce the installation size of Windows by removing support documents; but that argument would only hold if the documents were not mostly text, since text takes up very little space.

Insist on using Microsoft services

Many long-time Windows users have also tried Microsoft services such as OneDrive, Microsoft 365, and the Edge browser. Some have not had a satisfactory experience with these services and decided to abandon them, yet Microsoft keeps trying to push these services on its users in different ways, just like the support documents mentioned in the previous section. A number of Windows users believe Microsoft should respect users’ choices and stop insisting.

Backing up the desktop in OneDrive

Microsoft has tried to make things easier by adding the ability to back up specific desktop folders to OneDrive, but this feature has become troublesome for people who use Windows on several devices. The reason is that when one computer’s desktop shortcuts are backed up, those shortcuts appear as broken links on the user’s other devices.

It’s true that turning off desktop sync will fix the problem of broken links on other devices, but some users believe that it might be better to disable sync from the start to avoid any hassles.

Remove applications

Unlike other big companies, Microsoft does not usually remove its programs, but the removal of two applications from new versions of Windows has drawn objections from some users. Paint 3D and WordPad, two useful applications, have effectively been retired, and some Windows users miss them.

In today’s world, users’ opinions and convenience should be at the center of any company’s attention. Given the power of Apple’s chips and how easily users get used to Apple’s operating systems, Microsoft may face a decline in its market share in the future if it ignores users’ preferences.

What do you think about these 7 Windows problems? Are these problems bothering you or are you dealing with other problems in Windows?

Samsung Galaxy Ring Review; One ring to rule over all?!

Smart rings are somewhat reminiscent of Fitbit bands such as the Flex 2: wristbands that had no screen and only measured steps and calories burned. Although these bands gradually gave way to more advanced smartwatches and fitness trackers, they have now returned with more precision and features in the form of smart rings.

Before big companies like Samsung entered the smart ring market, the field was mainly in the hands of startups like Oura. However, Oura smart rings have not yet entered the Iranian market, and the Galaxy Ring is the first smart ring from a well-known brand to be released in Iran. Thanks to its accurate sleep-quality tracking, the Galaxy Ring is perhaps ideal mainly for people who are not comfortable wearing a smartwatch while sleeping.

If you have a Samsung watch and phone, the Galaxy Ring fits easily into the Samsung ecosystem and offers a range of features. Users of other Android phones can also use the ring, but its advanced functions, such as daily scores and motion gestures, are only available to Samsung users.

Almost all of the Galaxy Ring’s features are also offered by many cheap smartwatches, perhaps with much higher accuracy than Samsung’s smart ring; the popularity of smart rings like the Galaxy Ring comes mostly from their design and very light weight. Can the Galaxy Ring’s features and attractive design justify its high price? To find out, stay with me until the end of this Galaxy Ring review.

The Galaxy Ring is available in 9 sizes, from 5 to 13, so you can almost certainly find the right size for your finger. To find the right size, Samsung suggests getting a sizing kit before buying, which of course is not an option for users in Iran. Samsung doesn’t specify which finger to wear the ring on; just make sure it fits your finger neither too tightly nor too loosely.

The silver Galaxy Ring has a distinctive, eye-catching look; someone unaware of its smart features will see it simply as a beautiful accessory on your finger. Of course, given how much you have to pay for this ring, you might feel more satisfied if others knew you were wearing a smart, expensive ring.

Apart from silver, the ring also comes in gold and black; but since the gold version looks very much like a wedding ring, black and silver are the more attractive options. The titanium used to make the ring is not only attractive but also scratch-resistant, so you get beauty and durability together.

The Galaxy Ring is so light that, just like an ordinary ring, you sometimes forget you are wearing it. Its light weight also means it does not bother you while sleeping, unlike smartwatches. Thanks to its 10ATM and IP68 ratings, there is no need to take it off when washing your hands, swimming, or showering. These ratings guarantee that the ring will remain undamaged in 1.5 meters of water for half an hour, and that at a depth of 100 meters there will be no danger to the ring for 10 minutes.

To use the Galaxy Ring, connect it to your phone, and use the sensors (which I will discuss in detail later), open the case and make sure the ring’s battery has enough charge. Remove the ring from the charging case and, as I mentioned earlier, put it on your finger so that the sensors sit on the underside of the finger; to guide users, Samsung has added a small protrusion on the ring that should be positioned under your finger.

Setting up Galaxy Ring is very simple and fast; Just turn on the phone’s Bluetooth and tap Connect after seeing the connection window. After that, you can proceed with the other setup steps through the Samsung Wearables app.

In the inner part of the ring, there are grooves that host the sensors. One of these sensors is an accelerometer that tracks movement activities such as walking and running. Another sensor designed by the Koreans is called Optical Bio-signal and monitors heart rate. The latest sensor is also responsible for measuring changes in skin temperature during sleep and using this information, it can also track women’s menstrual cycle.

To access all of the Galaxy Ring's features, you need to sign in to the Samsung Health app. The app comes preinstalled on Samsung phones; if you don't have a Samsung phone, you can download it from the Play Store. However, as mentioned at the beginning of the article, some features are available only to users who pair the ring with a Samsung phone.

At Zomit, we usually compare gadgets with their competitors to evaluate their performance. Since smart rings are not yet common in the Iranian market, however, there was no direct competitor to compare against. Fitness bands and smartwatches are a good benchmark for the accuracy of the Galaxy Ring's sensors, so we tested the ring against the Xiaomi Smart Band 8. The ring left us very hopeful in some areas and thoroughly disappointed in others.

The first thing we checked was how accurately the Galaxy Ring monitors sleep quality. Since many people will probably buy a smart ring to track their sleep, Samsung puts special emphasis on this area and has clearly invested time in it. The ring performed very well here. Interestingly, it can detect signs of snoring before anyone else notices and alert the user.

In contrast to sleep monitoring, the Galaxy Ring's stress-level readings were not accurate enough: across several tests, it gave a different result each time. Xiaomi's band, by comparison, delivered more stable and reliable results. So if accurate stress tracking matters to you, the Galaxy Ring is not a reliable option, at least not yet. The same applies to step counting and telling walking apart from running, unless Samsung improves sensor accuracy with a software update.

The ring can automatically detect workouts and record key information such as exercise duration, calories burned, and steps taken, but you first need to enable automatic workout tracking. The full list of supported activities and the details of recorded workouts are available in the Samsung Health app.

One of the Galaxy Ring's interesting features is gesture support. It is not enabled by default; you have to turn it on in the Galaxy Wearable app. Once it is enabled, you can take a photo by pinching your index finger and thumb together twice. Fortunately, the gesture can be customized: you can use it to dismiss an alarm, for example, which is far more useful than taking photos. As mentioned, this feature is enabled through the Wearable app and is available only to Samsung phone users.

Another Samsung-exclusive feature, Find My Ring, improves your chances of recovering the ring if you lose it. It shows the ring's location on a map and makes its LEDs blink, so you can find it with little effort. This can be a lifesaver if you lose the ring somewhere unfamiliar.

Samsung phone users get other advantages too: longer battery life and more accurate sensors. If you also own a Samsung smartwatch, you are a step further ahead, because you can split tasks between the ring and the watch. For example, the watch can measure heart rate and stress levels while the Galaxy Ring handles only sleep tracking; that way, both devices last longer on a charge.

Samsung says that if you wear the ring continuously, Galaxy AI analyzes your health data and makes recommendations to improve your health; for example, it notifies you if it detects an irregular heartbeat. Since the ring has no built-in speaker or vibration motor, these alerts arrive as notifications on your phone. In the Energy Score section of the Samsung Health app, the Galaxy Ring gives you a score from 0 to 100 based on your sleep data, heart rate, and the previous day's activity, so you can keep a closer eye on your activities.

The Galaxy Ring's battery capacity depends on its size: Samsung lists 18 mAh for the smaller sizes and 23.5 mAh for the larger ones, and claims up to 7 days of battery life with round-the-clock wear.

In our tests, 24 hours of use, with various parameters being measured constantly, drained only 2% of the battery. Samsung's claim therefore holds up: even with continuous use, the ring lasts 6 to 7 days. Unlike the ring, the charging case has the same battery capacity, 361 mAh, across all sizes.

The charging case has a USB Type-C port for recharging and also supports wireless charging. Samsung says the ring charges to 40% in 30 minutes. A rough calculation suggests that a full charge takes about one hour and 15 minutes, which is reasonable given the ring's week-long battery life.
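The rule-of-thumb estimate above is a straight linear extrapolation of Samsung's 40%-in-30-minutes figure. The short Python sketch below reproduces that arithmetic; `estimate_full_charge_minutes` is a hypothetical helper, not anything Samsung publishes, and it assumes a constant charging rate. Lithium-ion cells usually charge more slowly as they approach full, so the real time may be somewhat longer.

```python
def estimate_full_charge_minutes(charged_percent: float, elapsed_minutes: float) -> float:
    """Linearly extrapolate the time needed to reach 100% charge.

    Assumes a constant charging rate, which is a simplification:
    real batteries usually slow down near full.
    """
    if charged_percent <= 0:
        raise ValueError("charged_percent must be positive")
    minutes_per_percent = elapsed_minutes / charged_percent
    return minutes_per_percent * 100


# Samsung's figure: 40% in 30 minutes
minutes = estimate_full_charge_minutes(40, 30)
hours, mins = divmod(int(minutes), 60)
print(f"{minutes:.0f} min ≈ {hours} h {mins} min")  # 75 min ≈ 1 h 15 min
```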

If the Galaxy Ring is paired with a Samsung phone, you can check its remaining charge in the Wearable app, but Samsung offers other ways as well. Pressing the button in the middle of the case lights up the LEDs around the ring and shows its approximate charge level. If the ring is not in the case, pressing the same button shows the case's own charge level. Below 15%, the lights glow red; otherwise, they glow green.
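The button behavior described above boils down to a small piece of decision logic. The following Python sketch models it as described in this review; `case_indicator` and its parameters are hypothetical names, and this is an illustration, not Samsung's firmware.

```python
def case_indicator(ring_in_case: bool, ring_percent: int, case_percent: int) -> tuple[str, str]:
    """Return (whose charge is shown, LED color) when the case button is pressed.

    Hypothetical model of the behavior described in the review.
    """
    # With the ring docked, the LEDs report the ring's charge; otherwise, the case's.
    if ring_in_case:
        device, percent = "ring", ring_percent
    else:
        device, percent = "case", case_percent
    # Below 15% the LEDs turn red; at 15% or above they glow green.
    color = "red" if percent < 15 else "green"
    return device, color


print(case_indicator(True, 10, 80))   # ('ring', 'red')
print(case_indicator(False, 10, 80))  # ('case', 'green')
```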

As mentioned earlier, smart rings are not yet as common in the Iranian market as they should be, and the Galaxy Ring is one of the first to arrive. If you want a Samsung ring just for sleep tracking and money is no concern, you can buy it with confidence; just keep in mind that it is not very reliable in areas such as stress measurement.

Setting aside the ring's relatively low accuracy in some areas, its very high price is the biggest consideration. For around $400 you can buy one of the best smartwatches and get many more features; as long as the ring stays in this price range, it is not worth buying. What do you think of the Galaxy Ring?

Positive points

- High accuracy in monitoring sleep quality

- Support for motion gestures

- Strong titanium body

- Light, comfortable, and minimal

- Excellent battery life

Negative points

- Limited features for users outside the Samsung ecosystem

- Low accuracy in stress level measurement

- Very high price

Apple Intelligence Review

Ten or eleven years ago, when Siri's chief scientist sat down to watch the movie Her for the second time, he tried to understand what it was about Samantha, the film's artificial intelligence character, that made the protagonist fall in love with her without ever seeing her. The answer was clear to him: Samantha's voice sounded completely natural rather than robotic. That is why Siri in iOS 11, released about four years later, got a human-sounding voice.

But Samantha was more than a natural voice; she was so intelligent that you believed she could really think. Siri in iOS 11 was likewise supposed to be more than a natural voice, or at least that is what Apple wanted us to believe. In the demo Apple released that year to showcase its plans for Siri, it portrayed an ordinary day in the life of Dwayne Johnson and his best friend, Siri. While working out and tending to his pottery, Johnson asks Siri to check his calendar and reminders, book him a Lyft, read his emails, and pull up photos of the clothes he designed from his gallery. Finally, we see The Rock in a spacesuit, floating in space, asking Siri to start a FaceTime call and take a selfie with him.

In almost all of its more or less exaggerated Siri ads, Apple presented its voice assistant as a constant, useful companion that could handle anything without us having to open an app ourselves. Siri mattered so much to Apple that Phil Schiller called it “the best feature of the iPhone” at the iPhone 4S unveiling and said we would soon be able to ask Siri to do our tasks for us.

But that “soon” has taken 13 years, and we still have to wait at least another year to see the “real Siri” shown in the demos: a Siri that gets to know the user by observing how they interact with the iPhone, sparing us from opening many apps every day.

For now, the “smarter” Siri in the iOS 18.2 beta gives us ChatGPT integration and a tool called Visual Intelligence, which combines something like Google Lens with ChatGPT's image analysis. To use Apple's image generators, such as Image Playground, Genmoji, and Image Wand, you must join a waiting list, with approvals rolling out over the coming weeks.

- Siri with ChatGPT seasoning

- Visual Intelligence: only for iPhone 16

- And finally: the magic eraser for the iPhone

- Artificial intelligence writing tool

- Detailed features: from smart gallery search to the new Focus mode

- The most exciting features… yet to come!

All told, Apple Intelligence not only joined the artificial intelligence hype later than its competitors and currently has almost no new or unique features to offer, but it is also perhaps the most incomplete product Cupertino has ever offered its users.

Still, better late than never, and the future of Apple Intelligence looks even more exciting than its current state.

Siri with ChatGPT seasoning

Integrating Siri with ChatGPT means that, instead of relying on Google to answer complex requests, Siri can now turn to OpenAI's popular chatbot. By default it asks your permission first; for faster responses, you can turn this off by disabling “Confirm ChatGPT Requests” in the ChatGPT section of Settings.

The answers are of the quality we expect from ChatGPT, and Apple even offers to download the ChatGPT app from the Apple Intelligence & Siri section of Settings. The good news is that using ChatGPT this way is free and requires no account. If you have a Pro account, you can sign in to the app; if you don't, OpenAI won't be able to save your requests and use them to train its chatbot later.

Screenshots: the Apple Intelligence beta, and Siri requesting permission to use ChatGPT. To use ChatGPT, you will probably need to change your IP address.

Siri's real intelligence lies in deciding for itself which requests to answer, which to pass to Google, and which to hand to ChatGPT. For example, Siri answers a question about the weather itself, usually leaves a question about the day's news to Google, and sends requests to generate text or images to ChatGPT. And if you get impatient and start your question with “Ask ChatGPT”, Siri goes straight to the chatbot.
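The routing behavior described above can be pictured as a simple dispatcher. The Python sketch below is only a toy model: Apple has not published how Siri makes this decision, and the keyword lists, the `Handler` enum, and the `route` function are hypothetical stand-ins chosen to mirror the examples in this review.

```python
from enum import Enum


class Handler(Enum):
    SIRI = "Siri"
    GOOGLE = "Google"
    CHATGPT = "ChatGPT"


# Hypothetical keyword lists: a crude stand-in for whatever classifier Apple really uses.
GENERATE_WORDS = ("write", "draw", "generate", "create an image")
NEWS_WORDS = ("news", "headline")


def route(request: str) -> Handler:
    text = request.strip().lower()
    # An explicit "Ask ChatGPT" prefix skips the decision entirely.
    if text.startswith("ask chatgpt"):
        return Handler.CHATGPT
    # Requests to produce text or images go to ChatGPT.
    if any(word in text for word in GENERATE_WORDS):
        return Handler.CHATGPT
    # Questions about current news are usually left to Google.
    if any(word in text for word in NEWS_WORDS):
        return Handler.GOOGLE
    # Everything else in this toy model, e.g. weather questions, stays with Siri.
    return Handler.SIRI


for q in ("What's the weather in Tehran?", "What's in the news today?",
          "Write a poem about autumn", "Ask ChatGPT about the weather"):
    print(q, "->", route(q).value)
```

A real assistant would rely on a language model rather than keyword matching; the point here is only the three-way split plus the explicit override.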

Screenshots: a request answered by Siri, one answered by Google, and one answered by ChatGPT.

The new Siri feels good, and the keyboard's color change and the glowing halo around the screen when you interact with Siri are eye-catching. More importantly, we no longer have to call Siri by name: double-tapping the bottom of the screen brings up the keyboard so you can type your request (a feature shy people like me appreciate). Still, using chatbots, even the most famous one, is nothing new, and Siri's connection to ChatGPT is unlikely to excite anyone, at least for now.

We have to wait until 2025 for the story to get exciting, when, as Apple has promised, the “real Siri” will finally spare us from dealing with different apps.

Visual Intelligence: only for iPhone 16

Apple Intelligence is available only on the iPhone 15 Pro and later, and on iPads and Macs with M-series chips. Access to Visual Intelligence in iOS 18.2 is even more limited: it is available only on the iPhone 16 series, because it depends on the Camera Control button.

Visual Intelligence is a grand name for a feature that Apple did not pioneer; we experienced it long ago with Google Lens (the Circle to Search feature on Samsung phones is similar). Pressing and holding the Camera Control button opens the camera view. If you tap Search on the right, whatever the camera sees is looked up in Google Images to find similar images on other websites. If you choose Ask on the left side of the screen, ChatGPT steps in and analyzes the image for you.