Space

Why is it still difficult to land on the moon?

Published

4 days ago


This year, both the private company SpaceIL and the Indian Space Research Organisation (ISRO) lost their spacecraft while trying to land on the surface of the moon. Given the astonishing leaps of recent decades in computing, artificial intelligence, and other technologies, landing on the moon ought to be easier now; but these setbacks show that safe, trouble-free landings on Earth’s only moon are still a long way off.

Fifty years after the first man was sent to the surface of the moon, the question is why safely landing a spacecraft on Earth’s nearest cosmic neighbor is still so difficult for space agencies and private space companies. Stay with Zoomit as we look at the answer.

Why is the lunar landing associated with 15 minutes of fear?

Despite the complexities of any space mission, sending an object from Earth into orbit around the moon is relatively easy today. Christopher Riley, director of the 2007 documentary In the Shadow of the Moon and author of the book Where Once We Stood (2019), both of which deal with the history of the Apollo missions, explained the difficulty of landing on the moon in an interview with Digital Trends. According to him: “Today, the paths between the Earth and the Moon are well known, and it is easy to predict them and fly inside them.”

Chandrayaan 2 mission launch

However, the real challenge is taking the spacecraft out of orbit and setting it down on the lunar surface. Because communication between the Earth and the moon is delayed, the controllers on Earth cannot fly the spacecraft manually through a safe landing. As a result, the spacecraft must descend autonomously, firing its descent engines to slow from thousands of kilometers per hour to about one meter per second so that it can touch down safely on the lunar surface.
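To put a rough number on the communication delay described above, the following minimal Python sketch computes the light-travel time between the Earth and the moon. The mean distance of 384,400 km and the example speed of 1,700 m/s are illustrative assumptions, not figures from the article, and a real link adds processing and relay latency on top of this lower bound.

```python
# Lower bound on the Earth-moon signal delay (light-travel time only).
SPEED_OF_LIGHT_KM_S = 299_792.458        # exact, by definition of the meter
MEAN_EARTH_MOON_DISTANCE_KM = 384_400.0  # assumed average distance


def one_way_delay_s(distance_km: float = MEAN_EARTH_MOON_DISTANCE_KM) -> float:
    """Time for a radio signal to cross the given distance, in seconds."""
    return distance_km / SPEED_OF_LIGHT_KM_S


def round_trip_delay_s(distance_km: float = MEAN_EARTH_MOON_DISTANCE_KM) -> float:
    """Time to see an event on the moon and get a command back to it."""
    return 2.0 * one_way_delay_s(distance_km)


def distance_covered_m(speed_m_s: float, delay_s: float) -> float:
    """How far a spacecraft moves at constant speed while a signal is in flight."""
    return speed_m_s * delay_s


if __name__ == "__main__":
    delay = round_trip_delay_s()
    print(f"One-way delay:    {one_way_delay_s():.2f} s")
    print(f"Round-trip delay: {delay:.2f} s")
    # 1,700 m/s is a hypothetical speed early in the descent, used only for scale.
    print(f"Distance covered at 1,700 m/s: {distance_covered_m(1700.0, delay):.0f} m")
```

Even this best-case round trip of roughly two and a half seconds means a lander moving at orbital-class speeds covers kilometers before a ground command could take effect, which is one reason the final descent is automated.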

For this reason, the head of the Indian Space Research Organisation (ISRO), which tried to land the Vikram lander last month, described the spacecraft’s final descent as “a frightening 15 minutes”: as soon as the spacecraft enters the landing phase, it is out of the hands of mission control. The controllers can only watch the descent and hope that everything goes according to plan, that hundreds of commands are executed correctly, and that the automatic landing systems bring the spacecraft gently down to the surface of the moon.

The Great Unknown: The Landing Surface

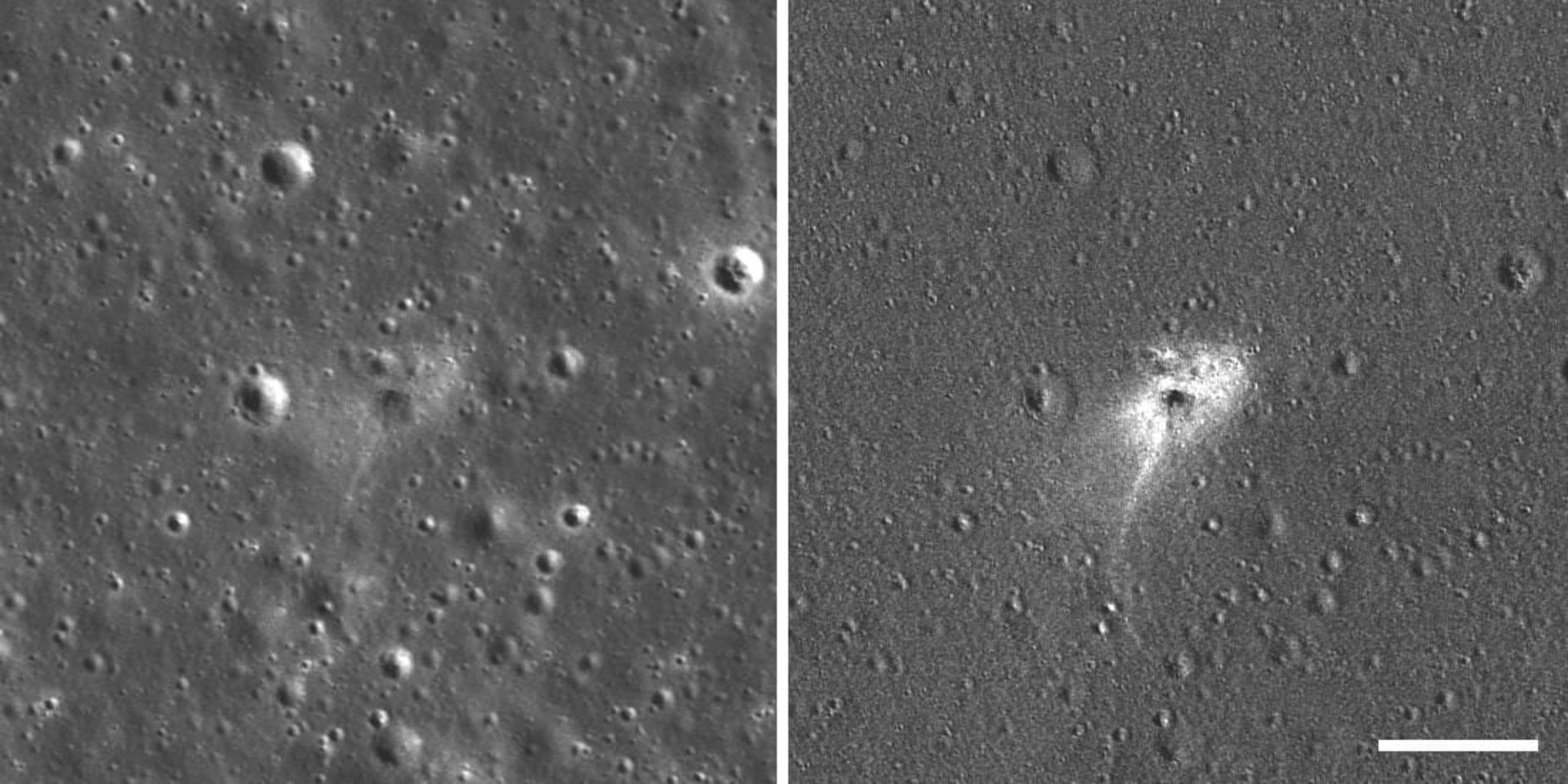

One of the biggest challenges in the final descent phase is knowing what the landing site is really like. Even with instruments such as the Lunar Reconnaissance Orbiter (LRO), which captures amazing views of the lunar surface, it is still difficult to know what kind of surface a spacecraft will encounter when it touches down.

Left: The Beresheet crash site. Right: The ratio of the before and after images highlights subtle changes in surface brightness.

Leonard David, author of Moon Rush: The New Space Race (2019) and veteran space reporter, says:

The Lunar Reconnaissance Orbiter is a very valuable asset that has performed really well over the years. But when you get within a few meters of the surface of the moon, complications appear that cannot be seen even with the very powerful LRO camera.

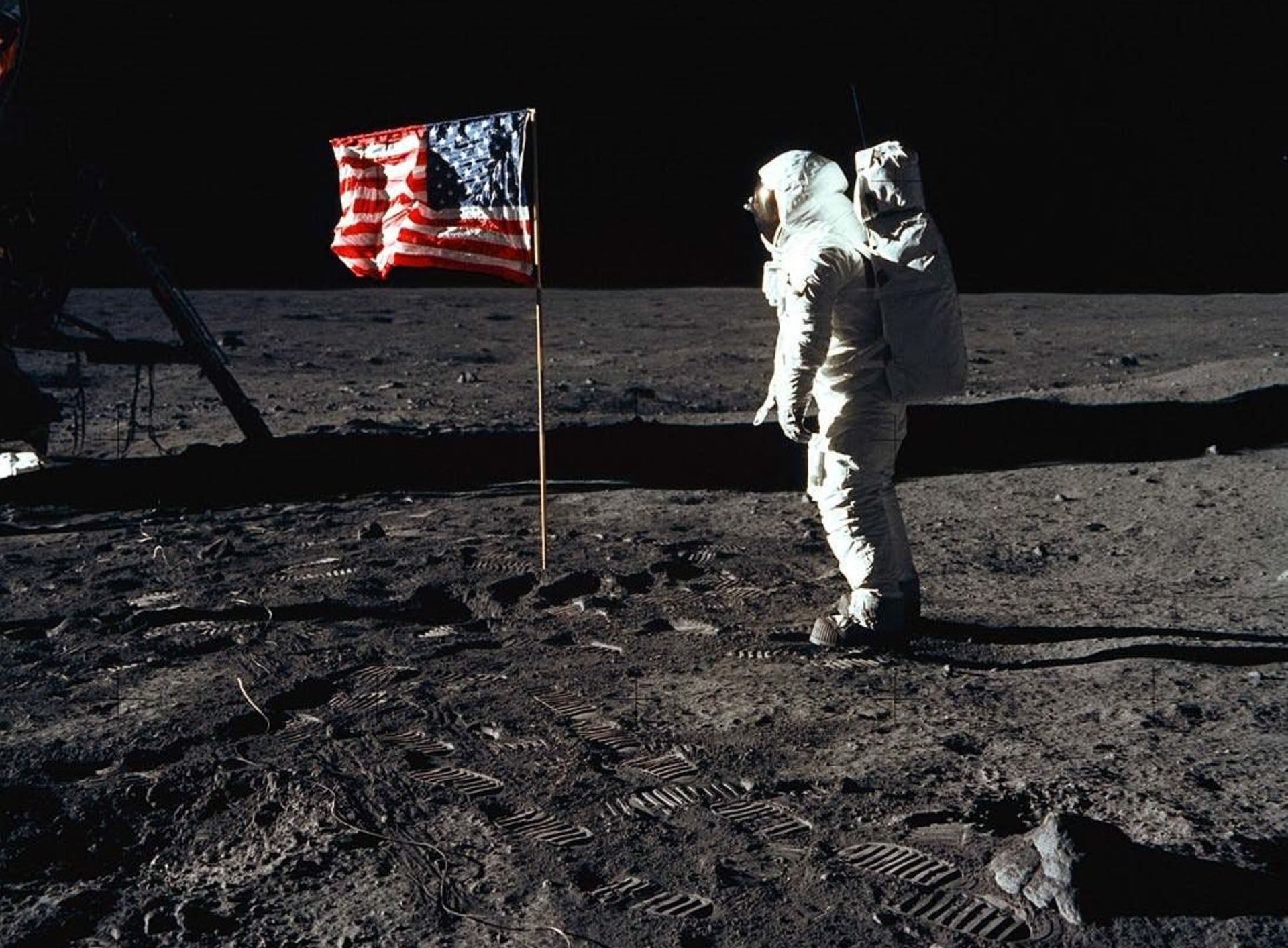

Even today, despite the imaging data available, “some landing sites still hold unknowns,” Riley says. He notes that the Apollo 11 mission had an advantage that today’s uncrewed landers lack: the eyes of an astronaut who could observe the landing site up close. In that mission, which put the first man on the moon, the computer of the Eagle lunar module was guiding the spacecraft toward an area full of boulders; to avoid hitting the rocks, Armstrong took control himself and set the spacecraft down on a flat surface.

Uneven ground at the landing site also caused problems in earlier lunar missions such as Apollo 15. On that mission, the astronauts were told to shut down the engine as soon as the spacecraft touched the surface, to keep dust from being sucked in and to avoid the risk of a blowback explosion. But the Apollo 15 lander came down on the rim of a crater, so one of its legs touched the surface earlier than the others. When the crew shut down the engine, the spacecraft, still moving at 1.2 meters per second, landed hard. It came to rest at an oblique angle, and although it ultimately landed safely, it nearly tipped over, which would have been a fatal disaster.


The difficult landing of Apollo 15 points to another complicating factor in lunar landings: lunar dust. The moon is covered with dust that is kicked up by any movement and sticks to everything it touches. As a spacecraft approaches the surface, its engines raise huge plumes of dust that limit the field of view and endanger the spacecraft’s electronics and other systems. We still have no solution to the dust problem.

A feat that has been achieved before

Another reason the moon landing remains a challenge is that public support for lunar projects is hard to win. Referring to Neil Armstrong and Buzz Aldrin, the two astronauts who walked on the moon during the Apollo 11 mission, David says, “We convinced ourselves that we had sent Neil and Buzz [to the surface of the moon]. As a result, when it comes to lunar missions, people may say we’ve been there before and we’ve had this success.”

But in reality, we still understand very little about the moon, especially where long-duration missions are concerned. And with a 50-year gap between the Apollo missions and NASA’s upcoming Artemis program, much of the knowledge gained has been lost as engineers and specialists retired. David says:

We need to recover our ability to travel into deep space. We haven’t gone beyond low Earth orbit since Apollo 17 in 1972. NASA is no longer the same organization that put men on the moon, and there is a whole new generation of mission operators.

The importance of redundancy

As the first private spacecraft to enter orbit around the moon, SpaceIL’s Beresheet was a project of considerable importance; but its failure to make a soft landing means that landing on the moon remains an achievement held only by governments. Still, we can expect more private companies to target the moon in the future, such as Jeff Bezos’ Blue Origin, which is developing its own lunar lander. According to Elon Musk, even SpaceX’s giant Starship, which is being built with the ultimate goal of sending a human mission to Mars, will be able to land on the moon.

According to David, the participation of private companies in lunar landings has advantages such as increased innovation. However, companies are under pressure to save money, and this can lead to a lack of the redundancy and backup systems that are essential when errors and malfunctions occur. Lunar landers typically include two or even three layers of backup systems. David is concerned that private companies will be tempted to eliminate these redundancies in order to cut costs.

Crew Dragon SpaceX passenger capsule

“We saw Elon Musk’s Dragon capsule catch fire after a failed test on the stand,” says David, referring to the explosion of the uncrewed SpaceX spacecraft in April. “This accident was kind of a wake-up call about how unpredictable the performance of spacecraft can be.” David compared the Crew Dragon incident to the Apollo 1 disaster, which killed three NASA astronauts during a prelaunch test in 1967.

Another problem with a lack of redundant systems is the lack of information when something goes wrong. As for the recent landings, the SpaceIL crash appears to have been caused by human error; however, it is not clear what caused Chandrayaan 2 to fail in its soft landing, and without the systems needed to record and send information from the lander, we may never find out the root cause of the failure. Without the required data, it becomes much more difficult to prevent the same problems from recurring.

The future of lunar landings

Currently, many projects are underway to facilitate future moon landings. Ultimately, we need to be able to build the necessary infrastructure for a long-term stay on the moon.

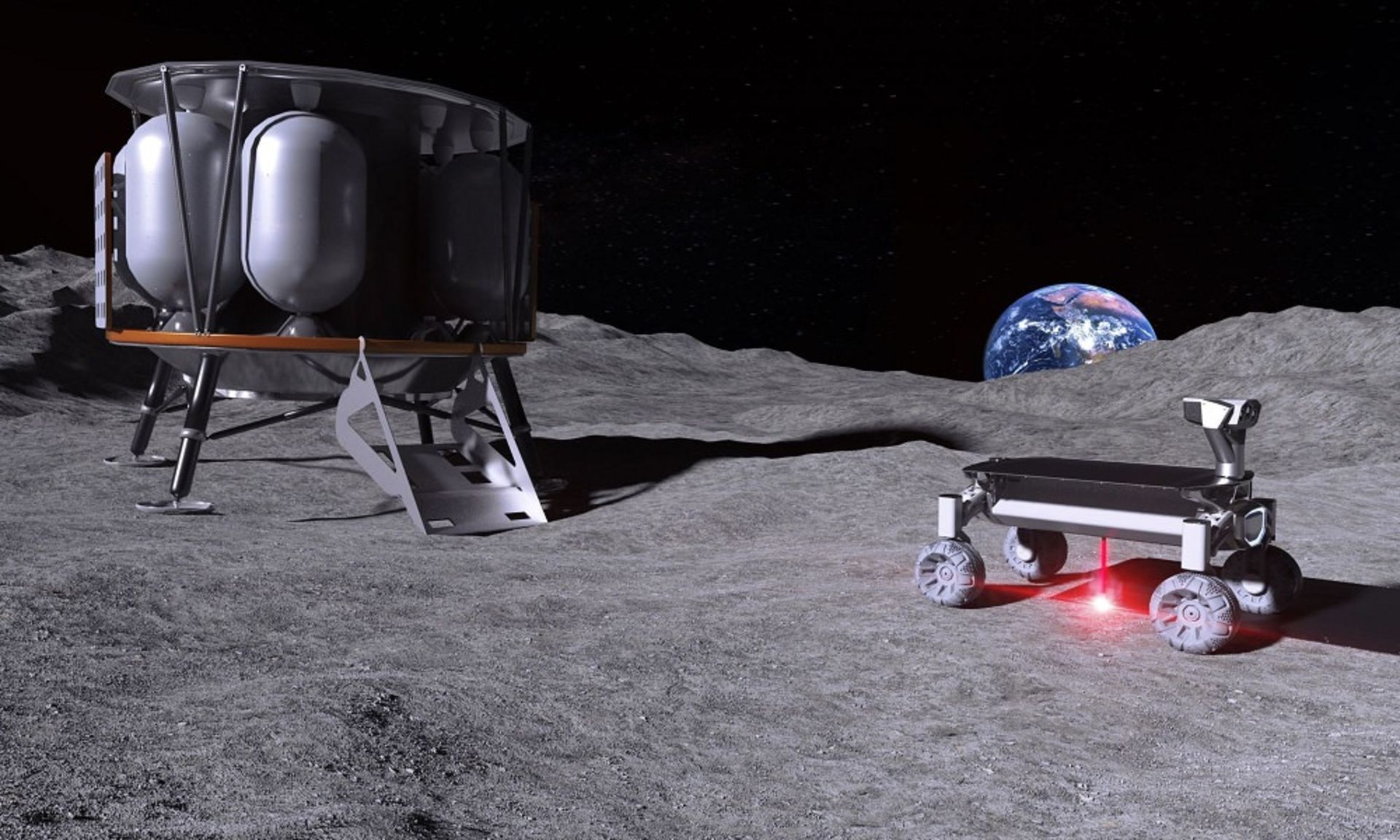

Conceptual design of Moonrise technology on the moon. On the left side is the Alina lunar module, and on the right side, the lunar rover equipped with Moonrise technology melts the lunar soil with the help of a laser.

If we can make long-term stays on the moon possible, or even build a permanent base there, landing spacecraft on the lunar surface will become much easier. By building landing pads, we can create a flat, safe surface free of unknown debris for landers to touch down on. For example, researchers at NASA’s Kennedy Space Center are investigating the feasibility of using microwaves to melt the lunar soil (regolith) and turn it into a hard foundation that could serve as a landing and launch pad. The European Space Agency is also investigating how to use 3D printing to create landing pads and other infrastructure on the moon.


Other ideas include using lidar remote sensing systems for landing. Lidar is similar to radar, but it uses lasers instead of radio waves. Lidar technology provides more accurate readings and uses a network of GPS satellites to help guide the spacecraft during landing.

The problem of public support

As important as technology is, public interest and support are essential to the success of the lunar landing program. “Apollo had enormous resources that are perhaps only comparable today to China’s space program,” says Riley. “Remember that Apollo carried the best computer imaginable, the human brain.” It goes without saying that there is an element of luck involved in every landing.

US Vice President Mike Pence speaking at the 50th anniversary of the Apollo 11 mission

Finally, there is the question of what kind of failure is acceptable for people. David says:

I think we have to be serious about the fact that we’re probably going to lose people. There is a serious possibility that a crewed lunar lander will crash and kill the astronauts inside. The American people continued to support NASA despite the failures and bad luck of the Apollo program, but at that time there was a lot of pressure to compete with the Soviet Union. Without the bipolar atmosphere of the Cold War and the space race, would people still support missions that put human lives at stake?


Europa Clipper, NASA’s flagship probe, has launched

After years of waiting, NASA’s Europa Clipper probe was finally launched on Monday at 7:36 p.m. Iran time from the Kennedy Space Center on top of SpaceX’s Falcon Heavy rocket and began a major astrobiology mission to Europa, the potentially habitable moon of Jupiter.

As SpaceX’s massive rocket, powered by 27 Merlin engines, lifted off from pad 39A, NASA launch commentator Derrol Nail said, “The launch of Falcon Heavy with Europa Clipper will reveal the secrets of the vast ocean hidden beneath the icy crust of Jupiter’s moon Europa.”

The engines of Falcon Heavy’s two side boosters shut down, and the boosters separated from the central core, approximately three minutes into the flight. The central core kept firing for another minute, and in the fourth minute of the launch the separation of the upper stage from the first stage was confirmed. Finally, 58 minutes later, Europa Clipper was injected into its interplanetary trajectory as scheduled. A few minutes after that, the mission team made contact with the probe, and people in the control room cheered and applauded.

Falcon Heavy’s unique launch

The launch of NASA’s new probe was delayed by a setback. NASA and SpaceX initially planned to launch the Europa Clipper mission on Thursday, October 10; but with powerful Hurricane Milton hitting Florida’s Gulf Coast on Wednesday evening, a delay became inevitable. NASA shut down Kennedy Space Center to ride out the storm, and Europa Clipper was secured inside SpaceX’s hangar near Launch Pad 39A.

The recent launch was Falcon Heavy’s 11th flight overall and its second interplanetary mission. It was also the first Falcon Heavy flight on which all three first-stage boosters were expended rather than recovered.

Typically, Falcon Heavy and Falcon 9 first-stage boosters hold back enough fuel to perform landing maneuvers so that they can be recovered and reused; but Europa Clipper needed all the power Falcon Heavy could provide to get on its way to the Jupiter system.

A long way to the launch pad

In late 2015, the US Congress directed NASA to launch Europa Clipper on the Space Launch System (SLS), NASA’s massive rocket. SLS was still under development at the time and was several years away from reaching the launch pad. Delays in completing the rocket, together with NASA’s need to assign at least the first three SLS vehicles to the Artemis lunar program, left the Europa Clipper launch date clouded in uncertainty.

In the 2021 House budget draft for NASA, the agency was directed to launch Europa Clipper by 2025 and, if possible, on SLS. However, because the Space Launch System was unavailable, NASA had to turn to SpaceX’s Falcon Heavy. This decision was not without cost. As the most powerful rocket ever used in an operational mission, SLS could have sent Europa Clipper directly to the Jupiter system in less than three years.

Europa Clipper will use the gravitational assistance of Mars and Earth on its way to the Jupiter system

Now, even with Falcon Heavy in fully expendable mode, Clipper’s trip to Europa will take almost twice as long. The probe is due to fly by Mars in February 2025 and Earth in December 2026 to gain enough speed to reach its destination in April 2030.

Rocket problems were not the only obstacles Europa Clipper faced on its way to the launch pad. For example, the rising cost of this $5 billion probe forced NASA to cancel one of its science instruments. That instrument, called Interior Characterization of Europa using Magnetometry (ICEMAG), was designed to measure Europa’s magnetic field.

Then in May 2024, NASA found that transistors similar to those used in Europa Clipper, which are responsible for regulating the probe’s electricity, were “failing at lower-than-expected radiation doses.” Following this discovery, NASA conducted more tests on the transistors and finally concluded in late August that these components could support the initial mission in the radiation-rich environment around Jupiter.

Ambitious mission to a fascinating moon

NASA/Jet Propulsion Laboratory-Caltech

Europa Clipper is one of NASA’s most exciting and ambitious flagship missions, and it has impressive specifications. For example, the probe is the largest spacecraft NASA has ever built for a planetary mission. Europa Clipper weighed almost 6,000 kg at launch and, with its huge solar panels unfolded in space, spans more than 30 meters (longer than a basketball court).

Clipper’s destination is a remarkable place as well: Europa, one of Jupiter’s four Galilean moons. The moon is covered with an icy outer shell, which scientists believe hides a vast ocean of salty liquid water. For this reason, Europa is considered one of the best places in the solar system to look for environments that could support alien life.

In early 2012, studies began to look for potential plumes of water rising from Europa’s surface. Some researchers theorize that these plumes, and the vents they erupt from, may contain evidence of life living beneath the moon’s icy crust. However, NASA scientists have made it clear that Europa Clipper is not looking for extraterrestrial life on Europa; rather, the probe will only investigate whether the moon’s subsurface ocean environment has the potential to support life.

“If there’s life on Europa, it’s going to be under the ocean. As a result, we cannot see it,” Bonnie Buratti, senior Europa Clipper scientist at NASA’s Jet Propulsion Laboratory, said in September. “We will be looking for organic chemicals that are prerequisites for life on the surface of the moon,” Buratti added. “There are things we can observe, such as DNA or RNA, but we don’t expect to see them. As a result, [the probe] is only looking for habitable environments and evidence for the ingredients of life, rather than life itself.”


Europa Clipper will collect data using a suite of nine scientific instruments, including visible and thermal cameras, several spectrometers, and special equipment to identify Europa’s magnetic environment. As stated on NASA’s Europa Clipper page, the probe will help scientists achieve three main goals:

- Determining the thickness of Europa’s ice shell and understanding how Europa’s ocean interacts with the moon’s surface.

- Investigating the composition of Europa’s ocean to determine whether it has the materials necessary to form and sustain life.

- Studying the formation of Europa’s surface features and discovering signs of recent geological activity, such as the sliding of crustal plates or the venting of water plumes into space.

Europa Clipper also carries a piece of Earth’s culture out into the solar system. A poem titled “In Praise of Mystery: A Poem for Europa” by Ada Limón, a famous American poet, is engraved in the poet’s own handwriting on a metal plate. In addition, the probe carries a coin-sized chip that contains the names of 2.6 million inhabitants of planet Earth.

6-year journey

Johns Hopkins University Applied Physics

If all goes according to plan, Europa Clipper will enter orbit around Jupiter in April 2030. When the probe gets there, it will use up 50-60% of its 2,722 kg of fuel in an orbit insertion burn lasting 6-8 hours.

The insertion maneuver puts Europa Clipper in an elliptical orbit around the gas giant. A series of long maneuvers will then adjust the trajectory so that the probe can fly by Europa more than 45 times and study it closely. In fact, Europa Clipper will remain in orbit around Jupiter throughout its mission, because the harsh radiation environment near Europa would make it very dangerous for the spacecraft to orbit the moon itself.


The first flyby of Europa will not take place before the spring of 2031. NASA will use the first pass to make further corrections to Europa Clipper’s trajectory in preparation for the probe’s first science campaign. With the start of science flybys in May 2031, Europa Clipper will point its array of sensors at the hemisphere facing away from Jupiter and will approach to within 25 km of the moon’s surface. The second science campaign will begin two years later, in May 2033, over the Jupiter-facing hemisphere of Europa.

The end of the Europa Clipper mission is set for September 2034. At that time, NASA will crash the spacecraft into Ganymede, another Galilean moon of Jupiter. This disposal strategy was chosen because Ganymede is considered a relatively poor candidate to host life, and the mission team wanted to make sure they did not contaminate potentially life-hosting Europa with terrestrial microbes.

Space

Dark matter and ordinary matter can interact without gravity!

Published

3 weeks ago on

01/10/2024


Why is dark matter associated with the adjective “dark”? Is it because it harbors some evil forces of the universe or hidden secrets that scientists don’t want us to know? No, it is not. Such fanciful assumptions may sound appealing to a conspiracy theorist, but they are far from the truth.

Dark matter is called dark because it does not interact with light. When dark matter and light meet, they simply pass through each other. This is also why scientists have not been able to detect dark matter directly so far: it does not respond to light.

However, dark matter does have mass, and mass creates gravity, which means that dark matter can interact with normal matter and vice versa. Such interactions are rare, and gravity is the only known force that causes these two forms of matter to interact.

However, a new study suggests that dark matter and ordinary matter interact in ways other than gravity.

If this theory is correct, it shows that our existing models of dark matter are somewhat wrong. In addition, it can lead to the development of new and better tools for the detection of dark matter.


A new missing link between dark and ordinary matter

Dark matter is believed to have about five times the mass of normal matter in our universe, which helps hold galaxies together and explains some of the motions of stars that don’t make sense based on the presence of visible matter alone.

For example, one of the strongest lines of evidence for the existence of dark matter is the observation of rotation curves in galaxies, which show that stars at the outer edges of spiral galaxies orbit at speeds similar to those of stars near the center. These observations indicate the presence of an invisible mass.

For their study, the researchers examined six ultra-faint dwarf (UFD) galaxies located near the Milky Way. For their mass, these galaxies have far fewer stars than they should, which means they are made up mostly of dark matter.

According to the researchers, if dark matter and normal matter interact only through gravity, the stars in these UFDs should be denser in the centers and more spread out toward the edges of the galaxies. However, if they interact in other ways, the star distribution looks different.

The authors of the study ran computer simulations to investigate both possibilities. When they tested this for all six ultra-faint dwarf (UFD) galaxies, they found that the distribution of stars was uniform, meaning that the stars were spread evenly across the galaxies.

This was in contrast to what would be expected if dark matter and normal matter interacted only through gravity.

What causes this interaction?

The results of the simulations showed that gravity is not the only force that can make dark matter and normal matter interact. Such an interaction has never been observed before, and it could change our understanding of dark matter and dark energy.

However, this study has a major limitation. What caused the interaction between the two forms of matter is still a mystery. While the current study provides tantalizing hints of a novel interaction, its exact nature and underlying causes remain unknown. Hopefully, further research will clarify the details of such interactions.

This study was published in The Astrophysical Journal Letters.

Space

James Webb Space Telescope deepens cosmology’s biggest controversy

Published

4 weeks ago on

26/09/2024

How the James Webb Space Telescope deepens cosmology’s biggest controversy

Summary of the article:

- Almost a century ago, Edwin Hubble discovered the expansion of the universe and calculated its expansion rate, now called the Hubble constant.

- Since Hubble, many groups have tried to measure the expansion rate of the universe. However, the values they obtained differed from the theoretical predictions. This difference is called the Hubble tension.

- Scientists today use three types of stars to measure the expansion rate of the universe: Cepheid variable stars, tip of the red giant branch (TRGB) stars, and J-region asymptotic giant branch (JAGB) stars.

- However, the Hubble tension still exists, indicating that the methods for calculating the Hubble constant suffer from a systematic flaw.

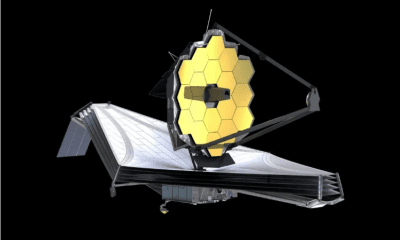

- Researchers hope to be able to use the James Webb telescope in the coming years to achieve more accurate measurements of the universe’s expansion rate and thus resolve the Hubble tension.

Almost a century ago, Edwin Hubble discovered that the universe is getting bigger. However, today’s measurements of how fast the universe is expanding contradict one another. These discrepancies suggest that our understanding of the laws of physics may be incomplete. Everyone expected the sharp eyes of the James Webb telescope to bring us closer to an answer; but a new analysis of the telescope’s long-awaited observations once again yields inconsistent expansion rates from different types of data, while pointing to possible sources of error.

Two competing groups have led efforts to measure the rate of expansion of the universe, known as the Hubble constant, or H0. A group led by Adam Riess of Johns Hopkins University has consistently measured a Hubble constant approximately 8 percent higher than the expansion rate that theory predicts from the known constituents of the universe and the governing equations. This discrepancy, known as the Hubble tension, suggests that the theoretical model of the cosmos may be missing something, such as an ingredient or effect that speeds up the expansion of the universe. Such an element could be a clue to a more complete understanding of the universe.

This spring, Riess and his team published new measurements of the Hubble constant based on data from the James Webb telescope and found a value consistent with their previous estimates. However, a rival group led by Wendy Freedman of the University of Chicago warns that more precise measurements are needed. That team’s measurements of the Hubble constant are closer to the theoretical estimate than Riess’ calculations, suggesting that the Hubble tension may not be real.

Since the James Webb telescope began operating in 2022, the astrophysics community has been waiting for Freedman’s multipronged analysis, based on the telescope’s observations of three types of stars. The results are now in: two of the star types give estimates of the Hubble constant in line with the theoretical prediction, while the third, the same type used by Riess, agrees with his team’s higher estimates. According to Freedman, the fact that the three methods disagree does not point to unknown physics, but to systematic errors in the measurement methods.

Contradictory world

The difficult part of measuring cosmic expansion is measuring the distances to objects in space. In 1912, American astronomer Henrietta Leavitt first showed how pulsating stars known as Cepheid variables can be used to calculate distances. These stars pulsate with a period that is related to their intrinsic luminosity. Once we know the luminosity, or radiant power, of a Cepheid, we can compare it with how bright or dim the star appears and so estimate the distance of its galaxy from us.
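The comparison between intrinsic luminosity and apparent brightness can be illustrated with the standard distance-modulus relation, m - M = 5 log10(d / 10 pc). The short Python sketch below is only an illustration: the magnitudes in the example are made-up values, and it ignores real-world corrections such as dimming by dust.

```python
import math


def distance_parsecs(apparent_mag: float, absolute_mag: float) -> float:
    """Distance from the distance modulus m - M = 5 * log10(d / 10 pc)."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


if __name__ == "__main__":
    # Hypothetical Cepheid: absolute magnitude -4 (known from its pulsation
    # period), observed at apparent magnitude 24.
    d_pc = distance_parsecs(apparent_mag=24.0, absolute_mag=-4.0)
    print(f"Distance: {d_pc / 1e6:.2f} megaparsecs")
    # Sanity check: a star whose apparent and absolute magnitudes are equal
    # sits at exactly 10 parsecs by definition.
    print(math.isclose(distance_parsecs(0.0, 0.0), 10.0))
```

Because the distance depends exponentially on the magnitude difference, small errors in measured brightness, for example from neighboring stars blending into the image, translate into noticeable errors in distance.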

Edwin Hubble used Leavitt’s method to measure the distances to a set of galaxies hosting Cepheid variables, and in 1929 he noticed that galaxies farther away from us are receding faster. This finding meant that the universe is expanding. Hubble calculated the expansion rate to be a constant 500 km/s per megaparsec. In other words, two galaxies that are 1 megaparsec, or approximately 3.26 million light years, apart are moving away from each other at a speed of 500 km/s.
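The relationship Hubble found, recession speed proportional to distance, can be written as v = H0 × d. The following minimal Python sketch applies it with Hubble’s original value and with the modern values quoted in this article; the function names and the rounded conversion factor are illustrative choices, not part of any cited analysis.

```python
LIGHT_YEARS_PER_PARSEC = 3.2616  # rounded conversion factor


def recession_velocity_km_s(h0_km_s_per_mpc: float, distance_mpc: float) -> float:
    """Hubble's law: v = H0 * d, with d in megaparsecs and v in km/s."""
    return h0_km_s_per_mpc * distance_mpc


def mpc_to_million_light_years(distance_mpc: float) -> float:
    """Convert megaparsecs to millions of light years."""
    return distance_mpc * LIGHT_YEARS_PER_PARSEC


if __name__ == "__main__":
    print(f"1 Mpc is about {mpc_to_million_light_years(1.0):.2f} million light years")
    # Hubble's 1929 value, then the two modern values discussed in the article.
    for h0 in (500.0, 73.0, 67.4):
        v = recession_velocity_km_s(h0, distance_mpc=10.0)
        print(f"H0 = {h0:5.1f} km/s/Mpc -> galaxy at 10 Mpc recedes at {v:.0f} km/s")
```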

As the calibration of the relationship between the pulsation period of Cepheids and their luminosity improved, so did measurements of the Hubble constant. However, since Cepheids are only so bright, the approach has limited reach. Scientists needed a new way to measure the distances to more remote galaxies.

In the 1970s, researchers used Cepheids to calibrate the distances to bright supernovae, and in this way they achieved more accurate measurements of the Hubble constant. Then, as now, two research groups took on the measurements, and using supernovae and Cepheids they arrived at conflicting values of 50 km/s per megaparsec and 100 km/s per megaparsec. No agreement was reached, and the field became completely polarized.

Edwin Hubble, the American astronomer who discovered the expansion of the universe, stands next to the Schmidt telescope at the Palomar Observatory in this photo from 1949.


The launch of the Hubble Space Telescope in 1990 gave astronomers a new and multi-layered view of the universe. Freedman led a multi-year observing campaign with Hubble, and in 2001 she and her colleagues estimated the expansion rate to be 72 km/s/Mpc with an uncertainty of at most 10%.

Riess, a Nobel laureate for the discovery of dark energy, got into the expansion game a few years later. In 2011, his group found the Hubble constant to be 73 km/s per megaparsec with a three percent uncertainty. Soon after this, cosmologists made progress by another route. In 2013, they used the Planck telescope’s observations of light left over from the early universe to determine the detailed shape and composition of the early universe.

In the next step, the researchers plugged their findings into Einstein’s general theory of relativity and evolved the theoretical model forward approximately 14 billion years to predict the current state of the universe. Based on these calculations, the universe should be expanding at a rate of about 67.4 km/s per megaparsec, with an uncertainty of less than one percent.

The measurement by Riess’ team remained at 73, even as its accuracy improved. This higher value indicates that galaxies today are moving away from each other faster than theory expects. This is how the Hubble tension was born. According to Riess, today’s Hubble tension shows us that something is missing from the cosmological model.
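The size of the gap between the two numbers quoted above is simple arithmetic, sketched here in Python. Only the central values from this article (73 and 67.4 km/s per megaparsec) are used; the sketch deliberately does not attempt a statistical significance, which would need the full error budgets of both measurements.

```python
def percent_difference(measured: float, predicted: float) -> float:
    """Relative excess of the measured value over the predicted one, in percent."""
    return 100.0 * (measured - predicted) / predicted


if __name__ == "__main__":
    measured_h0 = 73.0   # km/s/Mpc, Riess team's distance-ladder value
    predicted_h0 = 67.4  # km/s/Mpc, prediction from early-universe data
    gap = percent_difference(measured_h0, predicted_h0)
    print(f"Measured H0 exceeds the prediction by {gap:.1f}%")
```

This is the roughly 8 percent excess referred to earlier in the article.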

The missing factor could be the first new element in the universe to be discovered since dark energy. Theorists still have doubts about the identity of this agent. Perhaps this force is some kind of repulsive energy that lasted for a short time in the early universe, or perhaps it is the primordial magnetic fields created during the Big Bang, or perhaps what is being missed is more about ourselves than the universe.

Ways of seeing

Some cosmologists, including Freedman, suspected that unknown errors were to blame for the Hubble tension. For example, Cepheid variable stars are located in the disks of younger galaxies, in regions full of stars, dust, and gas. Even with Hubble’s fine resolution, you don’t see a single Cepheid variable on its own, according to George Efstathiou, an astrophysicist at the University of Cambridge. Rather, you see it overlapping with other stars. This crowding of stars makes brightness measurements difficult.

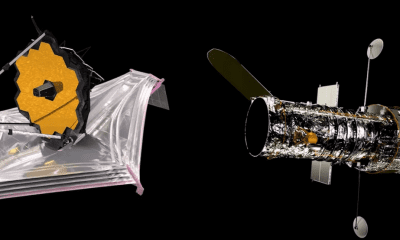

When the James Webb Space Telescope launched in 2021, Riess and his colleagues used its powerful infrared camera to peer into the crowded regions that host Cepheid variables. They wanted to know whether the claims of Freedman and other researchers about the effect of crowding on the observations were correct.

The 6.5-meter multi-section mirror of the James Webb Space Telescope at NASA’s Goddard Space Flight Center in Maryland. This mirror passed various test stages in 2017.


When the researchers compared the new numbers to distances calculated from Hubble data, they saw a surprising match. The latest results from the James Webb telescope confirmed the Hubble constant measured with the Hubble telescope a few years earlier: 73 km/s/Mpc, give or take about one km/s/Mpc.

Concerned about crowding, Freedman turned to alternative stars that could serve as distance indicators. These stars are found in the outer reaches of galaxies, away from the crowd. One such group of stars lies at the “tip of the red giant branch,” or TRGB for short. A red giant is an old star with a puffy atmosphere that shines brightly in red light. As a red giant ages, it eventually begins to burn helium in its core, and at this point the star’s temperature and brightness suddenly decrease.

A typical galaxy has many red giants. If you plot the brightness of these stars against their temperature, you reach a point where the brightness drops off. The population of stars just before this drop is a good distance indicator, because in every galaxy such a population has a similar distribution of luminosity. By comparing the brightness of these populations, astronomers can estimate their relative distances.

The Hubble tension shows that the standard model of the cosmos is missing something

Regardless of the method used, physicists must measure the absolute distance of at least one galaxy as a reference point in order to calibrate the entire scale. Using the TRGB as a distance indicator is more complicated than using Cepheid variables. MacKinnon and colleagues used nine wavelength filters on the James Webb telescope to understand how the brightness of these stars relates to their color.

Astronomers are also turning to a new indicator: carbon-rich stars that belong to a group known as the “J-region asymptotic giant branch” (JAGB). These stars lie far from the bright disk of the galaxy and emit a lot of infrared light. However, it was not possible to observe them at great distances until the James Webb telescope was launched.

Freedman and her team applied for observing time with the James Webb Space Telescope in order to observe TRGB and JAGB stars, along with the more established distance indicator, Cepheid variables, in 11 galaxies.

The vanishing solution

On March 13, 2024, Freedman, Lee, and the rest of the team met in Chicago to reveal what they had been keeping from one another. Over the preceding months, they had split into three groups, each tasked with measuring the distances to 11 galaxies using one of three methods: Cepheid variable stars, TRGB stars, and JAGB stars.

These galaxies also host supernovae of the relevant type, so their distances can be used to calibrate the distances to supernovae in many more distant galaxies. Dividing the speed at which galaxies recede from us by their distance gives the value of the Hubble constant.
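The division described above can be sketched in a few lines of Python. This is only an illustration: the function name `hubble_constant` and the velocity and distance figures are made up for the example and are not taken from the team's data.

```python
def hubble_constant(velocity_km_s: float, distance_mpc: float) -> float:
    """Return the expansion rate in km/s/Mpc: recession velocity over distance."""
    if distance_mpc <= 0:
        raise ValueError("distance must be positive")
    return velocity_km_s / distance_mpc


# Hypothetical galaxy receding at 7,000 km/s and measured to be 100 Mpc away.
h0_original = hubble_constant(7000.0, 100.0)

# Same velocity, but the measured distance is revised downward to 96 Mpc.
h0_revised = hubble_constant(7000.0, 96.0)

print(h0_original)           # 70.0
print(round(h0_revised, 2))  # 72.92
```

Because the distance sits in the denominator, any revision that brings the galaxies closer pushes the inferred Hubble constant up, and vice versa.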

Wendy Friedman at the University of Chicago is trying to fit the James Webb Telescope observations into the Standard Cosmological Model.


Each of the three groups calculated its distance measurements with a unique random offset added to the data, so that no one could see the real result while working. During the face-to-face session, they removed those offsets and compared the results.
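The idea of hiding a result behind a random value and removing it later can be sketched as follows. This is a minimal illustration, assuming a single additive offset per group; the article does not say how the team's offsets were actually applied, and the function names and distances here are hypothetical.

```python
import random


def blind(distances_mpc, rng):
    """Shift every distance by one secret random offset; return both."""
    offset = rng.uniform(-5.0, 5.0)  # assumed range, for illustration only
    return [d + offset for d in distances_mpc], offset


def unblind(blinded_mpc, offset):
    """Remove the secret offset to recover the real distances."""
    return [d - offset for d in blinded_mpc]


true_distances = [10.0, 20.0, 30.0]  # made-up values in Mpc
blinded, secret = blind(true_distances, random.Random(0))

# Analysts work only with `blinded`; the offset is removed at the reveal.
recovered = unblind(blinded, secret)
```

While the offset is in place, analysts can still check their method for internal consistency, but they cannot tell whether their answer favors a high or a low Hubble constant.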

All three methods yielded similar distances, with three percent uncertainty. Finally, the group calculated three values of the Hubble constant, one for each distance indicator. All of the values fell within range of the theoretical prediction of 67.4, so the Hubble tension seemed to be resolved. However, the team ran into problems during the further analysis needed to write up the results.

The JAGB analysis held up, but the other two had problems. The team found that the TRGB measurements had large error bars. They tried to reduce the errors by including more TRGB stars, but when they did so, they found that the distances to the galaxies were smaller than they had first thought. This change caused the value of the Hubble constant to increase.

Freedman’s team also discovered an error in the Cepheid analysis: for almost half of the pulsating stars, the crowding correction had been applied twice. Correcting this error increased the value of the Hubble constant significantly. The Hubble tension was revived.

Finally, after efforts to fix the errors, the researchers’ paper presents three distinct values of the Hubble constant. The JAGB measurement yielded a result of 67.96 km/s/Mpc. The TRGB result was 69.85, with similar error margins. The Cepheid variable method gave a higher value of 72.05. In this way, different assumptions about the characteristics of these stars caused the value of the Hubble constant to vary from 69 to 73.

By combining these methods and their uncertainties, the team obtained an average Hubble constant of 69.96 with an uncertainty of four percent. This margin of error overlaps with the theoretical prediction of the expansion rate of the universe, as well as with the higher value from Riess’s team.
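The overlap described above can be checked with simple arithmetic. The sketch below treats each quoted uncertainty as a hard interval around the central value, which is a simplification of how error bars are really compared. The helper names are hypothetical, the one percent used for the theoretical prediction is the upper bound quoted in the article, and the plus or minus one km/s/Mpc for the direct measurement is the "one kilometer or so" mentioned earlier.

```python
def percent_interval(value, percent):
    """Interval spanning value +/- the given percentage of value."""
    half_width = value * percent / 100.0
    return (value - half_width, value + half_width)


def overlaps(a, b):
    """True if the two closed intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


combined = percent_interval(69.96, 4.0)  # roughly 67.16 to 72.76
theory = percent_interval(67.4, 1.0)     # roughly 66.73 to 68.07
direct = (73.0 - 1.0, 73.0 + 1.0)        # 72.0 to 74.0

print(overlaps(combined, theory))  # True
print(overlaps(combined, direct))  # True
print(overlaps(theory, direct))    # False
```

Under these assumptions the combined value is compatible with both camps, while the theoretical prediction and the direct measurement remain incompatible with each other, which is the tension itself.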

Tensions and resolutions

The James Webb Space Telescope has also made new methods of measuring the Hubble constant possible. The idea behind one of them is simple: closer galaxies look grainier, because you can make out some of their individual stars, while more distant galaxies have a smoother, more uniform appearance.

Another method, called gravitational lensing, is more promising. A massive galaxy cluster acts like a magnifying glass, bending and magnifying the light of a background object and creating multiple images of it as the light takes different paths.

Brenda Frye, an astronomer at the University of Arizona, is leading a program to observe seven clusters with the James Webb Space Telescope. Looking at the first images they captured last year of the G165 cluster, Frye and her colleagues noticed three spots that had not been seen in earlier images. These three points were in fact separate images of a supernova located behind the cluster.

After repeating the observation several times, the researchers calculated the differences between the arrival times of the three gravitationally lensed images of the supernova. This time delay is inversely proportional to the Hubble constant and can be used to calculate its value. The group obtained an expansion rate of 75.4 km/s/Mpc with a large uncertainty of 8.1%. Frye expects the error bars to shrink after several years of similar measurements.

Both Freedman’s and Riess’s teams predict that they will be able to get a better answer with James Webb’s observations in the coming years. “With improved data, the Hubble tension will eventually be resolved, and I think we’ll get to the bottom of it very quickly,” Freedman says.
